In this blog, we cover reading and writing data in Azure Databricks. Azure Databricks supports day-to-day data-handling operations such as reading, writing, and querying.

Topics We’ll Cover:

- Azure Databricks

- File types to read and write in Azure Databricks

- Table batch read and write

- Perform read and write operations in Azure Databricks

We use Azure Databricks to read multiple file types, both with and without a schema; to combine inputs from files and data stores such as Azure SQL Database; and to transform and store that data for advanced analytics.

What Is Azure Databricks?

Azure Databricks is a data analytics platform optimized for the Microsoft Azure cloud services platform. Azure Databricks offers three environments for developing data-intensive applications: Databricks SQL, Databricks Data Science & Engineering, and Databricks Machine Learning.

Check out our related blog here: Azure Databricks For Beginners

Azure Databricks is a fully managed service that provides powerful ETL, analytics, and machine learning capabilities. Unlike offerings from other vendors, it is a first-party service on Azure that integrates seamlessly with other Azure services such as Event Hubs and Cosmos DB.

File Types to Read and Write in Azure Databricks

- CSV Files

- JSON Files

- Parquet Files

CSV Files

When reading CSV files with a specified schema, the data in the files may not match the schema. For example, a field containing a city name will not parse as an integer. The consequences depend on the mode the parser runs in:

- PERMISSIVE (default): nulls are inserted for fields that could not be parsed correctly

- DROPMALFORMED: drops lines that contain fields that could not be parsed

- FAILFAST: aborts the read if any malformed data is found
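To make the three modes concrete, here is a plain-Python sketch that mimics them on a tiny, hypothetical CSV with a city (string) column and a units (integer) column. It is not Spark's parser, only a simulation of the behaviour described above; in Spark itself you pick the mode with the CSV reader option "mode", for example .option("mode", "DROPMALFORMED").

```python
import csv
import io

# Hypothetical schema for illustration: (column name, type converter) pairs.
SCHEMA = [("city", str), ("units", int)]

# Hypothetical sample: the second record has a city name where an integer is expected.
SAMPLE = "Paris,120\nOslo,Madrid\nRome,95\n"

MODES = ("PERMISSIVE", "DROPMALFORMED", "FAILFAST")


def parse_csv(text, mode="PERMISSIVE"):
    """Parse CSV text against SCHEMA, imitating Spark's three parser modes."""
    if mode not in MODES:
        raise ValueError(f"Unknown mode: {mode}")
    rows = []
    for line_no, record in enumerate(csv.reader(io.StringIO(text)), start=1):
        parsed, malformed = [], False
        for (_name, convert), raw in zip(SCHEMA, record):
            try:
                parsed.append(convert(raw))
            except ValueError:
                malformed = True
                parsed.append(None)  # the field that failed to parse becomes null
        if malformed and mode == "FAILFAST":
            # Abort the whole read on the first malformed record.
            raise ValueError(f"Malformed record on line {line_no}: {record}")
        if malformed and mode == "DROPMALFORMED":
            continue  # skip the whole record
        rows.append(tuple(parsed))
    return rows


if __name__ == "__main__":
    for mode in ("PERMISSIVE", "DROPMALFORMED"):
        print(mode, parse_csv(SAMPLE, mode))
```

With this sample, PERMISSIVE keeps all three rows with None for Oslo's units, DROPMALFORMED returns only the Paris and Rome rows, and FAILFAST raises on line 2.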

JSON Files

You can read JSON files in single-line or multi-line mode. In single-line mode, a file can be split into many parts and read in parallel. In multi-line mode, a file is loaded as a whole entity and cannot be split.

Multi-Line Mode

In this example, a JSON array of objects spans multiple lines:

    [
      {"string":"string1","int":1,"array":[1,2,3],"dict": {"key": "value1"}},
      {"string":"string2","int":2,"array":[2,4,6],"dict": {"key": "value2"}},
      {
        "string": "string3",
        "int": 3,
        "array": [
          3,
          6,
          9
        ],
        "dict": {
          "key": "value3",
          "extra_key": "extra_value3"
        }
      }
    ]

Single-Line Mode

In this example, there is one JSON object per line:

    {"string":"string1","int":1,"array":[1,2,3],"dict": {"key": "value1"}}
    {"string":"string2","int":2,"array":[2,4,6],"dict": {"key": "value2"}}
    {"string":"string3","int":3,"array":[3,6,9],"dict": {"key": "value3", "extra_key": "extra_value3"}}
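The practical difference between the two modes is splittability. The standard-library sketch below, using hypothetical sample strings, parses the single-line layout chunk by chunk (as parallel readers could) and shows that the same chunking breaks on the multi-line layout, which has to be parsed as one document. In Spark the layout is chosen with the reader option "multiLine", for example spark.read.option("multiLine", "true").json(path); single-line is the default.

```python
import json

# Hypothetical samples holding the same two records in each layout.
MULTI_LINE = """[
  {"string": "string1", "int": 1},
  {
    "string": "string2",
    "int": 2
  }
]"""

SINGLE_LINE = """{"string": "string1", "int": 1}
{"string": "string2", "int": 2}"""


def parse_whole(text):
    """Multi-line style: the entire text is one JSON document parsed as a unit."""
    doc = json.loads(text)
    return doc if isinstance(doc, list) else [doc]


def parse_in_chunks(text, n_chunks=2):
    """Single-line style: split the lines into chunks and parse each chunk on its own,
    the way independent workers could each read one part of the file."""
    lines = [line for line in text.splitlines() if line.strip()]
    size = max(1, -(-len(lines) // n_chunks))  # ceiling division
    records = []
    for start in range(0, len(lines), size):
        chunk = lines[start:start + size]
        records.extend(json.loads(line) for line in chunk)
    return records


if __name__ == "__main__":
    print(parse_whole(MULTI_LINE))
    print(parse_in_chunks(SINGLE_LINE))
```

Calling parse_in_chunks on MULTI_LINE fails with json.JSONDecodeError, because a lone "[" line is not a complete JSON document.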

Parquet Files

Apache Parquet is a columnar file format that provides optimizations to speed up queries, and it is typically far more efficient than CSV or JSON for analytical workloads.
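Part of Parquet's advantage comes from its columnar layout: the values of one column are stored together, so a query that needs only a few columns can skip the rest. The toy sketch below, with hypothetical sales data, shows only that layout idea in plain Python; real Parquet files add encodings, compression, and per-column statistics on top of it.

```python
# Hypothetical sales records, one dict per row (the shape a CSV or JSON reader yields).
ROWS = [
    {"country": "France", "region": "Europe", "units": 120},
    {"country": "Japan", "region": "Asia", "units": 80},
    {"country": "Kenya", "region": "Africa", "units": 45},
]


def to_columnar(rows):
    """Pivot row-oriented records into one list per column (a column-store layout)."""
    columns = {}
    for row in rows:
        for name, value in row.items():
            columns.setdefault(name, []).append(value)
    return columns


def total_units(columns):
    """Aggregate one column; the other columns are never read."""
    return sum(columns["units"])


if __name__ == "__main__":
    print(total_units(to_columnar(ROWS)))
```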

Table Batch Reads and Writes

Delta Lake supports most of the options provided by Apache Spark DataFrame read and write APIs for performing batch reads and writes on tables.

1.) Read a Table

You can load a Delta table as a DataFrame by specifying a table name or a path:

spark.table("default.people10m") # query table in the metastore

spark.read.format("delta").load("/tmp/delta/people10m") # query table by path

2.) Write to a Table

To atomically add new data to an existing Delta table, use append mode:

df.write.format("delta").mode("append").save("/tmp/delta/people10m")

df.write.format("delta").mode("append").saveAsTable("default.people10m")
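Append is one of several save modes on the DataFrame writer; Spark also accepts overwrite, ignore, and error/errorifexists (the default). The toy model below uses a plain dict as a hypothetical table store to contrast them. It is not how Delta Lake works internally (Delta commits changes through its transaction log), and FileExistsError merely stands in for the exception Spark would raise.

```python
def save(tables, name, rows, mode="errorifexists"):
    """Toy model of DataFrameWriter save modes over an in-memory dict of tables."""
    exists = name in tables
    if mode == "append":
        # Build the combined list first and publish it with one assignment,
        # so a reader sees either the old table or the new one, never a partial append.
        tables[name] = tables.get(name, []) + list(rows)
    elif mode == "overwrite":
        tables[name] = list(rows)
    elif mode == "ignore":
        if not exists:  # silently do nothing if the table is already there
            tables[name] = list(rows)
    elif mode in ("error", "errorifexists"):
        if exists:
            raise FileExistsError(f"Table {name!r} already exists")
        tables[name] = list(rows)
    else:
        raise ValueError(f"Unknown save mode: {mode}")


if __name__ == "__main__":
    people = {}
    save(people, "people10m", [("Ann", 34)])
    save(people, "people10m", [("Bo", 29)], mode="append")
    print(people["people10m"])
```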

Perform Read and Write Operation In Azure Databricks

Follow the steps below to perform read and write operations in Azure Databricks.

1. Provision the Required Resources

1. To provision an Azure Databricks workspace from the Azure portal, select Create a resource → Analytics → Databricks. Enter the required details and click Review + Create.

2. Create a Spark Cluster

1. Open the Azure Databricks workspace and click New Cluster.

5. The country sales data file is uploaded to DBFS and ready to use.

6. Click the DBFS tab to see the uploaded file and its FileStore path.


3. Read and Write The Data

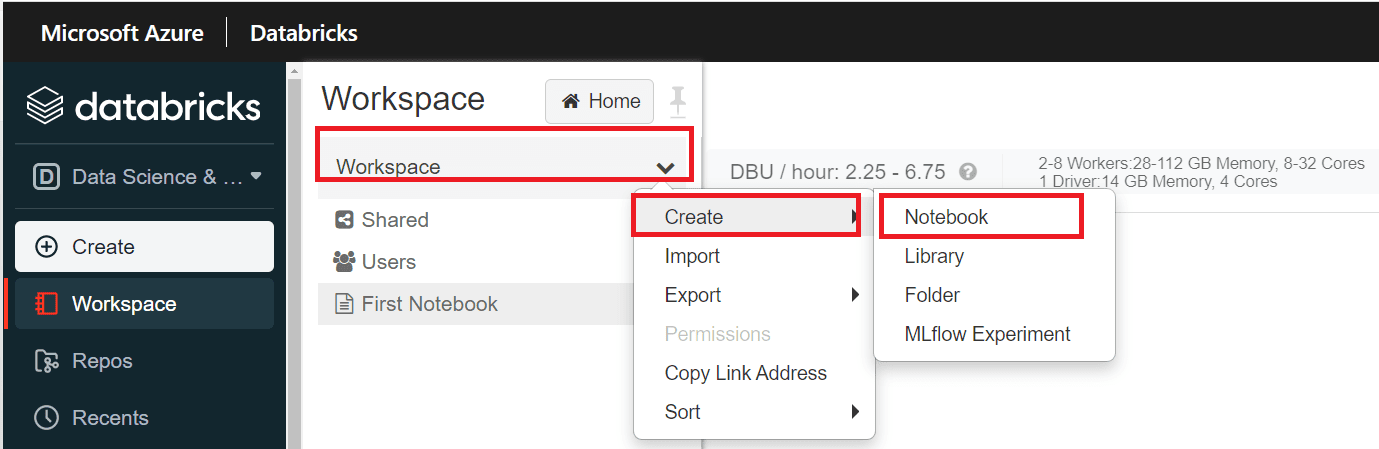

1. Open the Azure Databricks workspace and create a notebook.

2. Now it’s time to write some Python code to read the country sales CSV file and create a DataFrame.

    # File location and type
    file_location = "/FileStore/tables/Country_Sales_Records.csv"
    file_type = "csv"

    # CSV options
    infer_schema = "false"
    first_row_is_header = "false"
    delimiter = ","

    # The applied options are for CSV files. For other file types, these will be ignored.
    # `spark` and `display` are predefined in Databricks notebooks.
    df = spark.read.format(file_type) \
        .option("inferSchema", infer_schema) \
        .option("header", first_row_is_header) \
        .option("sep", delimiter) \
        .load(file_location)

    display(df)

Copy and paste the above code into a cell, change the file name to your own file name, and make sure the cluster is running and attached to the notebook.

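3. Run the cell by clicking Run Cell or pressing CTRL + ENTER. The code executes successfully, and I can see that the cluster created two Spark jobs to read and display the data from the Country Sales data file. Notice, however, that the schema is not quite right: every column is typed as String, and the header is wrong (the columns are named _c0, _c1, and so on). This is because infer_schema and first_row_is_header are both set to "false"; setting them to "true" makes Spark infer the column types and use the first row as column names.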
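To see what the header and inferSchema options change, here is a plain-Python sketch run on a small hypothetical CSV. It only imitates the two options: its type inference (int, then float, otherwise string) is much simpler than Spark's, which also recognises types such as booleans and timestamps.

```python
import csv
import io

# Hypothetical sample file; the real Country_Sales_Records.csv columns may differ.
SAMPLE = "Region,Country,Units Sold\nEurope,France,120\nAsia,Japan,80\n"


def infer_column_type(values):
    """Simplified inference: int if every value parses as int, else float, else str."""
    for candidate in (int, float):
        try:
            for value in values:
                candidate(value)
            return candidate
        except ValueError:
            continue
    return str


def read_csv(text, header=False, infer_schema=False):
    """Return (schema, rows) roughly the way the header/inferSchema options shape a DataFrame."""
    records = list(csv.reader(io.StringIO(text)))
    if header:
        names, records = records[0], records[1:]
    else:
        # Without a header row, columns get positional names _c0, _c1, ...
        names = [f"_c{i}" for i in range(len(records[0]))]
    types = [str] * len(names)
    if infer_schema:
        types = [infer_column_type([r[i] for r in records]) for i in range(len(names))]
    rows = [tuple(t(v) for t, v in zip(types, r)) for r in records]
    schema = [(name, t.__name__) for name, t in zip(names, types)]
    return schema, rows


if __name__ == "__main__":
    print(read_csv(SAMPLE))
    print(read_csv(SAMPLE, header=True, infer_schema=True))
```

With both options off, the header line becomes an ordinary data row and every column is a string; with both on, the columns get their real names and Units Sold becomes an integer.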

4. Create a Table and Query The Data Using SQL

1. Create a temporary view from the DataFrame and query the data using SQL.

2. Add a new cell to the notebook, paste the following code, and then run the cell:

    # Create a temporary view from the DataFrame
    tbl_country_sales = "Country_Sales"
    df.createOrReplaceTempView(tbl_country_sales)

Then query the view from a SQL cell:

    %sql
    SELECT * FROM `Country_Sales`

You can now run regular SQL against the temporary view and query the data however you like. However, the view is temporary: it is available only in this notebook and will be gone once the cluster restarts.

I created a new notebook and tried to access the view we just created, but it was not accessible from that notebook. To make the data available beyond the current notebook, save the DataFrame as a permanent table:

    # Save the DataFrame as a permanent table (Parquet format) in the metastore
    tbl_name = "tbl_Country_Sales"
    df.write.format("parquet").saveAsTable(tbl_name)

The permanent table is now created. It persists across cluster restarts, and other users can query it from different notebooks as well.
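The difference in scope between the temporary view and the saved table has a close analogue in SQLite, where TEMP objects belong to a single connection while ordinary tables live in the database file. The sketch below uses Python's built-in sqlite3, with each connection standing in for a notebook session and a reconnect standing in for a cluster restart. It is an analogy, not Spark's catalog, and the table names are hypothetical.

```python
import os
import sqlite3
import tempfile


def can_query(conn, name):
    """True if `name` resolves to a table or view for this connection."""
    try:
        # `name` is one of our own fixed identifiers, never user input.
        conn.execute(f"SELECT COUNT(*) FROM {name}").fetchone()
        return True
    except sqlite3.OperationalError:  # "no such table"
        return False


def demo(db_path):
    notebook_1 = sqlite3.connect(db_path)
    notebook_1.execute("CREATE TABLE sales_raw (country TEXT, units INTEGER)")
    notebook_1.executemany("INSERT INTO sales_raw VALUES (?, ?)", [("France", 120), ("Japan", 80)])
    # Session-scoped, like df.createOrReplaceTempView("Country_Sales")
    notebook_1.execute("CREATE TEMP VIEW country_sales AS SELECT * FROM sales_raw")
    # Persistent, like df.write.saveAsTable("tbl_Country_Sales")
    notebook_1.execute("CREATE TABLE tbl_country_sales AS SELECT * FROM sales_raw")
    notebook_1.commit()

    notebook_2 = sqlite3.connect(db_path)
    seen = {
        "view_in_notebook_1": can_query(notebook_1, "country_sales"),
        "view_in_notebook_2": can_query(notebook_2, "country_sales"),
        "table_in_notebook_2": can_query(notebook_2, "tbl_country_sales"),
    }
    notebook_1.close()
    notebook_2.close()

    restarted = sqlite3.connect(db_path)  # a fresh session, as after a restart
    seen["view_after_restart"] = can_query(restarted, "country_sales")
    seen["table_after_restart"] = can_query(restarted, "tbl_country_sales")
    restarted.close()
    return seen


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(demo(os.path.join(tmp, "catalog.db")))
```

Only the first connection can see the temporary view, and it disappears after the reconnect, while the saved table is visible everywhere.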



The post Reading and Writing Data In DataBricks appeared first on Cloud Training Program.