Kubernetes clusters are used to deploy containerized applications in the cloud. On Oracle Cloud Infrastructure (OCI), one way to run a containerized application on a Kubernetes cluster is Container Engine for Kubernetes (OKE).

Kubernetes Adoption in Cloud:

- AWS – Elastic Kubernetes Service (EKS)

- Microsoft Azure – Azure Kubernetes Service (AKS)

- Google Cloud Platform – Google Kubernetes Engine (GKE)

- Oracle Cloud – Container Engine for Kubernetes (OKE)

- DigitalOcean – DigitalOcean Kubernetes (DOKS)

Key Concepts Of Kubernetes

1) Kubernetes cluster: A group of nodes. Nodes are the machines that run the application; a node can be a physical machine or a virtual machine.

2) Container: A container is a runtime instance of a Docker image. It comprises three things: the image, an execution environment, and a standard set of instructions. Docker is one of several container technologies; others include Linux Containers (LXC), rkt (formerly Rocket), and Mesos containers.

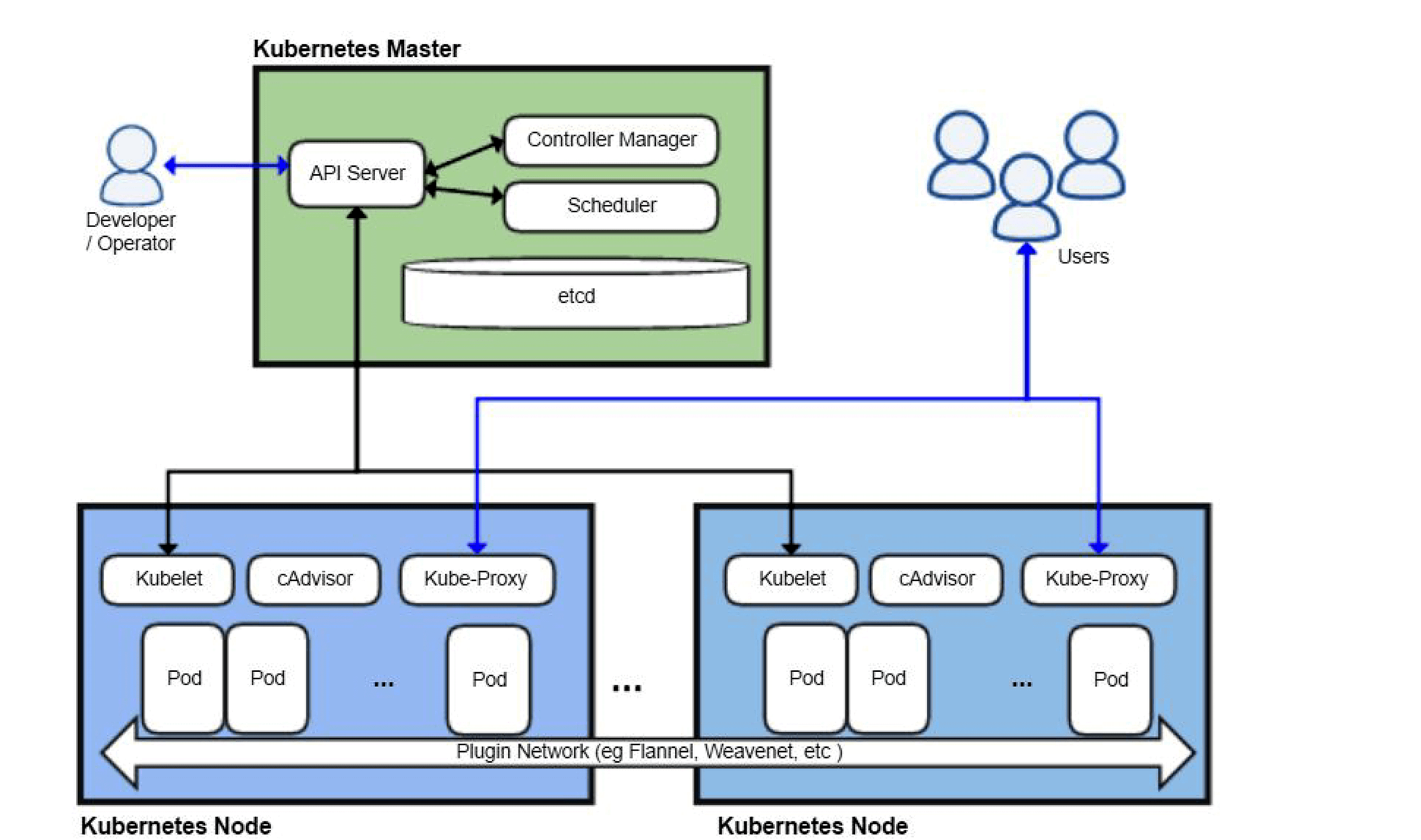

3) Types of nodes and their processes

- Master Node:

  - kube-apiserver: exposes the Kubernetes API, which the Kubernetes command-line tool (kubectl) and other clients use for cluster operations

  - kube-controller-manager: runs the controllers that manage Kubernetes components (for example, the replication, endpoint, and namespace controllers)

  - kube-scheduler: decides where in the cluster each workload runs

  - etcd: stores the cluster's configuration data

- Worker Node:

  - kubelet: the node agent that communicates with the master node

  - kube-proxy: handles networking on the node

4) Pods: A worker node runs many containers, and Kubernetes groups them into logical units called pods. A pod represents one or more processes running in the cluster. Pods that perform the same function can be grouped together into a service.

5) Manifest files / Pod specs: Files in JSON or YAML format that specify how to deploy an application on one or more nodes.

6) Node pools: Node pools let you create groups of machines with different configurations within one cluster, for example one node pool of virtual machines and another of bare-metal machines. A cluster must have at least one node pool, but a node pool need not contain any worker nodes.
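To make the pod, service, and manifest concepts above more concrete, here is a minimal sketch in Python that builds a Pod manifest and a Service manifest as JSON and checks that the service's label selector matches the pod. The names (`hello-web`, `hello-web-1`), the `nginx:1.25` image, and the helper functions are assumptions chosen for illustration; they are not part of OKE or of the original article.

```python
import json

# Hypothetical labels, names, and image, chosen only for illustration.
APP_LABELS = {"app": "hello-web"}

# A Pod manifest: one container, tagged with labels.
pod_manifest = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "hello-web-1", "labels": APP_LABELS},
    "spec": {
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.25",
                "ports": [{"containerPort": 80}],
            }
        ]
    },
}

# A Service manifest: groups every pod whose labels match the selector.
service_manifest = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "hello-web"},
    "spec": {
        "selector": APP_LABELS,
        "ports": [{"port": 80, "targetPort": 80}],
    },
}


def selects(selector, labels):
    """Return True when every selector key/value pair appears in the labels."""
    if not selector:
        # A Service without a selector does not pick up pods automatically.
        return False
    return all(labels.get(key) == value for key, value in selector.items())


def to_json(manifest):
    """Serialize a manifest to JSON, one of the two formats named above."""
    return json.dumps(manifest, indent=2, sort_keys=True)


if __name__ == "__main__":
    print(to_json(pod_manifest))
    print(to_json(service_manifest))
```

Saved to files, JSON like this could be applied with `kubectl apply -f <file>`; YAML is the more common choice in practice, but the structure is the same.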


Ways To Launch Kubernetes On Oracle

There are three main ways to run Kubernetes on OCI:

- Roll-your-own container management: Use OCI components to create a Kubernetes cluster yourself and deploy the container tooling of your choice, such as Docker, Kubernetes, or Mesos (the do-it-yourself model).

- Quickstart experience: An automated model that uses Terraform to build the components of a Kubernetes cluster (the Terraform code is available on GitHub).

- Container Engine for Kubernetes: OKE is the managed service in OCI for deploying a Kubernetes cluster in a few steps.

Container Engine for Kubernetes is a highly available, managed service in Oracle Cloud Infrastructure (OCI) for deploying cloud-native applications. We build an application as Docker containers and then deploy it on OCI using Kubernetes, which groups the application containers into logical units called pods. We can manage Container Engine for Kubernetes through the API or the Console.

By default, we can create three clusters (Monthly Flex pricing model) or one cluster (Pay-as-You-Go pricing model). Each cluster can have a maximum of 1,000 nodes, and each node can run a maximum of 110 pods.
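As a rough illustration of what these limits imply, the sketch below adds up the nodes across a cluster's node pools and computes the upper bound on pods. The two limits come from the figures quoted above; the `NodePool` class, the pool names, and the node counts are hypothetical. The result is only a ceiling, since the number of pods a node can actually run also depends on its CPU and memory.

```python
from dataclasses import dataclass

MAX_NODES_PER_CLUSTER = 1000  # limit quoted in the text
MAX_PODS_PER_NODE = 110       # limit quoted in the text


@dataclass
class NodePool:
    name: str        # hypothetical pool name
    node_count: int  # number of worker nodes in the pool


def total_nodes(pools):
    """Sum the worker nodes across all node pools of one cluster."""
    return sum(pool.node_count for pool in pools)


def pod_capacity(pools):
    """Upper bound on pods for a cluster, or ValueError if it has too many nodes."""
    nodes = total_nodes(pools)
    if nodes > MAX_NODES_PER_CLUSTER:
        raise ValueError(
            f"{nodes} nodes exceeds the limit of {MAX_NODES_PER_CLUSTER} per cluster"
        )
    return nodes * MAX_PODS_PER_NODE


# Example: one pool of virtual machines and one of bare-metal machines.
pools = [NodePool("vm-pool", 3), NodePool("bare-metal-pool", 2)]
print(pod_capacity(pools))  # 5 nodes * 110 pods per node = 550
```

At the limit, a single cluster tops out at 1,000 × 110 = 110,000 pods.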

Why Use Kubernetes

- Traditional deployment was costly: each application needed its own physical server, because running multiple applications on one server caused resource-allocation problems between them.

- Virtualized deployment solves this problem. We can host multiple virtual machines on one physical server, each virtual machine isolates the applications deployed on it, and the server's resources are better utilized.

- Container deployment is similar to VMs, but containers share the OS among applications and are therefore considered lightweight. Containers are a good way to bundle and run applications, but they need managing: for example, if a container goes down, another container should start in its place, and a system should handle this automatically. Kubernetes is such a system.
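The "if a container goes down, another should start" behaviour in the last point can be sketched as a toy reconciliation pass: compare the desired number of healthy containers with what is actually running, and start replacements for the difference. This is a simplified illustration of the idea only, not how Kubernetes controllers are implemented; the `reconcile` function and the container names are hypothetical, and scaling down is left out.

```python
import itertools


def reconcile(desired, containers, new_names):
    """Run one pass of a toy control loop.

    desired:    number of healthy containers we want
    containers: dict mapping container name -> True if healthy
    new_names:  iterator yielding names for replacement containers

    Returns a new dict in which failed containers are dropped and
    replacements are added until `desired` containers are healthy.
    """
    healthy = {name: True for name, ok in containers.items() if ok}
    while len(healthy) < desired:
        healthy[next(new_names)] = True  # "start" a replacement container
    return healthy


# Replacement names continue from web-3 onward.
names = (f"web-{i}" for i in itertools.count(3))

# web-2 has gone down, but we still want two healthy containers.
state = {"web-1": True, "web-2": False}
state = reconcile(2, state, names)
print(sorted(state))  # ['web-1', 'web-3']
```

Running the same pass again with nothing failed changes nothing, which is the property that lets such a loop run continuously.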

Access Kubernetes On Oracle Cloud

1) Creating a Kubernetes cluster: We can create a Kubernetes cluster in OCI in several ways:

- Using the Console (quick cluster)

- Using custom settings in the Console (custom cluster)

- Using the API


2) Modifying a cluster: We can modify the node details of a cluster that has already been created.

3) Deleting a cluster: When a cluster is no longer needed, we can delete it along with its master node, worker nodes, and node pools.

4) Monitoring a cluster: We can monitor the overall status of the cluster and its associated nodes. A cluster's status can be Creating, Active, Failed, Deleting, Deleted, or Updating.

5) Accessing a cluster: We can use the kubectl command-line tool to access the cluster and perform operations on it.
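The cluster states listed under monitoring lend themselves to a small polling helper: keep checking while the cluster is still changing, and stop once it settles. The sketch below is an assumption-laden illustration, not OCI's API. The `ClusterState` enum simply mirrors the six states named above, and `fetch_state` is a hypothetical callable that in practice would wrap a call to the OCI API or CLI; here it is fed a simulated sequence of observations.

```python
from enum import Enum


class ClusterState(Enum):
    CREATING = "creating"
    ACTIVE = "active"
    FAILED = "failed"
    DELETING = "deleting"
    DELETED = "deleted"
    UPDATING = "updating"


# States in which the cluster is still changing and worth polling again.
TRANSITIONAL = {
    ClusterState.CREATING,
    ClusterState.UPDATING,
    ClusterState.DELETING,
}


def wait_for_settled(fetch_state, max_polls=10):
    """Poll fetch_state() until the cluster leaves a transitional state.

    fetch_state is a hypothetical callable returning a ClusterState.
    A real loop would also sleep between polls.
    """
    for _ in range(max_polls):
        state = fetch_state()
        if state not in TRANSITIONAL:
            return state
    raise TimeoutError("cluster did not settle within max_polls")


# Simulated observations standing in for real API responses.
observed = iter(
    [ClusterState.CREATING, ClusterState.CREATING, ClusterState.ACTIVE]
)
print(wait_for_settled(lambda: next(observed)).value)  # active
```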

Benefits Of Using Oracle Kubernetes (OKE)

- Applications are easy and economical to build and maintain.

- Easy to integrate Kubernetes with a container registry using OKE.

- Makes it practical for developers to deploy and manage containerized applications in the cloud.

- Combines the open-source container orchestration of Kubernetes with Oracle services for control, IAM, security, and more.

The post Container Engine For Kubernetes (OKE) In Oracle Cloud (OCI) appeared first on Oracle Trainings.
