Recurrent neural networks (RNNs) are part of a larger family of algorithms known as sequence models. Sequence models have made giant leaps forward in speech recognition, music generation, DNA sequence analysis, machine translation, and many other fields.

In this blog, we are going to cover:

- What are Recurrent Neural Networks (RNN)?

- Input and Output Sequences of RNN

- Training Recurrent Neural Networks (RNN)

- Long Short-Term Memory (LSTM)

- Advantages of RNNs

- Disadvantages of RNNs

- Applications of RNNs

- Conclusion

What Are Recurrent Neural Networks (RNN)?

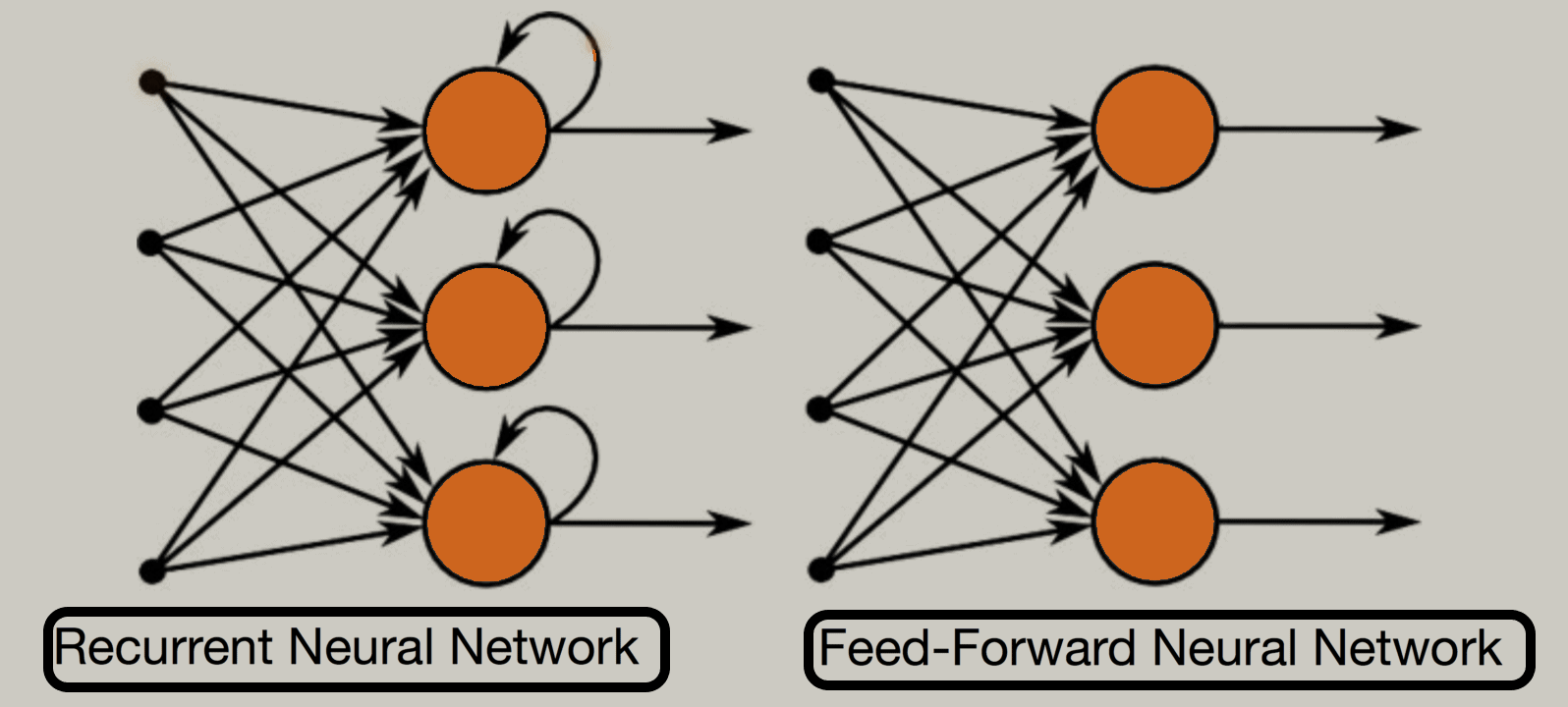

- An RNN remembers the past, and its decisions are influenced by what it has learned from the past.

- Simple feedforward networks “remember” things too, but they only remember what they learned during training.

- A recurrent neural network looks very much like a feedforward neural network, except it also has connections pointing backward.

- At each time step t (also called a frame), the RNN receives the inputs x(t) as well as its own output from the previous time step, y(t–1). Since there is no previous output at the first time step, it is usually set to 0.

- You can easily create a layer of recurrent neurons. At each time step t, every neuron receives both the input vector x(t) and the output vector from the previous time step, y(t–1).
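The recurrence described above can be sketched in a few lines of plain Python. This is only an illustrative sketch: the `tanh` activation, the function names, and the toy weights `W_x`, `W_y`, and `b` are assumptions made for the example, not something this post prescribes.

```python
import math

def recurrent_layer_step(x_t, y_prev, W_x, W_y, b):
    """One time step of a layer of recurrent neurons.

    Neuron i sees the input vector x(t) through row W_x[i] and the
    previous output vector y(t-1) through row W_y[i].
    """
    outputs = []
    for i in range(len(b)):
        z = b[i]
        z += sum(w * x for w, x in zip(W_x[i], x_t))
        z += sum(w * y for w, y in zip(W_y[i], y_prev))
        outputs.append(math.tanh(z))  # tanh is an assumed activation
    return outputs

def run_layer(xs, W_x, W_y, b):
    """Feed a whole input sequence through the layer, one step at a time."""
    y = [0.0] * len(b)  # no previous output at the first time step
    ys = []
    for x_t in xs:
        y = recurrent_layer_step(x_t, y, W_x, W_y, b)
        ys.append(y)
    return ys

# Toy layer with 2 inputs and 2 recurrent neurons (made-up weights).
W_x = [[0.5, -0.3], [0.1, 0.8]]
W_y = [[0.2, 0.0], [-0.4, 0.3]]
b = [0.0, 0.1]
xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
ys = run_layer(xs, W_x, W_y, b)  # one output vector per time step
```

Note that the same weights are reused at every time step; only the previous output `y` changes as the sequence is processed.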

Input And Output Sequences Of RNN

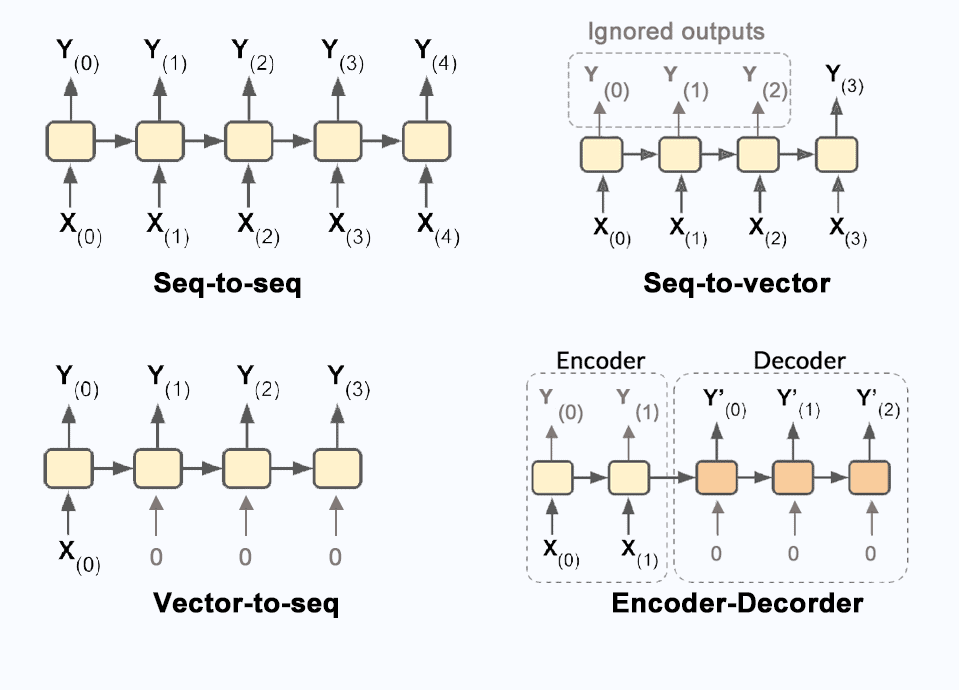

- An RNN can simultaneously take a sequence of inputs and produce a sequence of outputs.

- This type of sequence-to-sequence network is useful for predicting time series such as stock prices: you feed it the prices over the last N days, and it must output the prices shifted by one day into the future.

- You can feed the network a sequence of inputs and ignore all outputs except for the last one. In other words, this is a sequence-to-vector network.

- You can feed the network the same input vector over and over again at each time step and let it output a sequence. This is a vector-to-sequence network.

- You can have a sequence-to-vector network, referred to as an encoder, followed by a vector-to-sequence network, called a decoder.
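To make the stock price example concrete, here is a small sketch of how the training pairs for a sequence-to-sequence and a sequence-to-vector network might be built. The helper name `make_windows` and the price values are invented for illustration.

```python
def make_windows(prices, n):
    """Build (inputs, targets) pairs from a price series.

    inputs  = prices over n consecutive days
    targets = the same window shifted one day into the future
    """
    pairs = []
    for start in range(len(prices) - n):
        inputs = prices[start:start + n]
        targets = prices[start + 1:start + n + 1]
        pairs.append((inputs, targets))
    return pairs

prices = [10.0, 11.0, 12.5, 12.0, 13.0, 14.5]  # made-up values

# Sequence-to-sequence: one target per input time step.
seq_to_seq = make_windows(prices, n=3)

# Sequence-to-vector: ignore all targets except the last one.
seq_to_vec = [(inputs, targets[-1]) for inputs, targets in seq_to_seq]
```

In the sequence-to-sequence case every time step contributes to the cost, while in the sequence-to-vector case only the final output is compared against a target.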

Training Recurrent Neural Networks (RNN)

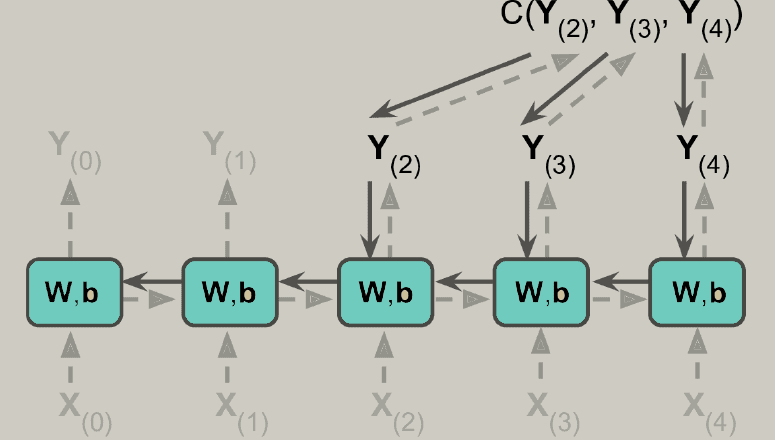

- To train an RNN, the trick is to unroll it through time and then simply use regular backpropagation. This strategy is known as backpropagation through time (BPTT).

- First, there is a forward pass through the unrolled network. Then the output sequence is evaluated using a cost function C.

- The gradients of that cost function are then propagated backward through the unrolled network.

- Finally, the model parameters are updated using the gradients computed during BPTT.
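The four steps above can be sketched for the smallest possible case: a single recurrent neuron with scalar input. Everything specific here is an assumption made for illustration: the `tanh` activation, the squared-error cost, the learning rate, the starting weights, and the toy data.

```python
import math

def forward(w_x, w_y, b, xs):
    """Forward pass through the unrolled network."""
    y, ys = 0.0, []
    for x in xs:
        y = math.tanh(w_x * x + w_y * y + b)
        ys.append(y)
    return ys

def cost(ys, targets):
    """Cost function C: half the sum of squared errors over all time steps."""
    return 0.5 * sum((y - t) ** 2 for y, t in zip(ys, targets))

def bptt(w_x, w_y, b, xs, targets):
    """Return C and its gradients with respect to w_x, w_y and b."""
    ys = forward(w_x, w_y, b, xs)
    g_wx = g_wy = g_b = 0.0
    carry = 0.0  # gradient flowing back into y(t) from time step t+1
    for t in reversed(range(len(xs))):
        dy = (ys[t] - targets[t]) + carry
        dz = dy * (1.0 - ys[t] ** 2)  # derivative of tanh
        y_prev = ys[t - 1] if t > 0 else 0.0
        g_wx += dz * xs[t]
        g_wy += dz * y_prev
        g_b += dz
        carry = w_y * dz
    return cost(ys, targets), (g_wx, g_wy, g_b)

def train(xs, targets, steps=200, lr=0.1):
    """Plain gradient descent using the BPTT gradients."""
    w_x, w_y, b = 0.1, 0.1, 0.0
    history = []
    for _ in range(steps):
        c, (g_wx, g_wy, g_b) = bptt(w_x, w_y, b, xs, targets)
        history.append(c)
        w_x -= lr * g_wx
        w_y -= lr * g_wy
        b -= lr * g_b
    return (w_x, w_y, b), history

xs = [0.5, -0.2, 0.1, 0.4]       # made-up input sequence
targets = [0.3, 0.1, 0.0, 0.2]   # made-up target sequence
params, history = train(xs, targets)
```

Because the weights are shared across time steps, the gradient for each weight is the sum of its contributions from every step of the unrolled network.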

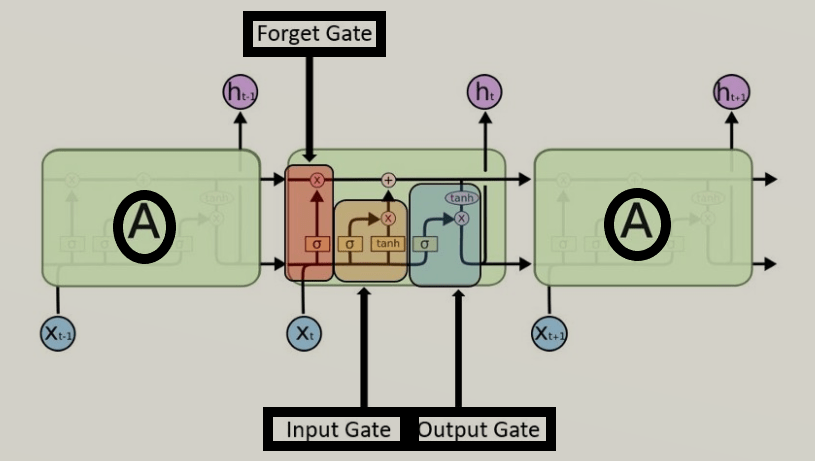

Long Short-Term Memory (LSTM)

- LSTMs are a special kind of recurrent neural network, capable of learning long-term dependencies.

- Remembering information for long periods of time is their default behavior.

- Each LSTM module has three gates: the forget gate, the input gate, and the output gate.

- Forget gate: decides which information should be discarded from the cell at a given time step. This is determined by a sigmoid function.

- Input gate: decides how much new information is added to the current cell state. A sigmoid function decides which values to let through (between 0 and 1), and a tanh function gives weight to the values that are passed, determining their level of importance on a scale from -1 to 1.

- Output gate: decides which part of the current cell state makes it to the output. A sigmoid function decides which values to let through (between 0 and 1), and a tanh function gives weight to the cell state values, determining their level of importance on a scale from -1 to 1. The tanh result is multiplied by the output of the sigmoid.
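The three gates can be written out as one step of a single-unit LSTM cell. This sketch follows the commonly used LSTM formulation; the function names, the dictionary layout of the parameters, and the parameter values are hypothetical and chosen only to keep the example short.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x_t, h_prev, c_prev, p):
    """One time step of a single-unit LSTM cell.

    p maps a gate name to a (w_x, w_h, bias) tuple (hypothetical layout).
    Returns the new output h(t) and the new cell state c(t).
    """
    def pre(name):
        w_x, w_h, bias = p[name]
        return w_x * x_t + w_h * h_prev + bias

    f = sigmoid(pre("forget"))        # how much of the old cell state to keep
    i = sigmoid(pre("input"))         # how much of the candidate to let in
    g = math.tanh(pre("candidate"))   # candidate value, between -1 and 1
    o = sigmoid(pre("output"))        # how much of the cell state to expose

    c_t = f * c_prev + i * g
    h_t = o * math.tanh(c_t)
    return h_t, c_t

# Made-up parameters, only to show the call pattern.
params = {
    "forget": (0.5, 0.1, 1.0),
    "input": (0.4, -0.2, 0.0),
    "candidate": (0.9, 0.3, 0.0),
    "output": (0.6, 0.2, 0.0),
}

h, c = 0.0, 0.0
for x_t in [1.0, 0.5, -0.5]:
    h, c = lstm_step(x_t, h, c, params)
```

The cell state `c` is only rescaled by the forget gate and added to, which is what lets information persist over many time steps.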

Advantages Of RNNs

- The main advantage of an RNN over a standard feedforward ANN is that an RNN can model a sequence of data (e.g. a time series) so that each sample can be assumed to depend on the previous ones.

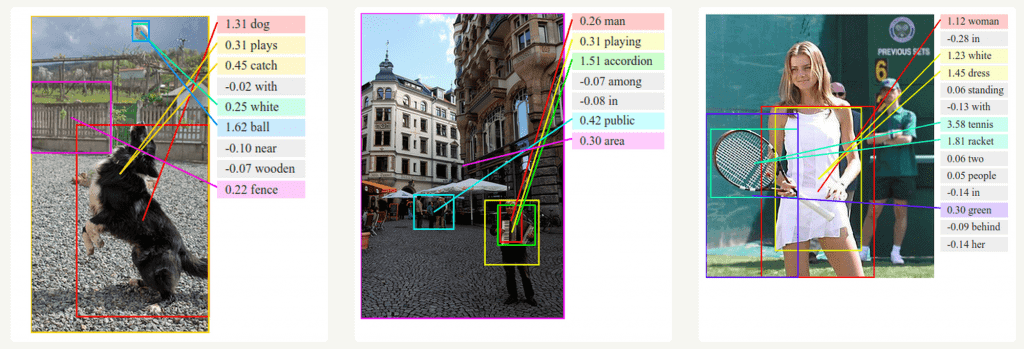

- Recurrent neural networks are also used together with convolutional layers to extend the effective pixel neighborhood.

Disadvantages Of RNNs

- Exploding and vanishing gradient problems.

- Training an RNN is a very difficult task.

- It cannot process very long sequences when using tanh or ReLU as an activation function.
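A rough way to see where exploding and vanishing gradients come from is to look at a simplified linear recurrence y(t) = w * y(t-1). This is an assumption made purely for illustration, with no activation function and a single hypothetical weight `w`. The gradient of the last output with respect to the first is then `w` multiplied by itself once per time step.

```python
def gradient_factor(w, steps):
    """d y(T) / d y(0) for the linear recurrence y(t) = w * y(t-1)."""
    factor = 1.0
    for _ in range(steps):
        factor *= w  # one multiplication per unrolled time step
    return factor

vanishing = gradient_factor(0.5, 50)  # shrinks toward zero
exploding = gradient_factor(1.5, 50)  # grows very large
```

With a tanh activation each step would also multiply in a derivative that is at most 1, which can only make the shrinking case worse over long sequences.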

Applications Of RNNs

- Text Generation

- Machine Translation

- Visual Search, Face detection, OCR

- Speech recognition

- Semantic Search

- Sentiment Analysis

- Anomaly Detection

- Stock Price Forecasting

Conclusion

- Recurrent neural networks stand at the foundation of many modern marvels of artificial intelligence. They provide a solid foundation for artificial intelligence applications to be more efficient, more flexible in their accessibility, and, most importantly, more convenient to use.

- The results of recurrent neural network work also show the real value of information in this day and age. They show how many things can be extracted from data and what this data can create in return. And that is incredibly inspiring.


The post Introduction to Recurrent Neural Networks (RNN) appeared first on Cloud Training Program.