A decision tree is a decision-support tool with a tree-like structure that models possible outcomes, resource costs, utilities, and possible consequences. Decision trees provide a way to present algorithms built from conditional control statements. Their branches represent decision-making steps that lead to a final result.

This blog post covers the following points:

1. Decision Tree

2. Why use Decision Tree

3. Decision Tree Terminologies

4. How Decision Tree Algorithm works

5. Advantages

6. Disadvantages

7. Real-Life Example

By the end of this blog post, you should be confident enough to identify the areas where you can use the decision tree algorithm. So, let’s get started!

Decision Tree

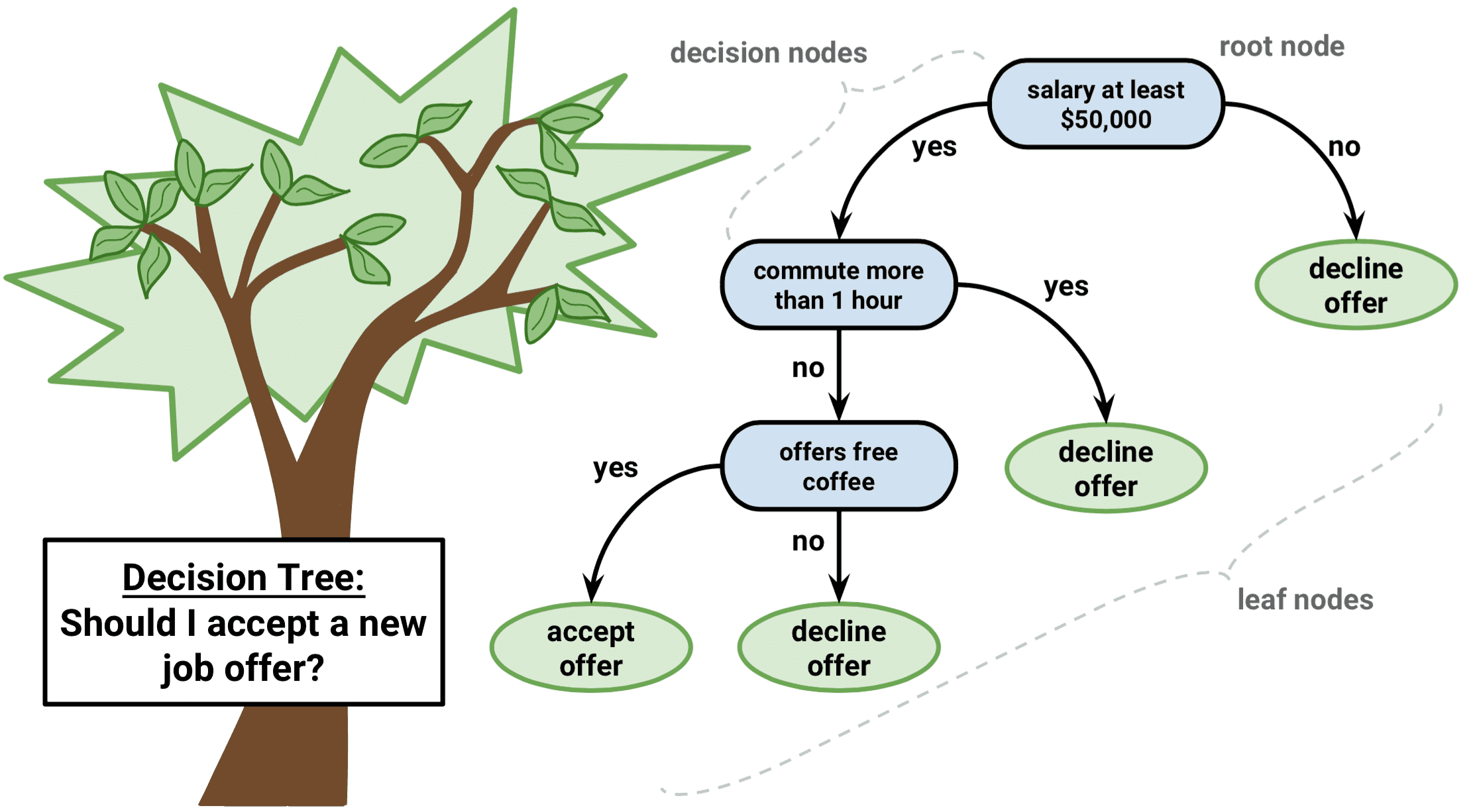

- A decision tree is a supervised learning technique that can be used for both classification and regression problems, although it is mostly preferred for solving classification problems. It is a tree-structured classifier in which internal nodes represent the features of a dataset, branches represent the decision rules, and each leaf node represents an outcome.

- There are two kinds of nodes: decision nodes and leaf nodes. A decision node is used to make a decision, while a leaf node is the output of those decisions.

- It is a graphical representation for getting all the possible solutions to a problem based on given conditions.

- It is called a decision tree because, like a tree, it starts with the root node, which expands into further branches and builds a tree-like structure.

- To build a tree, we use the CART algorithm, which stands for Classification and Regression Tree algorithm.
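To make the node and branch vocabulary concrete, here is a minimal sketch of a hand-written tree and the loop that walks it. The weather features and labels are hypothetical and not taken from this post; decision nodes are modelled as dictionaries and leaf nodes as plain strings, which is only one of many possible representations.

```python
# A hand-written toy tree (hypothetical weather data, for illustration only).
# Decision nodes are dicts that test one feature; leaf nodes are plain labels.
toy_tree = {
    "feature": "outlook",
    "branches": {
        "sunny": {
            "feature": "humidity",
            "branches": {"high": "stay in", "normal": "play"},
        },
        "overcast": "play",
        "rainy": "stay in",
    },
}


def predict(node, record):
    """Follow the branch matching the record's value until a leaf is reached."""
    while isinstance(node, dict):          # still at a decision node
        value = record[node["feature"]]    # look up the feature this node tests
        node = node["branches"][value]     # follow the matching branch
    return node                            # leaf node: the predicted outcome


print(predict(toy_tree, {"outlook": "sunny", "humidity": "normal"}))  # play
print(predict(toy_tree, {"outlook": "rainy", "humidity": "high"}))    # stay in
```

Each pass through the loop corresponds to one decision node, and the function returns as soon as it lands on a leaf.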

Why use Decision Trees?

There are many algorithms in machine learning, so choosing the best algorithm for the given dataset and problem is the main point to remember while creating a machine learning model. Below are two reasons for using a decision tree:

- Decision trees usually mimic the way humans think when making a decision, so they are easy to understand.

- The logic behind a decision tree is easy to follow because it is laid out as a tree-like structure.

Decision Tree Terminologies

- Root Node: The root node is where the decision tree starts. It represents the entire dataset, which then gets divided into two or more homogeneous sets.

- Leaf Node: Leaf nodes are the final output nodes.

- Splitting: Splitting is the process of dividing a decision node or the root node into sub-nodes.

- Branch/Sub Tree: A tree formed by splitting the tree.

- Pruning: Pruning is the process of removing unwanted branches from the tree.

- Parent/Child node: The root node of the tree is called the parent node, and the nodes that branch from it are called child nodes. More generally, any node that is split is the parent of the sub-nodes it produces.
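The terms above can be tied to a small data structure. The following is only a sketch: the `Node` class, the helper functions, and the shopping-style labels are all hypothetical names chosen for illustration, and the pruning shown here simply collapses a branch into a leaf rather than applying any particular pruning criterion.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    """One node of a tree; every name here is illustrative."""
    name: str
    label: Optional[str] = None                       # set only on leaf nodes
    children: List["Node"] = field(default_factory=list)

    def is_leaf(self) -> bool:
        return not self.children


def count_leaves(node: Node) -> int:
    """Count the leaf nodes in the sub-tree rooted at `node`."""
    if node.is_leaf():
        return 1
    return sum(count_leaves(child) for child in node.children)


def prune(node: Node, label: str) -> None:
    """Pruning: drop the branch below `node` and turn it into a leaf."""
    node.children = []
    node.label = label


# Splitting: the root (parent) is divided into two child nodes, and one
# child is split again, which forms a branch (sub-tree).
income = Node("income", children=[Node("low", label="skip"), Node("high", label="buy")])
older = Node("older", label="buy")
root = Node("age", children=[income, older])

print(count_leaves(root))   # 3
prune(income, label="buy")  # remove the unwanted branch below "income"
print(count_leaves(root))   # 2
```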

How Does the Decision Tree Algorithm Work?

In a decision tree, to predict the class of a given record, the algorithm starts from the root node of the tree. It compares the value of the root attribute with the corresponding attribute of the record (from the real dataset) and, based on the comparison, follows the branch and jumps to the next node.

At the next node, the algorithm again compares the attribute value with the sub-nodes and moves further. It continues this process until it reaches a leaf node of the tree. The complete process can be better understood using the algorithm below:

1. Begin the tree with the root node, say S, which contains the complete dataset.

2. Find the best attribute in the dataset using an Attribute Selection Measure (ASM).

3. Divide S into subsets that contain the possible values of the best attribute.

4. Generate the decision tree node that contains the best attribute.

5. Recursively build new decision trees using the subsets of the dataset created in step 3. Continue this process until a stage is reached where the nodes cannot be classified any further; call each such final node a leaf node.
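The steps above can be sketched in plain Python. This is a simplified illustration under stated assumptions, not a full CART implementation: it assumes categorical attributes, uses Gini impurity as the attribute selection measure, and creates one branch per attribute value, whereas CART proper makes binary splits. The function names and the tiny weather dataset are hypothetical.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 0.0 for a pure set, larger for more mixed sets."""
    total = len(labels)
    return 1.0 - sum((n / total) ** 2 for n in Counter(labels).values())


def best_attribute(rows, labels, attributes):
    """Step 2: pick the attribute whose split has the lowest weighted Gini."""
    def weighted_gini(attr):
        groups = {}
        for row, label in zip(rows, labels):
            groups.setdefault(row[attr], []).append(label)
        return sum(len(g) / len(labels) * gini(g) for g in groups.values())
    return min(attributes, key=weighted_gini)


def build_tree(rows, labels, attributes):
    """Steps 1-5: recursively grow a tree; leaves are plain class labels."""
    # Stop when the node is pure or no attributes remain: emit a leaf
    # holding the most common label.
    if len(set(labels)) == 1 or not attributes:
        return Counter(labels).most_common(1)[0][0]
    attr = best_attribute(rows, labels, attributes)        # step 2
    remaining = [a for a in attributes if a != attr]
    node = {"feature": attr, "branches": {}}               # step 4
    for value in sorted({row[attr] for row in rows}):      # step 3
        pairs = [(r, l) for r, l in zip(rows, labels) if r[attr] == value]
        sub_rows = [r for r, _ in pairs]
        sub_labels = [l for _, l in pairs]
        node["branches"][value] = build_tree(sub_rows, sub_labels, remaining)  # step 5
    return node


# Hypothetical toy dataset: "outlook" fully determines the label.
rows = [
    {"outlook": "sunny", "windy": "no"},
    {"outlook": "sunny", "windy": "yes"},
    {"outlook": "rainy", "windy": "no"},
    {"outlook": "rainy", "windy": "yes"},
]
labels = ["play", "play", "stay in", "stay in"]

tree = build_tree(rows, labels, ["outlook", "windy"])
print(tree)
# {'feature': 'outlook', 'branches': {'rainy': 'stay in', 'sunny': 'play'}}
```

Splitting on `outlook` yields two pure subsets (weighted Gini 0.0), while splitting on `windy` leaves both subsets evenly mixed (weighted Gini 0.5), so `outlook` is chosen at the root and both of its children become leaves.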

Advantages

- It is simple to understand because it follows the same process that a person follows when making any decision in real life.

- It is very useful for solving decision-related problems.

- It helps to think about all the possible outcomes of a problem.

- It requires less data cleaning than other algorithms.

Disadvantages

- A decision tree can contain many layers, which makes it complex.

- It may overfit the data, which can be mitigated by using the Random Forest algorithm.

- With more class labels, the computational complexity of the decision tree may increase.

Real-Life Example of a Decision Tree

- Selecting a flight to travel

Suppose you want to pick a flight for your next trip. How do we go about it? We first check whether a flight is available on that day. If it is not available, we look for another date; if it is available, we then look at, say, the duration of the flight. If we want only direct flights, we next check whether the price of that flight is within our pre-defined budget. If it is too expensive, we look at other flights; otherwise, we book it!

- Handling late-night cravings:
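The flight-selection walk-through above maps directly onto nested conditions, which is how a decision tree looks once it is written as code. The sketch below is illustrative only: the function name, its parameters, and the returned action strings are assumptions, and it treats a non-direct flight as a reason to keep looking.

```python
def choose_flight(available, is_direct, price, budget):
    """Walk the flight-selection questions in order and return an action."""
    if not available:            # root node: is there a flight on that day?
        return "look for another date"
    if not is_direct:            # next node: we only want direct flights
        return "look at other flights"
    if price > budget:           # last node: is the price within budget?
        return "look at other flights"
    return "book it"             # leaf node: every check passed


print(choose_flight(available=True, is_direct=True, price=300, budget=500))   # book it
print(choose_flight(available=False, is_direct=True, price=300, budget=500))  # look for another date
```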

Conclusion:

So, that’s all. This is an overview of the decision tree algorithm and its application in real-world scenarios. Next up is the Random Forest algorithm, which is an extension of the decision tree algorithm.

The post Introduction To Decision Tree Algorithm appeared first on Cloud Training Program.
