Welcome to our AWS GenAI/ML Mastery Bootcamp! In this post, we’ll cover everything you need to master AWS GenAI/ML, secure a high-paying job, and get certified (AIF-C01, MLA-C01, MLS-C01) in this dynamic field. Whether you’re starting with the basics or looking to deepen your expertise, we’ve got you covered with a comprehensive approach to AWS Generative AI and ML.

We’ll dive into the essentials of AI, ML & Gen AI and explore advanced topics through detailed hands-on labs and project work. Our step-by-step activity guides and real-world projects will provide you with the practical skills and knowledge needed to excel in interviews and advance your career.

Prepare to immerse yourself in hands-on learning experiences that will pave the way for your success in the AWS AI/ML domain. From foundational concepts to advanced techniques, this bootcamp will equip you with everything you need to thrive in this ever-evolving field. Let’s get started on this transformative journey together!

Table of Contents:

1. Hands-On Labs For AWS AI/ML

1.1 AWS for Beginners

- Lab 1: Create an AWS Free Trial Account

- Lab 2: CloudWatch – Create Billing Alarm & Service Limits

1.2 AWS AI & ML Services

- Lab 3: Exploring AWS AI Services with Amazon Comprehend, Translate, Transcribe, and Textract

- Lab 4: Enhancing Clinical Documentation with Amazon Comprehend Medical & Transcribe Medical

- Lab 5: Create, Deploy & Manage Amazon Q Business and Amazon Q Apps

- Lab 6: Analyze Insights in Text with Amazon Comprehend

- Lab 7: Setting Up Image Classification Labeling in SageMaker Ground Truth

- Lab 8: Setting Up Jupyter Notebook Environment in SageMaker Studio

- Lab 9: Create & Manage SageMaker Studio: Deploy & Test SageMaker JumpStart Foundation Models

- Lab 10: Build a Sample Chatbot Using Amazon Lex

- Lab 11: Build Lex Chatbot Using 3rd Party API

1.3 Gen AI, FM Applications & AWS Bedrock

- Lab 12: How To Request Access to Bedrock Foundation Models on AWS Account

- Lab 13: Generating Images in Bedrock with Amazon Titan Image Generator G1

- Lab 14: Crafting Prompts and Summarizing Text in Bedrock with Amazon Titan Text G1 Express & Anthropic Claude

- Lab 15: Invoke Bedrock Model for Text Generation Using Advanced Prompts Techniques: Zero-Shot, One-Shot, Few-Shot, and Chain of Thought

- Lab 16: Building a RAG-Enhanced Knowledge Management System With Amazon Bedrock Using Knowledge Bases

- Lab 17: Setting Up and Managing Guardrails with Amazon Bedrock Foundation Models

- Lab 18: Watermark Detection with Amazon Bedrock

1.4 Building GenAI Applications with Bedrock

- Lab 19: Requesting AWS Service Quota Increases For EC2 Instance

- Lab 20: Invoke Zero Shot Prompt for Text Generation in Bedrock Using SageMaker Studio

- Lab 21: Automating Python Code Generation with Amazon Bedrock’s Claude Model Using SageMaker

- Lab 22: Build a Bedrock Agent with Action Groups, Knowledge Bases, and Guardrails

- Lab 23: Text and Vector Embedding and Similarity Testing Using Amazon Titan in SageMaker Studio

- Lab 24: Exploring Transformers: Tokenization, Self-Attention, and Text Generation with BERT and GPT-2

- Lab 25: Perform Text Generation

- Lab 26: Perform Text Generation Using a Prompt That Includes Context

- Lab 27: Text Summarization with Small Files with Titan Text Premier

- Lab 28: Abstractive Text Summarization

- Lab 29: Use Amazon Bedrock for Question Answering

- Lab 30: Conversational Interface – Chat with Llama 3 and Titan Premier LLMs

- Lab 31: Invoke Bedrock Model for Code Generation

- Lab 32: Bedrock Model Integration with LangChain Agents

1.5 Data Ingestion

- Lab 33: Create S3 Bucket, Upload, Access Files, and Host a Website

- Lab 34: S3 Cross Region Replication

- Lab 35: S3 Lifecycle Management on S3 Bucket

- Lab 36: Creating and Subscribing to SNS Topics, Adding SNS Event for S3 Bucket

1.6 Data Integrity, Transformation, Feature Engineering

- Lab 37: Preparing Data for TF-IDF with PySpark and EMR

- Lab 38: Exploring AWS Glue DataBrew: Data Preprocessing Without Code

- Lab 39: Creating and Running AWS Glue ETL Jobs for Data Transformation

1.7 Exploratory Data Analysis

- Lab 40: Discover Sensitive Data Present in S3 Bucket Using Amazon Macie

- Lab 41: Streamlining Data Analysis with No-Code Tools: SageMaker Canvas and Data Wrangler

1.8 Model Training & Tuning

- Lab 42: Tuning, Deploying, and Predicting with TensorFlow on SageMaker

- Lab 43: Hyperparameter Optimization Using Amazon SageMaker Automatic Model Tuning (AMT)

- Lab 44: Data Preparation and Automated Model Training with SageMaker Data Wrangler & Autopilot

1.9 Model Deployment and Monitoring with SageMaker

- Lab 45: Build, Train, and Deploy Machine Learning Model With Amazon SageMaker

- Lab 46: Low-Code Building, Training, and Deploying Models with SageMaker AI Canvas

- Lab 47: Create ECR Docker Image Push Images To ECR

- Lab 48: Create EKS Cluster Managed Kubernetes on AWS

- Lab 49: Create and Update Stacks Using CloudFormation

- Lab 50: AWS Serverless Application Model (SAM) Build Serverless Apps

- Lab 51: Automate Start/Stop EC2 Instance Using Lambda

- Lab 52: Build & Deploy ML Using CodePipeline

1.11 Security, Compliance & Governance for AI Solutions

- Lab 53: Get Started with AWS X-Ray

- Lab 54: Enable CloudTrail and Store Logs in S3

- Lab 55: Setting Up AWS Config

1.12 Deep Learning and Advanced Modeling

- Lab 56: Train a Deep Learning Model with AWS Containers

- Project 1: Build a RAG Assistant

- Project 2: Build Advanced Crypto AI Agents on Amazon Bedrock

- Project 3: Image Semantic Segmentation using Amazon SageMaker

- Project 4: Predict University Admission with SageMaker Autopilot

- Project 5: Credit Card Fraud Detection Using Amazon SageMaker

- Project 6: Real-Time Stock Data Processing

- Project 7: Building an End-to-End ML Pipeline using SageMaker

- Project 8: Housing Price Prediction with SageMaker Autopilot

- Project 9: Predicting Customer Churn using ML Model

- Project 10: MLOps Pipeline in Amazon SageMaker

- AWS Certified Cloud Practitioner (CLF-C02)

- AWS AI Practitioner (AIF-C01)

- AWS Machine Learning Associate (MLA-C01)

- AWS Machine Learning Specialty (MLS-C01)

1. Hands-On Labs For AWS AI/ML

1.1 AWS for Beginners

Lab 1: Create an AWS Free Trial Account

Embark on your AWS journey by setting up a free trial account. This hands-on lab guides you through the initial steps of creating an AWS account, giving you access to a plethora of cloud services to experiment and build with.

Amazon Web Services (AWS) offers new subscribers a free trial account for 12 months so they can get hands-on experience with the services AWS provides. The account covers a number of different services that we can use, with some limitations, both for hands-on practice with AWS Cloud services and for regular business use.

With the AWS Free Tier account, each service has a usage limit up to which we are not charged. Here, we will look at how to register for an AWS Free Tier account.

Related Readings: How To Create AWS Free Tier Account

Lab 2: CloudWatch – Create Billing Alarm & Service Limits

Dive into CloudWatch, AWS’s monitoring service. This lab focuses on setting up billing alarms to manage costs effectively and keeping an eye on service limits to ensure your applications run smoothly within defined boundaries.

AWS billing notifications can be enabled using Amazon CloudWatch, the AWS service that monitors activity across your AWS account. In addition to billing notifications, CloudWatch provides infrastructure for monitoring applications, collecting logs and metrics, and gathering other service metadata, as well as detecting activity in your AWS account usage.

Amazon CloudWatch offers a number of metrics on which you can set alarms. For example, you may set an alarm to warn you when a running instance’s CPU or memory utilisation exceeds 90% or when the invoice amount exceeds $100. The AWS Free Tier account includes 10 alarms and 1,000 email notifications each month.
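As a rough illustration of the $100 billing alarm described above, the sketch below builds the arguments for CloudWatch's `put_metric_alarm` call. The alarm name and the SNS topic ARN are placeholders invented for this example, and the sketch assumes billing alerts have already been enabled in the account's billing preferences.

```python
def billing_alarm_params(threshold_usd, sns_topic_arn):
    """Build keyword arguments for CloudWatch put_metric_alarm (billing alarm)."""
    return {
        "AlarmName": f"billing-over-{threshold_usd:g}-usd",  # hypothetical name
        "Namespace": "AWS/Billing",
        "MetricName": "EstimatedCharges",
        "Dimensions": [{"Name": "Currency", "Value": "USD"}],
        "Statistic": "Maximum",
        "Period": 21600,  # evaluate over 6-hour windows
        "EvaluationPeriods": 1,
        "Threshold": float(threshold_usd),
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [sns_topic_arn],  # topic that sends the email
    }


def create_billing_alarm(cloudwatch, threshold_usd, sns_topic_arn):
    """Create the alarm with a boto3 CloudWatch client.

    Billing metrics are published in us-east-1, so the client would be
    created as: boto3.client("cloudwatch", region_name="us-east-1")
    """
    cloudwatch.put_metric_alarm(**billing_alarm_params(threshold_usd, sns_topic_arn))
```

Keeping the parameters in a separate function makes it easy to review the alarm definition before anything is sent to AWS.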

Related Readings: CloudWatch vs. CloudTrail: Comparison, Working & Benefits

1.2 AWS AI & ML Services

Lab 3: Exploring AWS AI Services with Amazon Comprehend, Translate, Transcribe, and Textract

Objective: Gain hands-on experience with AWS AI services, focusing on Amazon Comprehend, Translate, Transcribe, and Textract.

This lab guides you through analyzing text for insights, translating text between languages, transcribing audio to text, and extracting structured data from documents. You’ll start by setting up the necessary AWS environment and creating an S3 bucket for data storage. Then, you’ll explore each service, performing tasks such as text sentiment analysis, text translation, audio transcription, and data extraction from scanned documents.

By the end of this lab, you’ll have a comprehensive understanding of how to integrate these AI capabilities into your data workflows, enhancing automation and efficiency in your projects.
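To give a feel for two of the tasks in this lab, here is a minimal sketch of sentiment analysis with Amazon Comprehend followed by translation with Amazon Translate. The function names and the Spanish target language are assumptions made for illustration, and the clients are expected to be created with boto3 by the caller.

```python
def top_sentiment(response):
    """Return (label, score) for the highest-scoring sentiment.

    `response` is the dict returned by Comprehend's detect_sentiment call.
    """
    scores = response["SentimentScore"]
    label = max(scores, key=scores.get)
    return label.upper(), scores[label]


def analyze_and_translate(comprehend, translate, text, target_language="es"):
    """Detect sentiment for English text, then translate it.

    comprehend = boto3.client("comprehend")
    translate = boto3.client("translate")
    """
    sentiment = comprehend.detect_sentiment(Text=text, LanguageCode="en")
    translated = translate.translate_text(
        Text=text,
        SourceLanguageCode="en",
        TargetLanguageCode=target_language,
    )
    return top_sentiment(sentiment), translated["TranslatedText"]
```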

Related Readings: AWS AI, ML, and Generative AI Services and Tools

Lab 4: Enhancing Clinical Documentation with Amazon Comprehend Medical & Transcribe Medical

Objective: Gain hands-on experience with Amazon Comprehend Medical and Amazon Transcribe Medical for analyzing clinical text and transcribing medical audio.

This lab guides you through using Amazon Comprehend Medical to analyze clinical text for insights and Amazon Transcribe Medical to transcribe medical conversations into text. You’ll start by setting up the necessary AWS environment and creating S3 buckets for data storage. Then, you’ll explore each service to perform tasks such as clinical text analysis and medical transcription.

By the end of this lab, you’ll have a comprehensive understanding of how to integrate these AI capabilities into your data workflows, enhancing automation and efficiency in your clinical projects.

Related Readings: What is AWS Comprehend: Natural Language Processing in AWS

Lab 5: Create, Deploy & Manage Amazon Q Business and Amazon Q Apps

Objective: Gain hands-on experience with Amazon Q Business and Amazon Q Apps by creating, managing, and querying a knowledge base to optimize knowledge management.

This lab guides you through the process of integrating data sources like Amazon S3, configuring advanced retrieval models, and setting up secure user roles using the IAM Identity Center. You’ll also explore Amazon Q Apps to automate tasks and generate actionable insights, improving both internal operations and customer support.

By the end of this lab, you’ll have a comprehensive understanding of how to leverage Amazon Q Business and Amazon Q Apps to enhance knowledge management and operational efficiency.

Related Readings: Amazon Q: Boosting Productivity with Advanced AI Assistance

Lab 6: Analyze Insights in Text With Amazon Comprehend

Objective: To utilize Amazon Comprehend for analyzing customer feedback, performing sentiment analysis, and deriving actionable insights to enhance customer satisfaction and product offerings.
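One possible way to analyze a larger set of customer comments is Comprehend's batch API, sketched below. The helper names are invented for this example. The sketch splits the comments into groups of 25, the per-call document limit of `batch_detect_sentiment`, and then counts how many comments fall under each sentiment label.

```python
from collections import Counter

BATCH_LIMIT = 25  # batch_detect_sentiment accepts at most 25 documents per call


def chunk(items, size=BATCH_LIMIT):
    """Split a list into consecutive batches of at most `size` items."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def summarize_sentiments(result_lists):
    """Count sentiment labels across the ResultList of each batch response."""
    counts = Counter()
    for results in result_lists:
        for item in results:
            counts[item["Sentiment"]] += 1
    return dict(counts)


def analyze_feedback(comprehend, comments):
    """comprehend = boto3.client("comprehend"); comments is a list of strings."""
    result_lists = []
    for batch in chunk(comments):
        response = comprehend.batch_detect_sentiment(TextList=batch, LanguageCode="en")
        result_lists.append(response["ResultList"])
    return summarize_sentiments(result_lists)
```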

Related Readings: What is AWS Comprehend: Natural Language Processing in AWS

Lab 7: Setting Up Image Classification Labeling in SageMaker Ground Truth

Objective: Learn to create and label datasets for image classification using Amazon SageMaker Ground Truth.

This lab introduces you to Amazon SageMaker Ground Truth, a service that helps you build high-quality training datasets for machine learning. You will set up a labeling workflow for image classification tasks, creating custom labeling jobs and integrating human workers for labeling the dataset.

By the end of this lab, you’ll be proficient in using SageMaker Ground Truth to generate labeled datasets, essential for training accurate image classification models.

Lab 8: Setting Up Jupyter Notebook Environment in SageMaker Studio

Objective: Set up the Jupyter Notebook environment in SageMaker Studio for model development.

Overview:

This lab will guide you through setting up a Jupyter Notebook environment within SageMaker Studio. You’ll learn how to configure the environment, import necessary libraries, and prepare for building and testing machine learning models. By the end of this lab, you’ll be equipped to use Jupyter Notebooks for data exploration, model training, and evaluation.

Related Readings: Amazon SageMaker AI For Machine Learning: Overview & Capabilities

Lab 9: Create & Manage SageMaker Studio: Deploy & Test SageMaker JumpStart Foundation Models

Objective: Learn how to create and manage SageMaker Studio, utilize the SageMaker JumpStart Foundation Model Hub, and deploy and test pre-built machine learning models.

This lab provides practical experience in setting up a SageMaker Studio environment, deploying a model from SageMaker JumpStart, and performing inference tests to validate the model’s performance. You’ll explore the process of creating and managing your SageMaker Studio, ensuring that you can effectively deploy and test machine learning models.

By the end of this lab, you’ll have a solid understanding of how to utilize SageMaker Studio and SageMaker JumpStart to deploy and validate machine learning models.

Related Readings: Amazon SageMaker AI For Machine Learning: Overview & Capabilities

Lab 10: Build a Sample Chatbot Using Amazon Lex

Objective: To build a custom text-based chatbot using Amazon Lex that can understand and respond to user intents such as greetings and food orders.

This lab guides you through creating a bot, defining intents with sample utterances and responses, building and testing the chatbot, and finally cleaning up resources.

By the end, you will have hands-on experience designing conversational interfaces that improve customer interactions through automated dialogue handling.

Lab 11: Amazon Lex Chatbot Using 3rd Party API

Objective: To build a text-based chatbot using Amazon Lex integrated with a third-party REST API via AWS Lambda.

This lab guides you through creating a Lambda function to fetch real-time country information, configuring intents and slots in Amazon Lex, linking the Lambda function for fulfillment, building and testing the chatbot, and cleaning up resources.

By the end, you will have hands-on experience creating conversational AI that delivers dynamic, real-time responses by combining Lex’s natural language understanding with external data sources.
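A fulfillment function for this kind of bot might look roughly like the sketch below, which follows the Lex V2 event and response shape. The slot name `Country` and the `lookup_country` helper are hypothetical. In the lab the lookup would call the third-party REST API, while here it reads from a small in-memory sample so the handler can be tried without network access.

```python
def lookup_country(name):
    """Hypothetical stand-in for the third-party REST call made in the lab."""
    sample = {"france": {"capital": "Paris"}, "japan": {"capital": "Tokyo"}}
    return sample.get(name.lower())


def lambda_handler(event, context, lookup=lookup_country):
    """Fulfill a Lex V2 intent that asks for information about a country."""
    intent = event["sessionState"]["intent"]
    slot = (intent.get("slots") or {}).get("Country")  # assumed slot name
    country = slot["value"]["interpretedValue"] if slot else None
    info = lookup(country) if country else None

    if info:
        message = f"The capital of {country} is {info['capital']}."
        state = "Fulfilled"
    else:
        message = "Sorry, I could not find that country."
        state = "Failed"

    # Close the dialog and hand a plain-text message back to Lex.
    return {
        "sessionState": {
            "dialogAction": {"type": "Close"},
            "intent": {"name": intent["name"], "state": state},
        },
        "messages": [{"contentType": "PlainText", "content": message}],
    }
```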

1.3 Gen AI, FM Applications & AWS Bedrock

Lab 12: How To Request Access to Bedrock Foundation Models on AWS Account

Objective: Learn how to enable and manage access to Foundation Models in Amazon Bedrock.

This lab guides you through accessing and managing Foundation Models within Amazon Bedrock on your AWS account. You’ll navigate the Bedrock console, request access to models from leading AI providers, and ensure that all required permissions are correctly configured.

By the end of this lab, you’ll have successfully enabled and managed access to Foundation Models on Amazon Bedrock, setting the stage for deploying generative AI applications.

Related Readings: Amazon Bedrock Explained: A Comprehensive Guide to Generative AI

Lab 13: Generating Images in Bedrock with Amazon Titan Image Generator G1

Objective: Learn how to navigate the Image Playground in Amazon Bedrock, select a model, and generate images based on custom prompts.

This lab guides you through using Amazon Bedrock to access the Image Playground, where you’ll select a model and generate images using custom prompts. You’ll explore the range of foundation models available in Bedrock, focusing on running inference to create images for generative AI applications.

By the end of this lab, you’ll have successfully generated images using Amazon Titan Image Generator G1, gaining practical experience in applying generative AI techniques.
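The lab itself uses the console playground, but the same request can be made from code. The sketch below is one possible version using the Bedrock runtime `invoke_model` call. The output file name, image size, and configuration values are illustrative choices, not settings taken from the lab.

```python
import base64
import json

MODEL_ID = "amazon.titan-image-generator-v1"  # Titan Image Generator G1


def image_request_body(prompt, width=1024, height=1024, seed=0):
    """Build the JSON request body for a text-to-image task."""
    return json.dumps({
        "taskType": "TEXT_IMAGE",
        "textToImageParams": {"text": prompt},
        "imageGenerationConfig": {
            "numberOfImages": 1,
            "width": width,
            "height": height,
            "cfgScale": 8.0,  # how closely the image follows the prompt
            "seed": seed,
        },
    })


def generate_image(client, prompt, out_path="image.png"):
    """client = boto3.client("bedrock-runtime"); writes the first image to disk."""
    response = client.invoke_model(modelId=MODEL_ID, body=image_request_body(prompt))
    payload = json.loads(response["body"].read())
    with open(out_path, "wb") as handle:
        handle.write(base64.b64decode(payload["images"][0]))  # images are base64
    return out_path
```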

Related Readings: Amazon Titan: A Foundation Model by AWS

Lab 14: Crafting Prompts and Summarizing Text in Bedrock with Amazon Titan Text G1 Express & Anthropic Claude

Objective: Learn how to use Amazon Titan Text G1 Express and Anthropic Claude V2 to craft effective prompts and summarize text.

This lab guides you through utilizing Amazon Titan Text G1 Express and Anthropic Claude V2 within Amazon Bedrock to perform high-quality text generation and summarization tasks. You’ll explore how these advanced language models can enhance your ability to create precise prompts and generate concise summaries.

By the end of this lab, you’ll have developed the skills to effectively leverage these models for various text generation and summarization applications.

Related Readings: What is Prompt Engineering?

Lab 15: Invoke Bedrock Model for Text Generation Using Advanced Prompting Techniques

Objective: Gain hands-on experience with advanced prompting techniques in Amazon Bedrock for text generation, including Zero-Shot, One-Shot, Few-Shot, and Chain of Thought.

This lab guides you through using Amazon Bedrock to generate text with advanced prompt techniques. You’ll start by setting up the necessary AWS environment. Then, you’ll explore different approaches to prompting the model:

- Zero-Shot: Generate text without providing any prior example.

- One-Shot: Provide a single example to guide the model’s output.

- Few-Shot: Provide multiple examples in the prompt to guide the model toward better results.

- Chain of Thought: Guide the model through a series of logical steps to enhance reasoning and coherence.

By the end of this lab, you’ll have a comprehensive understanding of how to apply these advanced prompting techniques with Amazon Bedrock for more accurate, context-aware text generation, making your AI workflows more effective.
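The four prompting styles covered in this lab differ only in how the prompt text is assembled. The sketch below shows one simple way to build each of them as a plain string. The `Input:`/`Output:` layout and the function names are assumptions for illustration, not a format required by Amazon Bedrock.

```python
def zero_shot(task, text):
    """Instruction plus the new input, with no examples."""
    return f"{task}\n\nInput: {text}\nOutput:"


def few_shot(task, examples, text):
    """Instruction, several (input, output) examples, then the new input."""
    shots = "\n\n".join(f"Input: {i}\nOutput: {o}" for i, o in examples)
    return f"{task}\n\n{shots}\n\nInput: {text}\nOutput:"


def one_shot(task, example, text):
    """Same as few-shot, with exactly one example."""
    return few_shot(task, [example], text)


def chain_of_thought(task, text):
    """Ask the model to reason through intermediate steps before answering."""
    return (
        f"{task}\n\nInput: {text}\n"
        "Let's think step by step, then give the final answer."
    )
```

Any of these strings can then be sent to a Bedrock text model as the prompt.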

Lab 16: Building a RAG-Enhanced Knowledge Management System With Amazon Bedrock Using Knowledge Bases

Objective: Learn how to build and optimize a Knowledge Management System using Amazon Bedrock Knowledge Bases and Retrieval-Augmented Generation (RAG).

This lab provides hands-on experience with Amazon Bedrock Knowledge Bases and the integration of Retrieval-Augmented Generation (RAG) to enhance your knowledge management system. You’ll learn to build a robust knowledge base, integrate it with Amazon S3, and configure advanced embedding models for efficient information retrieval.

By the end of this lab, you’ll have created a scalable knowledge repository and enhanced it with RAG, improving the relevance and accuracy of responses.
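Once a knowledge base exists, it can be queried from code as well as from the console. The sketch below is a minimal example using the `retrieve_and_generate` call of the Bedrock agent runtime. The knowledge base ID and model ARN are values you would supply from your own account, and the helper names are invented here.

```python
def rag_request(question, knowledge_base_id, model_arn):
    """Build the arguments for a knowledge-base backed RetrieveAndGenerate call."""
    return {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,
            },
        },
    }


def ask_knowledge_base(client, question, knowledge_base_id, model_arn):
    """client = boto3.client("bedrock-agent-runtime")."""
    response = client.retrieve_and_generate(
        **rag_request(question, knowledge_base_id, model_arn)
    )
    answer = response["output"]["text"]
    # Collect where each cited passage came from (for example, an S3 URI).
    sources = [
        reference["location"]
        for citation in response.get("citations", [])
        for reference in citation.get("retrievedReferences", [])
    ]
    return answer, sources
```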

Related Readings: Understanding RAG with LangChain

Lab 17: Setting Up and Managing Guardrails with Amazon Bedrock Foundation Models

Objective: Learn how to set up and manage guardrails for Foundation Models in Amazon Bedrock.

This lab guides you through creating and managing guardrails in the Bedrock console and applying them to Foundation Models, so that prompts and model responses stay within the content policies you define.

By the end of this lab, you’ll have configured and tested guardrails on Amazon Bedrock, setting the stage for deploying safer generative AI applications.

Lab 18: Watermark Detection with Amazon Bedrock

Objective: Learn how to detect watermarks embedded in media files, specifically in images generated by the Titan Image Generator G1 model in Amazon Bedrock.

This lab guides you through the process of using Amazon Bedrock to detect watermarks in images created by the Titan Image Generator G1 model. You’ll explore watermarking as a critical technique for content authentication and rights management.

By the end of this lab, you’ll be able to detect watermarks in images generated by the Titan Image Generator G1 and understand how to integrate this functionality into your applications. Note that this capability is designed exclusively for images produced by the Titan Image Generator G1 and does not apply to images from other sources or models.

Related Readings: Amazon Bedrock Explained: A Comprehensive Guide to Generative AI

1.4 Building GenAI Applications with Bedrock

Lab 19: Requesting AWS Service Quota Increases for EC2 Instance

Objective: Gain hands-on experience in requesting a quota increase for the ml.g5.2xlarge instance type within Amazon SageMaker using the AWS Management Console.

This lab guides you through the process of requesting a service quota increase for the ml.g5.2xlarge instance type. Managing service quotas effectively is crucial for ensuring that your cloud infrastructure can scale to meet the needs of your applications and clients.

By the end of this lab, you’ll understand how to navigate the AWS Management Console to manage and request quota increases, ensuring that your infrastructure can support growing workloads.

Related Readings: Amazon SageMaker AI For Machine Learning: Overview & Capabilities

Lab 20: Invoke Zero-Shot Prompt for Text Generation in Bedrock using SageMaker Studio

Objective: Learn how to use Amazon Bedrock’s Titan model to generate text based on zero-shot prompts, specifically for crafting email responses to negative customer feedback.

In this lab, you’ll set up your AWS environment, launch SageMaker Studio, and create a JupyterLab workspace. You’ll then utilize the Titan model in Amazon Bedrock to generate text using zero-shot prompts, focusing on crafting effective email responses to negative customer feedback. This hands-on experience will equip you with the skills to leverage large language models (LLMs) for various text-generation tasks in real-world applications.

By the end of this lab, you’ll be proficient in using zero-shot prompts for text generation, enabling you to apply LLMs to a range of text-based tasks.
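A zero-shot request of this kind could be sketched as follows. The lab text only says "Titan model", so the choice of Titan Text G1 Express, the prompt wording, and the generation settings are assumptions made for this example.

```python
import json

MODEL_ID = "amazon.titan-text-express-v1"  # assumed Titan text model


def email_prompt(feedback):
    """Zero-shot prompt: an instruction and the feedback, with no examples."""
    return (
        "Write a polite email from customer support responding to this "
        f"negative customer feedback:\n{feedback}"
    )


def titan_body(prompt, max_tokens=512, temperature=0.0):
    """Build the JSON request body expected by Titan text models."""
    return json.dumps({
        "inputText": prompt,
        "textGenerationConfig": {
            "maxTokenCount": max_tokens,
            "temperature": temperature,
            "topP": 0.9,
            "stopSequences": [],
        },
    })


def generate_email(client, feedback):
    """client = boto3.client("bedrock-runtime")."""
    response = client.invoke_model(
        modelId=MODEL_ID,
        body=titan_body(email_prompt(feedback)),
        accept="application/json",
        contentType="application/json",
    )
    return json.loads(response["body"].read())["results"][0]["outputText"]
```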

Related Readings: Amazon Bedrock Explained: A Comprehensive Guide to Generative AI

Lab 21: Automating Python Code Generation with Amazon Bedrock’s Claude Model

Objective: Learn how to automate Python code generation using Amazon Bedrock’s Claude model within SageMaker Studio.

In this lab, you’ll set up your environment in AWS, configure Boto3, and launch SageMaker Studio to create a JupyterLab space. After making the necessary settings adjustments, you’ll use Amazon Bedrock’s Claude model to generate Python scripts for analyzing sales data from a CSV file. This hands-on experience will help you understand and utilize AI-driven code generation to streamline development processes and enhance efficiency.

By the end of this lab, you’ll have the skills to automate Python code generation and apply AI in data analysis tasks.
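As a rough sketch of the code-generation step, the example below sends a request in the Anthropic messages format used on Bedrock and pulls the first fenced code block out of the reply. The specific Claude model ID and the `extract_code` helper are assumptions for illustration, since the lab does not name a model version.

```python
import json

MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"  # assumed Claude model
FENCE = "`" * 3  # markdown code fence, built this way to avoid nesting fences


def claude_body(prompt, max_tokens=1024):
    """Build the request body in the Anthropic messages format for Bedrock."""
    return json.dumps({
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": [{"type": "text", "text": prompt}]}],
    })


def extract_code(reply):
    """Return the first fenced code block in a reply, or the whole reply."""
    parts = reply.split(FENCE)
    if len(parts) < 3:
        return reply.strip()
    block = parts[1]
    first_line, _, rest = block.partition("\n")
    # Drop an optional language tag such as "python" on the fence line.
    return rest.strip() if first_line.strip().isalpha() else block.strip()


def generate_code(client, task):
    """client = boto3.client("bedrock-runtime")."""
    response = client.invoke_model(modelId=MODEL_ID, body=claude_body(task))
    reply = json.loads(response["body"].read())["content"][0]["text"]
    return extract_code(reply)
```

Generated scripts should be reviewed before they are run against real sales data.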

Related Readings: Amazon Bedrock Explained: A Comprehensive Guide to Generative AI

Lab 22: Build a Bedrock Agent with Action Groups, Knowledge Bases, and Guardrails

Objective: To build and deploy an intelligent Amazon Bedrock agent that integrates domain-specific knowledge bases, real-time data retrieval via AWS Lambda, and guardrails to ensure secure, relevant, and compliant responses. This lab enables hands-on experience in creating AI agents capable of answering self-employment questions and providing live weather updates, combining large language model capabilities with real-time action execution and safety controls.

Lab 23: Text and Vector Embedding and Similarity Testing Using Amazon Titan in SageMaker Studio

Objective: Learn how to generate text embeddings and perform similarity testing using Amazon Titan within SageMaker Studio.

In this lab, you’ll set up your environment in AWS, launch SageMaker Studio, and create a JupyterLab space. After making the necessary settings adjustments, you’ll use Amazon Titan to generate text embeddings and perform similarity testing. This hands-on experience will help you build and utilize text embeddings to support sophisticated NLP applications.

By the end of this lab, you’ll have the skills to create and apply text embeddings in natural language processing tasks.
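Similarity testing between embeddings is commonly done with cosine similarity. The sketch below shows that calculation in plain Python, together with a minimal call to a Titan embeddings model. The choice of `amazon.titan-embed-text-v1` is an assumption, as the lab only refers to "Amazon Titan".

```python
import json
import math

MODEL_ID = "amazon.titan-embed-text-v1"  # assumed Titan embeddings model


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def embed(client, text):
    """client = boto3.client("bedrock-runtime"); returns a list of floats."""
    response = client.invoke_model(modelId=MODEL_ID, body=json.dumps({"inputText": text}))
    return json.loads(response["body"].read())["embedding"]


def similarity(client, first, second):
    """Embed two texts and compare them."""
    return cosine_similarity(embed(client, first), embed(client, second))
```

Texts with similar meaning should score closer to 1.0 than unrelated texts.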

Related Readings: Amazon Titan: A Foundation Model by AWS

Lab 24: Exploring Transformers with BERT and GPT-2

Objective: Learn how to use Transformer models like BERT and GPT-2 for text generation tasks.

This lab guides you through understanding key concepts such as tokenization and self-attention in Transformer models.

By the end of this lab, you will have a deep understanding of Transformer-based architectures for natural language processing.
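To make tokenization and self-attention more concrete, here is a small sketch in plain Python. It is a simplification written for illustration: it splits on whitespace, whereas BERT uses WordPiece and GPT-2 uses byte-level BPE, and it computes scaled dot-product attention for a single head without learned projection weights.

```python
import math


def tokenize(text):
    """Toy whitespace tokenizer (real models use subword tokenizers)."""
    return text.lower().split()


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]  # subtract max for stability
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs
```

When every key is identical, the weights are uniform and each output is simply the average of the value vectors.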

Lab 25: Perform Text Generation

Objective: Gain hands-on experience with Amazon Bedrock for basic text generation tasks.

This lab walks you through generating text using the foundational capabilities of Amazon Bedrock. You’ll set up your AWS environment, create the necessary tools, and start using the model to generate text for a variety of scenarios.

Lab 26: Perform Text Generation Using a Prompt That Includes Context

Objective: Learn how to provide context within prompts to enhance text generation.

In this lab, you will explore how including relevant context in your prompts can improve the quality and relevance of the generated text. By leveraging Amazon Bedrock’s contextual generation capabilities, you’ll understand the importance of prompt engineering.

Lab 27: Text Summarization with Small Files Using Titan Text Premier

Objective: Learn how to use Titan Text Premier for summarizing small text files.

This lab introduces you to Titan Text Premier, where you will explore its ability to summarize small text files, making it easier to condense large amounts of information into concise summaries. You’ll practice applying this technique to different types of documents.

Lab 28: Abstractive Text Summarization

Objective: Master abstractive text summarization techniques.

In this lab, you’ll dive into abstractive summarization, where the model writes a condensed version of a document in its own words while preserving the meaning. This lab covers how to use advanced models for summarization.

Lab 29: Use Amazon Bedrock for Question Answering

Objective: Implement Amazon Bedrock’s question-answering capabilities.

In this lab, you’ll work with Amazon Bedrock’s question-answering model to answer specific questions based on a given dataset. You’ll learn how to frame questions and manage the data flow to get accurate, relevant answers from the model.

Lab 30: Conversational Interface – Chat with Llama 3 and Titan Premier LLMs

Objective: Explore conversational interfaces with Llama 3 and Titan Premier LLMs.

This lab focuses on building conversational interfaces using Amazon Bedrock’s powerful language models. You’ll experiment with Llama 3 and Titan Premier to create engaging chat interactions, focusing on understanding how these models handle natural language processing for dynamic conversations.
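A chat loop mainly comes down to resending the growing message history on every turn. The sketch below does this with the Bedrock Converse API. The two model IDs are assumptions about which Llama 3 and Titan Premier variants are in use, and `EchoClient` is a hypothetical offline stand-in that lets the flow be tried without AWS access.

```python
LLAMA_MODEL_ID = "meta.llama3-8b-instruct-v1:0"    # assumed Llama 3 variant
TITAN_MODEL_ID = "amazon.titan-text-premier-v1:0"  # assumed Titan Premier ID


def user_message(text):
    """Wrap user text in the message shape used by the Converse API."""
    return {"role": "user", "content": [{"text": text}]}


def chat_turn(client, model_id, history, user_text):
    """Send the full history plus the new message; return (new_history, reply_text).

    client = boto3.client("bedrock-runtime")
    """
    messages = history + [user_message(user_text)]
    response = client.converse(
        modelId=model_id,
        messages=messages,
        inferenceConfig={"maxTokens": 512, "temperature": 0.5},
    )
    reply = response["output"]["message"]
    return messages + [reply], reply["content"][0]["text"]


class EchoClient:
    """Hypothetical offline stand-in that mimics the Converse response shape."""

    def converse(self, modelId, messages, inferenceConfig=None):
        last = messages[-1]["content"][0]["text"]
        return {"output": {"message": {
            "role": "assistant",
            "content": [{"text": f"You said: {last}"}],
        }}}
```

Because the same call works for both models, switching between Llama 3 and Titan Premier only requires changing the model ID.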

Lab 31: Invoke Bedrock Model for Code Generation

Objective: Learn how to use Amazon Bedrock for generating code.

In this lab, you’ll explore how to use Amazon Bedrock to generate code based on natural language descriptions. You will set up the environment, define your code-generation tasks, and learn how to refine the output for accuracy and performance.

Lab 32: Bedrock Model Integration with LangChain Agents

Objective: Integrate Amazon Bedrock with LangChain agents for enhanced functionality.

This lab walks you through the integration of Amazon Bedrock with LangChain agents, allowing you to expand the model’s capabilities. By the end of the lab, you’ll be able to connect the power of Bedrock with LangChain to create sophisticated AI-driven workflows.

1.5 Data Ingestion

Lab 33: Create S3 Bucket, Upload, Access Files, and Host a Website

Objective: Learn how to create an S3 bucket, upload files, and host a static website.

In this lab, you will gain hands-on experience with Amazon S3 by creating a bucket, uploading files, and setting up a static website. You will configure the necessary access permissions and learn how to serve content directly from S3, providing a cost-effective solution for hosting websites.

Related Readings: AWS S3 Bucket | Amazon Simple Storage Service Bucket

Lab 34: S3 Cross Region Replication

Objective: Learn how to replicate your S3 bucket across different AWS regions to ensure data availability and redundancy.

In this lab, you will configure cross-region replication for your S3 bucket, enabling automatic duplication of files to another AWS region. You will explore setting up versioning, configuring the replication rule, and verifying the replication process. This ensures data availability in the event of a regional failure, providing enhanced durability and backup.

By completing this lab, you will be proficient in setting up cross-region replication, a critical skill for ensuring the durability of your data.
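The replication setup from this lab can also be expressed in code. The sketch below enables versioning on both buckets and then applies a single rule that replicates every object. The rule ID is made up for this example, and the IAM role ARN is assumed to belong to a role that S3 is allowed to use for replication.

```python
def replication_config(role_arn, destination_bucket):
    """Build a replication configuration with one rule covering all objects."""
    return {
        "Role": role_arn,
        "Rules": [{
            "ID": "replicate-all",  # hypothetical rule name
            "Priority": 1,
            "Status": "Enabled",
            "Filter": {},  # empty filter applies the rule to every object
            "DeleteMarkerReplication": {"Status": "Disabled"},
            "Destination": {"Bucket": f"arn:aws:s3:::{destination_bucket}"},
        }],
    }


def enable_replication(source_s3, destination_s3, source_bucket, destination_bucket, role_arn):
    """Each argument ending in _s3 is a boto3 S3 client for that bucket's region."""
    # Replication requires versioning on both the source and the destination.
    for client, bucket in ((source_s3, source_bucket), (destination_s3, destination_bucket)):
        client.put_bucket_versioning(
            Bucket=bucket, VersioningConfiguration={"Status": "Enabled"}
        )
    source_s3.put_bucket_replication(
        Bucket=source_bucket,
        ReplicationConfiguration=replication_config(role_arn, destination_bucket),
    )
```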

Lab 35: S3 Lifecycle Management on S3 Bucket

Objective: Understand how to manage the data lifecycle in S3 by creating policies to automatically transition data to different storage classes and manage its retention.

In this lab, you will learn to create lifecycle policies in S3, which help automate the transition of data between storage classes like Standard, Infrequent Access, and Glacier. You’ll also explore setting up policies for data expiration and deletion, ensuring optimal data management and cost savings. By configuring these policies, you will be able to optimize storage costs based on the data’s usage patterns.

This lab helps you reduce costs by applying lifecycle policies that automate data transition between different S3 storage classes.
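A lifecycle policy like the one described here might be sketched as follows. The day counts (30, 90, and 365) and the rule ID are illustrative defaults rather than values taken from the lab.

```python
def lifecycle_rules(ia_after_days=30, glacier_after_days=90, expire_after_days=365):
    """Build a lifecycle configuration: Standard -> Standard-IA -> Glacier -> delete."""
    if not ia_after_days < glacier_after_days < expire_after_days:
        raise ValueError("days must increase: IA < Glacier < expiration")
    return {
        "Rules": [{
            "ID": "tier-then-expire",  # hypothetical rule name
            "Status": "Enabled",
            "Filter": {"Prefix": ""},  # empty prefix applies to the whole bucket
            "Transitions": [
                {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
                {"Days": glacier_after_days, "StorageClass": "GLACIER"},
            ],
            "Expiration": {"Days": expire_after_days},
        }]
    }


def apply_lifecycle(s3, bucket, **days):
    """s3 = boto3.client("s3"); days are passed through to lifecycle_rules."""
    s3.put_bucket_lifecycle_configuration(
        Bucket=bucket, LifecycleConfiguration=lifecycle_rules(**days)
    )
```

The ordering check catches a common mistake, such as scheduling expiration before the Glacier transition.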

Lab 36: Creating and Subscribing to SNS Topics, Adding SNS Event for S3 Bucket

Objective: Learn to create and subscribe to SNS topics and integrate SNS events with S3 buckets.

In this lab, you’ll work with Amazon Simple Notification Service (SNS) to create topics and subscribe endpoints. You will then configure an SNS event to trigger notifications from S3, allowing you to automate event-driven workflows based on changes in your S3 bucket, such as file uploads or deletions.
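The steps in this lab can be sketched in code as shown below: create a topic, subscribe an email address, and point the bucket's event notifications at the topic. The function names are invented for this example. The sketch also assumes the topic's access policy already allows Amazon S3 to publish to it, which S3 checks when the notification is saved.

```python
def topic_notification_config(topic_arn):
    """Notify the topic whenever objects are created or removed."""
    return {
        "TopicConfigurations": [{
            "TopicArn": topic_arn,
            "Events": ["s3:ObjectCreated:*", "s3:ObjectRemoved:*"],
        }]
    }


def wire_bucket_to_email(sns, s3, bucket, topic_name, email):
    """sns = boto3.client("sns"); s3 = boto3.client("s3")."""
    topic_arn = sns.create_topic(Name=topic_name)["TopicArn"]
    # The recipient must confirm the subscription from the email they receive.
    sns.subscribe(TopicArn=topic_arn, Protocol="email", Endpoint=email)
    s3.put_bucket_notification_configuration(
        Bucket=bucket,
        NotificationConfiguration=topic_notification_config(topic_arn),
    )
    return topic_arn
```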

1.6 Data Integrity, Transformation, Feature Engineering

Lab 37: Preparing Data for TF-IDF with Jupyter SageMaker Studio

Objective: Learn how to prepare data for machine learning tasks.

This lab guides you through using Spark to process and transform data for TF-IDF feature engineering.

By the end of this lab, you will be able to process large datasets efficiently for advanced machine-learning applications.
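To show what the TF-IDF feature engineering step computes, here is a small sketch in plain Python rather than Spark. It uses raw term counts for TF and the smoothed IDF formula log((N + 1) / (df + 1)), which is the form Spark MLlib's `IDF` applies. The whitespace tokenization is a simplification for this example.

```python
import math
from collections import Counter


def tf_idf(documents):
    """Return one {term: weight} dict per document.

    TF is the raw count of the term in the document.
    IDF is log((N + 1) / (df + 1)), where N is the number of documents
    and df is the number of documents containing the term.
    """
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    idf = {term: math.log((n_docs + 1) / (df + 1)) for term, df in doc_freq.items()}
    return [
        {term: count * idf[term] for term, count in Counter(tokens).items()}
        for tokens in tokenized
    ]
```

A term that appears in every document gets a weight of zero, while rarer terms receive higher weights.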

Lab 38: Exploring AWS Glue DataBrew: Data Preprocessing Without Code

Objective: Learn how to use AWS Glue DataBrew to transform and clean datasets visually.

This lab guides you through using DataBrew to clean, normalize, and transform data without writing any code.

By the end of this lab, you will be able to automate data preparation tasks using DataBrew for improved data quality.

Related Readings: AWS Glue: Overview, Features, Architecture, Use Cases & Pricing

Lab 39: Creating and Running AWS Glue ETL Jobs for Data Transformation

Objective: To create and run AWS Glue ETL jobs to automate the Extract, Transform, and Load (ETL) process, enabling efficient data transformation and seamless storage for analytics and reporting.

This lab guides you through automating ETL processes using AWS Glue. Step by step, you’ll create a destination S3 bucket for transformed data, set up an ETL job in AWS Glue, transform data with schema adjustments and type conversions, and load the transformed data into the destination bucket. This hands-on approach simplifies data preparation for analytics and reporting while also improving efficiency and scalability.

By the end of this lab, you’ll have a clear understanding of how to implement and monitor ETL workflows using AWS Glue, empowering you to handle large datasets with minimal manual intervention and prepare data effectively for business insights.

Related Readings: AWS Glue: Overview, Features, Architecture, Use Cases & Pricing

1.7 Exploratory Data Analysis

Lab 40: Discover Sensitive Data Present in S3 Bucket Using Amazon Macie

Objective: To learn how to use Amazon Macie to automatically discover and protect sensitive data stored in Amazon S3.

The lab covers creating and managing S3 buckets, enabling Macie, configuring and running Macie jobs to scan for sensitive information such as personally identifiable information (PII), reviewing findings, and cleaning up resources to stay within AWS Free Tier limits. It helps enhance data security and compliance by automating sensitive data discovery at scale.

Related Readings: AWS Macie

Lab 41: Streamlining Data Analysis with No-Code Tools: SageMaker Canvas and Data Wrangler

Objective: Gain hands-on experience with AWS SageMaker Canvas and Data Wrangler for simplified, no-code data analysis.

In this lab, you will explore how AWS SageMaker Canvas and Data Wrangler provide powerful no-code solutions for data analysis and machine learning. You’ll start by using SageMaker Canvas to perform visual data analysis and build machine learning models without writing code. Next, you’ll dive into SageMaker Data Wrangler to clean, transform, and prepare datasets for analysis with an intuitive, drag-and-drop interface.

By the end of this lab, you’ll have a strong understanding of how to streamline your data preparation and model-building workflows using no-code tools, enabling faster insights and decisions without needing deep technical expertise in coding.

Related Readings: Amazon SageMaker AI for Machine Learning: Overview & Capabilities

1.8 Model Training & Tuning

Lab 42: Tuning, Deploying, and Predicting with TensorFlow on SageMaker

Objective: To leverage Amazon SageMaker’s fully managed machine learning environment to build, train, and deploy a deep learning model based on TensorFlow’s Convolutional Neural Network (CNN) architecture.

This model will classify product images with high accuracy to improve inventory management and search functionality for Ubisoft’s e-commerce platform. The process includes preparing the dataset, tuning model hyperparameters for optimal performance, scaling training using GPU instances, and deploying the model for real-time predictions. Ultimately, this solution aims to enhance operational efficiency and user experience by providing fast and reliable image classification.

Lab 43: Hyperparameter Optimization Using Amazon SageMaker Automatic Model Tuning (AMT)

Objective: Learn how to optimize hyperparameters using Amazon SageMaker Automatic Model Tuning (AMT).

In this lab, you’ll explore the process of hyperparameter optimization with Amazon SageMaker’s Automatic Model Tuning. You will configure AMT to automatically search for the best hyperparameters for your machine learning models, improving their performance. You’ll also understand how to set up tuning jobs and evaluate the results to fine-tune models effectively, enhancing model accuracy and efficiency.
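The idea behind a tuning job can be sketched locally. The example below is not SageMaker code: it runs a plain random search, one of the strategies a tuner can use, over a made-up objective. The `toy_validation_loss` function, the parameter ranges, and the trial count are all hypothetical stand-ins for a real training job and its validation metric.

```python
import random


def toy_validation_loss(learning_rate: float, batch_size: int) -> float:
    """Hypothetical stand-in for a training job's metric (lower is better)."""
    return (learning_rate - 0.1) ** 2 + ((batch_size - 64) / 64) ** 2


def random_search(n_trials: int, seed: int = 0) -> dict:
    """Sample hyperparameters at random and keep the best trial."""
    rng = random.Random(seed)  # seeded so repeated runs give the same trials
    best = None
    for _ in range(n_trials):
        params = {
            "learning_rate": rng.uniform(0.001, 0.5),    # continuous range
            "batch_size": rng.choice([16, 32, 64, 128]),  # categorical choices
        }
        loss = toy_validation_loss(**params)
        if best is None or loss < best["loss"]:
            best = {"params": params, "loss": loss}
    return best


best_trial = random_search(n_trials=50)
```

A managed tuner follows the same loop in spirit: define ranges, launch trials, compare an objective metric, and keep the best configuration, but each trial is a full training job rather than a function call.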

Lab 44: Data Preparation and Automated Model Training with SageMaker Data Wrangler & Autopilot

Objective: Streamline data preparation and automate model training using SageMaker Data Wrangler and Autopilot.

In this lab, you will focus on using SageMaker Data Wrangler to clean and transform datasets for training. You’ll then move on to SageMaker Autopilot, which automates the model-building process, including model selection, hyperparameter tuning, and deployment.

By the end of this lab, you’ll gain a solid understanding of how to automate and accelerate the data preparation and model training phases, making machine learning workflows faster and more efficient.

1.9 Model Deployment and Monitoring with SageMaker

Lab 45: Build, Train, and Deploy Machine Learning Model With Amazon SageMaker

Objective: Learn how to build, train, and deploy machine learning models using Amazon SageMaker.

In this lab, you will gain hands-on experience with the entire machine learning lifecycle in Amazon SageMaker. You’ll start by building and preparing a dataset for training, then proceed to train your model using SageMaker’s built-in algorithms or custom models.

Finally, you’ll deploy the trained model to SageMaker hosting endpoints for real-time predictions, and learn how to monitor and evaluate the model’s performance.

Lab 46: Low-Code Building, Training, and Deploying Models with SageMaker AI Canvas

Objective: Use SageMaker AI Canvas to build, train, and deploy machine learning models with minimal coding.

This lab focuses on SageMaker AI Canvas, a low-code platform that enables you to build and train machine learning models without requiring extensive coding experience. You’ll learn how to import data, select the appropriate model architecture, train the model, and deploy it for predictions—all through a user-friendly, visual interface.

By the end of this lab, you’ll have a solid understanding of how to leverage SageMaker AI Canvas to build end-to-end machine learning solutions with minimal code.

1.10 MLOps On AWS

Lab 47: Create ECR, Install Docker, Create Image, and Push Image To ECR

Objective: In this lab, you will explore the fundamental concepts of Docker and its role in containerization. You will be guided through the process of installing Docker on your local machine, creating and managing containers, and understanding Docker images and how they support containerized applications.

By the end of this lab, you will have a solid understanding of Docker, its installation process, and how to manage containerized applications effectively.

Related Readings: How to Install Docker on Ubuntu: Step-By-Step Guide

Lab 48: Amazon EKS: How to Create a Cluster

Objective: This lab focuses on Amazon Elastic Kubernetes Service (EKS), a managed service that makes it easy to run Kubernetes on AWS.

You will be guided through the process of creating an EKS cluster, deploying applications to the cluster, and scaling them as needed.

By the end of this lab, you will understand how to create and manage EKS clusters and how to deploy containerized applications on them.

Related Readings: Amazon EKS (Elastic Kubernetes Service): Everything You Should Know

Lab 49: Create and Update Stacks Using CloudFormation

Objective: Learn how to create and manage infrastructure using AWS CloudFormation.

In this lab, you’ll dive into AWS CloudFormation, where you will create, update, and manage infrastructure as code. You’ll understand how to write CloudFormation templates, automate the deployment of resources, and track changes to your infrastructure.

This will help you efficiently manage cloud resources at scale using declarative configurations.
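As a minimal sketch of infrastructure as code, the example below builds a small CloudFormation template as a Python dictionary and shows one way to create or update a stack from it. The stack contents (a single versioned S3 bucket), the logical ID `DemoBucket`, and the helper names are assumptions for illustration, not the lab's actual template. The boto3 function is defined but not executed here, since it needs AWS credentials.

```python
import json


def build_template(bucket_logical_id: str = "DemoBucket") -> dict:
    """Declarative description of a stack with one versioned S3 bucket."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Minimal stack with one versioned S3 bucket",
        "Resources": {
            bucket_logical_id: {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    "VersioningConfiguration": {"Status": "Enabled"}
                },
            }
        },
        "Outputs": {
            # Ref on a bucket resource resolves to the generated bucket name.
            "BucketName": {"Value": {"Ref": bucket_logical_id}}
        },
    }


def create_or_update(stack_name: str, template: dict) -> None:
    """Create the stack, or update it if it already exists (not run here)."""
    import boto3  # imported lazily so build_template works without it

    cfn = boto3.client("cloudformation")
    body = json.dumps(template)
    try:
        cfn.create_stack(StackName=stack_name, TemplateBody=body)
    except cfn.exceptions.AlreadyExistsException:
        # update_stack raises an error if the template has not changed.
        cfn.update_stack(StackName=stack_name, TemplateBody=body)


template = build_template()
```

Because the template is just data, changing a property and re-running the update is how a change to the infrastructure is expressed and tracked.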

Related Readings: AWS CloudFormation: Benefits, Working, and Uses

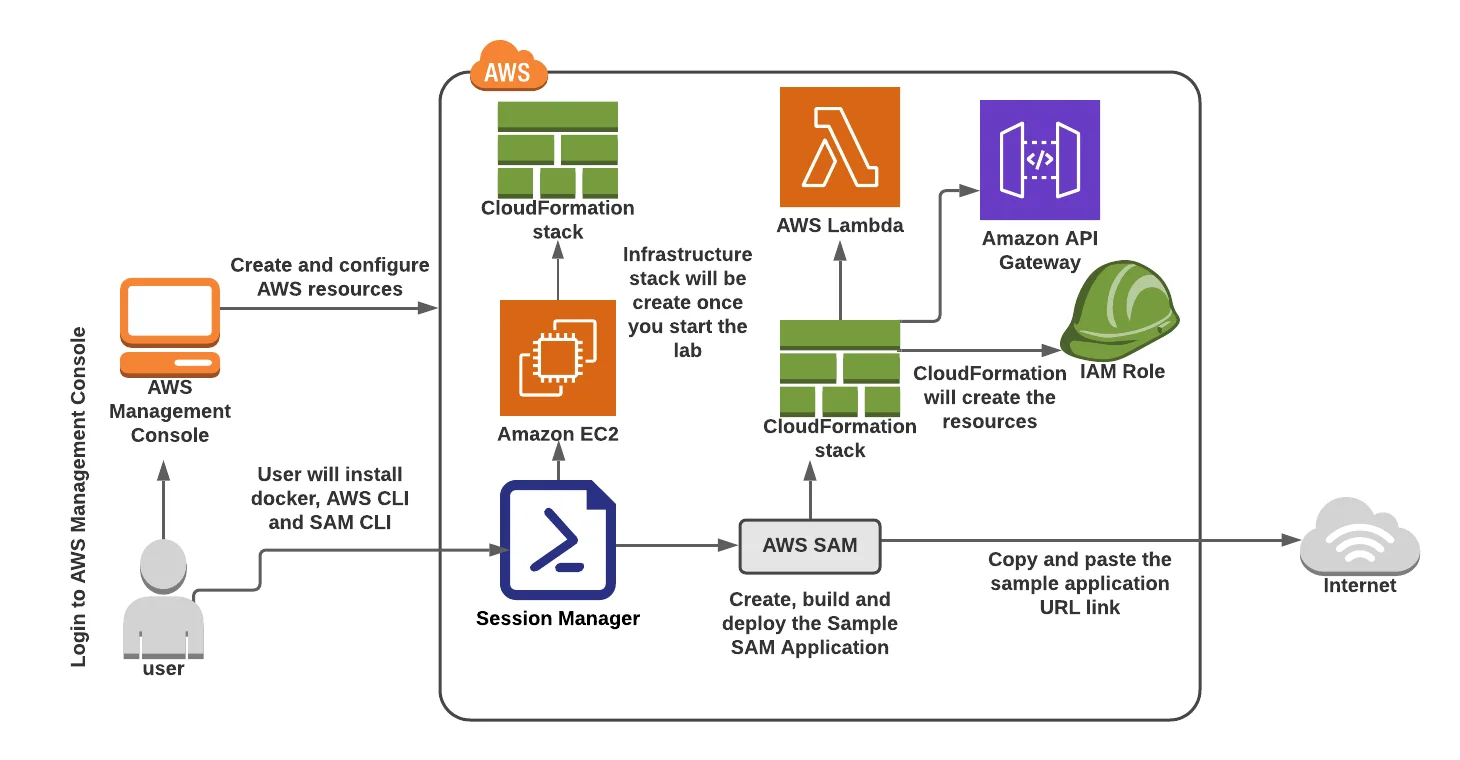

Lab 50: AWS Serverless Application Model (SAM) to Build Serverless Applications

Objective: Build serverless applications using AWS Serverless Application Model (SAM).

This lab introduces you to AWS SAM, a framework for building serverless applications on AWS. You will learn how to use SAM to develop and deploy serverless applications using services such as AWS Lambda, API Gateway, and DynamoDB.

By the end of the lab, you’ll understand how to set up, test, and deploy serverless applications with minimal infrastructure management.

Lab 51: Automate Start/Stop EC2 Instance Using Lambda

Objective: Automate the start and stop of EC2 instances using AWS Lambda.

In this lab, you’ll automate the process of starting and stopping EC2 instances using AWS Lambda functions. You will learn how to create Lambda functions that are triggered based on a schedule, enabling you to control EC2 instance uptime and save on costs.

This is a valuable technique for optimizing AWS resource management.
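A handler for this kind of automation might look like the sketch below. The event shape (`action` and `instance_ids` keys) is an assumption made for this example; in practice it would be whatever payload your schedule rule is configured to send. The optional `ec2_client` argument is also an addition for illustration, so the function can be exercised with a stand-in client instead of a real AWS account.

```python
def lambda_handler(event, context, ec2_client=None):
    """Start or stop the EC2 instances named in the event."""
    action = event.get("action")
    instance_ids = event.get("instance_ids", [])

    if action not in ("start", "stop"):
        raise ValueError(f"Unsupported action: {action!r}")
    if not instance_ids:
        # Nothing to do; avoid calling the API with an empty list.
        return {"action": action, "instance_ids": []}

    if ec2_client is None:
        import boto3  # available by default in the Lambda Python runtime

        ec2_client = boto3.client("ec2")

    if action == "start":
        ec2_client.start_instances(InstanceIds=instance_ids)
    else:
        ec2_client.stop_instances(InstanceIds=instance_ids)

    return {"action": action, "instance_ids": instance_ids}
```

With two scheduled rules, one sending `{"action": "start", ...}` in the morning and one sending `{"action": "stop", ...}` in the evening, the same function covers both directions. The function's execution role would also need permission to start and stop the instances.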

Lab 52: Build & Deploy Machine Learning Models Using CodePipeline

Objective: Automate the deployment of machine learning models using AWS CodePipeline.

In this lab, you’ll learn how to integrate AWS CodePipeline with machine learning workflows. You will set up an automated pipeline to build, test, and deploy machine learning models.

The pipeline will automate tasks like data preparation, model training, and deployment, providing a streamlined CI/CD approach for machine learning projects.

1.11 Security, Compliance & Governance for AI Solutions

Lab 53: Get Started with AWS X-Ray

Objective: Learn how to trace and monitor applications using AWS X-Ray.

This lab guides you through configuring AWS X-Ray to trace requests, analyze performance, and troubleshoot issues in distributed applications.

By the end of this lab, you will be able to use AWS X-Ray for detailed monitoring and analysis of your application performance.

Related Readings: AWS X-Ray Overview, Features and Benefits

Lab 54: Enable CloudTrail and Store Logs in S3

Objective: Learn how to enable AWS CloudTrail and store logs in an S3 bucket for auditing and compliance.

This lab guides you through configuring CloudTrail to log API activity and store logs securely in S3.

By the end of this lab, you will be able to audit and track API calls across your AWS account and store logs in S3 for long-term retention.
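One detail that often trips people up is that the S3 bucket needs a policy allowing CloudTrail to write to it. The sketch below builds such a policy and shows the boto3 calls that could attach it and start a trail. The helper names, bucket name, and account ID are placeholders, and the lab may perform these steps through the console, where the policy can be generated for you. The function that calls AWS is defined but not run here.

```python
import json


def cloudtrail_bucket_policy(bucket_name: str, account_id: str) -> dict:
    """Bucket policy letting CloudTrail check the ACL and deliver log files."""
    service = {"Service": "cloudtrail.amazonaws.com"}
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AWSCloudTrailAclCheck",
                "Effect": "Allow",
                "Principal": service,
                "Action": "s3:GetBucketAcl",
                "Resource": f"arn:aws:s3:::{bucket_name}",
            },
            {
                "Sid": "AWSCloudTrailWrite",
                "Effect": "Allow",
                "Principal": service,
                "Action": "s3:PutObject",
                # CloudTrail writes under AWSLogs/<account-id>/ by default.
                "Resource": f"arn:aws:s3:::{bucket_name}/AWSLogs/{account_id}/*",
                "Condition": {
                    "StringEquals": {"s3:x-amz-acl": "bucket-owner-full-control"}
                },
            },
        ],
    }


def enable_trail(trail_name: str, bucket_name: str, account_id: str) -> None:
    """Attach the policy, create the trail, and start logging (not run here)."""
    import boto3  # imported lazily so the policy builder works without it

    s3 = boto3.client("s3")
    cloudtrail = boto3.client("cloudtrail")
    policy = cloudtrail_bucket_policy(bucket_name, account_id)
    s3.put_bucket_policy(Bucket=bucket_name, Policy=json.dumps(policy))
    cloudtrail.create_trail(Name=trail_name, S3BucketName=bucket_name)
    cloudtrail.start_logging(Name=trail_name)


policy = cloudtrail_bucket_policy("demo-trail-logs", "123456789012")
```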

Related Readings: CloudWatch vs. CloudTrail: Comparison, Working & Benefits

Lab 55: Setting Up AWS Config

Objective: Learn how to set up AWS Config to track configuration changes and ensure compliance across your AWS resources.

This lab guides you through configuring AWS Config to monitor and record changes in resource configurations.

By the end of this lab, you will be able to use AWS Config to maintain compliance and monitor the state of your AWS resources over time.

Related Readings: AWS Config: Overview, Benefits, and How to Get Started?

1.12 Deep Learning and Advanced Modeling

Lab 56: Train a Deep Learning Model with AWS Containers

Objective: To set up a secure and optimized AWS environment using AWS Deep Learning Containers on an EC2 instance, enabling the training of a TensorFlow deep learning model.

This involves creating AWS access keys, launching a deep learning instance, configuring secure access via PuTTY, pulling Docker images from Amazon ECR, and running a deep learning training job efficiently while managing resource security and cost.

2. Real-time Projects

Project 1. Predict Uni Admission with SageMaker Autopilot

This project aims to predict the likelihood of university admission using machine learning. Designed for beginners, it introduces automated ML capabilities with AWS SageMaker Autopilot, guiding you through data preprocessing, automated model training, and deployment. You will learn how SageMaker simplifies the ML workflow, enabling you to build effective predictive models with minimal coding.

Project 2. Predicting Customer Churn using ML Model

This project focuses on predicting customer churn to help businesses retain valuable customers. Using AWS SageMaker, you will explore data preparation, feature engineering, model training, and evaluation. The project emphasizes practical application of ML techniques for classification problems and demonstrates how to operationalize models for real-world business impact.

Project 3. Housing Price Prediction with SageMaker Autopilot

This project uses SageMaker Autopilot to build an automated machine learning model that predicts housing prices based on various property and market features. It introduces end-to-end automated ML workflows, including data ingestion, model generation, tuning, and deployment, helping you understand the benefits of AutoML in regression tasks.

Project 4. Credit Card Fraud Detection

Designed to detect fraudulent credit card transactions, this project covers the complete ML lifecycle on AWS. You will work with imbalanced datasets, perform feature engineering, and train classification models using SageMaker. The project highlights practical strategies to improve model accuracy and reliability for fraud detection.

Project 5. Build a RAG Assistant

This project guides you in building a Retrieval-Augmented Generation (RAG) assistant leveraging AWS Bedrock and Foundation Models. You will learn how to combine external knowledge retrieval with generative AI to create intelligent assistants that provide accurate and context-aware responses.

Project 6. Build a Crypto AI Agent using Amazon Bedrock

This project explores building an AI agent using Amazon Bedrock’s Foundation Models to analyze cryptocurrency market data. It covers data ingestion, model customization, and deploying intelligent agents capable of providing market insights and predictions, demonstrating the power of GenAI in financial technology.

Project 7. Real-Time Stock Data Processing

This project implements a real-time data processing pipeline for stock market data using AWS services. You will learn to ingest streaming data, process it, and apply ML models for timely stock trend analysis and prediction, highlighting practical real-time analytics solutions on AWS.

Project 8. Building an End-to-End ML Pipeline using SageMaker

This project demonstrates how to design and deploy a full machine learning pipeline using SageMaker. From data ingestion and preprocessing to model training, hyperparameter tuning, deployment, and monitoring, you will gain hands-on experience automating ML workflows for scalable production environments.

Project 9. MLOps on AWS: ML Pipeline Automation

Focusing on MLOps best practices, this project teaches how to automate machine learning workflows using SageMaker Pipelines and AWS Step Functions. You will build CI/CD pipelines that enable continuous integration, testing, and deployment of ML models for efficient lifecycle management.

Project 10. Image Semantic Segmentation using AWS SageMaker

This project covers training and deploying deep learning models for semantic segmentation tasks using AWS SageMaker. You will learn how to label images, build models that classify each pixel in an image, and deploy solutions for computer vision applications like medical imaging or autonomous driving.

3. Certifications Covered Within The Program

1. AWS Certified Cloud Practitioner (CLF-C02)

This foundational certification validates your understanding of AWS Cloud concepts, core services, security, architecture, pricing, and support. It is ideal for beginners to demonstrate general AWS knowledge and cloud fluency, preparing you for more advanced certifications.

Related Readings: AWS Certified Cloud Practitioner (CLF-C02) Exam – Complete Guide by K21 Academy

2. AWS Certified AI Practitioner (AIF-C01)

Designed for individuals familiar with AI/ML technologies on AWS, this certification covers foundational AI/ML concepts, generative AI, and AWS AI services like SageMaker, Comprehend, and Lex. It suits business analysts, IT support, and project managers exploring AI applications.

Related Readings: Ultimate Guide to AWS Certified AI Practitioner (AIF-C01) 2025: From K21 Academy

3. AWS Certified Machine Learning – Associate (MLA-C01)

This certification focuses on building, training, tuning, and deploying ML models on AWS. It covers data preparation, modeling, and evaluation, and is aimed at people in development or data science roles.

Related Readings: Prepare for AWS MLA-C01 with K21 Academy: Associate ML Engineer Exam

4. AWS Certified Machine Learning – Specialty (MLS-C01)

An advanced certification validating expertise in designing and deploying ML solutions on AWS. It covers data engineering, exploratory data analysis, model implementation, and operationalizing ML workflows at scale.

Related Readings: AWS Certified Machine Learning – Specialty Certification

Related References

- Join Our Generative AI WhatsApp Community

- Introduction To Amazon SageMaker Built-in Algorithms

- Introduction to Generative AI and Its Mechanisms

- Mastering Generative Adversarial Networks (GANs)

- Generative AI (GenAI) vs Traditional AI vs Machine Learning (ML) vs Deep Learning (DL)

- AWS Certified AI Practitioner (AIF-C01) Certification Exam

- AWS Certified Machine Learning Engineer – Associate (MLA-C01) Exam

Next Task For You

Don’t miss our EXCLUSIVE Free Training on Generative AI on AWS Cloud! This session is perfect for those pursuing the AWS Certified AI Practitioner certification. Explore AI, ML, DL, & Generative AI in this interactive session.

The post AWS AI/ML: Hands-On Labs & Projects for High-Paying Careers in 2025 appeared first on Cloud Training Program.
