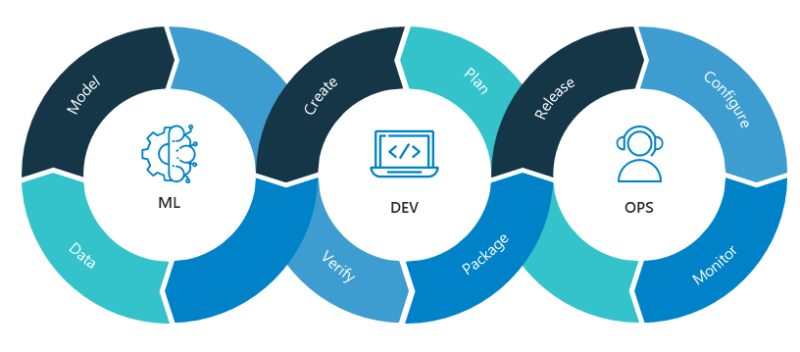

In the fast-evolving world of artificial intelligence (AI) and machine learning (ML), MLOps (Machine Learning Operations) has emerged as a critical framework for managing and deploying ML models efficiently. Machine Learning Operations bridges the gap between data science, development, and operations by integrating DevOps principles into the ML lifecycle.

This blog explores the fundamentals, core principles, benefits, best practices, and key use cases of Machine Learning Operations, providing a comprehensive roadmap for businesses and professionals looking to implement Machine Learning Operations successfully.


What is MLOps?

Machine Learning Operations is a collection of best practices for automating, managing, and streamlining the process of creating, deploying, and maintaining machine learning (ML) models in real-world applications. It combines ML development with DevOps (the practice of unifying software development and IT operations) so that ML models run reliably and consistently in production.

Machine Learning Operations helps organizations:

- Automate repetitive tasks in the ML lifecycle

- Ensure models are continuously updated and monitored

- Standardize workflows for better collaboration between teams

- Improve the reliability and scalability of ML models in production

Machine Learning Operations enables businesses to deploy AI solutions more quickly, reduce errors, and maintain model performance over time, making it an essential component of modern AI-driven applications.

Related Readings: Azure Machine Learning Operations

What is the Use of MLOps?

Machine Learning Operations helps bridge the gap between model development and production deployment. It allows organizations to:

- Automate ML Workflows: Automating the steps that take an ML model to production reduces manual intervention and the errors that come with it.

- Improve Collaboration: It enables better collaboration among data scientists, developers, and operations teams during the ML lifecycle.

- Monitor and Maintain Models: Continuous monitoring detects model drift early so it can be corrected, which sustains the model’s performance over time.

- Compliance: Provide transparency and traceability during the development and deployment of the model to meet governance and regulatory requirements.

Why do we need MLOps?

To begin the machine learning process, your company normally must first prepare data. You collect data from numerous sources and perform tasks such as aggregation, deduplication, and feature engineering.

After that, you’ll utilize the data to train and validate the machine learning model. You can then use the trained and verified model as a prediction service, which other applications can access via APIs.

Exploratory data analysis and model selection usually involve experimenting with several candidate models before one version is ready for deployment. This leads to frequent model deployments and data versioning, so experiment tracking and ML training pipeline management need to be in place before your apps can integrate or use the model in their code.

Machine Learning Operations is essential for deploying new ML models in step with changes to application code and data, in a systematic and synchronized manner. An optimal MLOps implementation treats ML assets the same way other software assets are treated in a continuous integration and delivery (CI/CD) environment. As part of a single release process, you deploy ML models alongside the applications and services that use them.

Related Readings: Machine Learning Life Cycle

What are the core principles of MLOps?

MLOps is built on four key principles that ensure efficient, scalable, and reliable machine learning workflows. These principles help organizations manage ML models effectively and reduce errors, improve automation, and enhance collaboration.

1. Version Control

Why it’s important:

Version control ensures that all ML assets (datasets, models, and code) are tracked and versioned. This makes it easy to reproduce results, roll back to previous versions, and audit changes.

How it works:

- ML training code and model specifications go through a code review process.

- Versioning tools like Git, DVC, and MLflow track changes in code, data, and models.

- Versioning supports reproducibility: rerunning the same code on the same data with the same configuration (including fixed random seeds) should produce the same results.

Example: If a new model version performs worse than the previous one, you can roll back to an earlier version without losing progress.
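The rollback example above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: `ModelRegistry` and `fingerprint` are hypothetical names invented here, not the API of Git, DVC, or MLflow, and the registry lives in memory rather than in a real store.

```python
import hashlib
import json


def fingerprint(obj) -> str:
    """Return a stable SHA-256 hash of a JSON-serializable object."""
    payload = json.dumps(obj, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


class ModelRegistry:
    """Toy in-memory registry (hypothetical): each version records its inputs."""

    def __init__(self):
        self.versions = []   # entry at index i describes version i + 1
        self.active = None   # version number currently being served

    def register(self, params, data, metric):
        entry = {
            "version": len(self.versions) + 1,
            "params_hash": fingerprint(params),
            "data_hash": fingerprint(data),
            "metric": metric,
        }
        self.versions.append(entry)
        self.active = entry["version"]
        return entry

    def rollback(self):
        """Serve the previous version again; history is kept, not deleted."""
        if self.active is None or self.active <= 1:
            raise ValueError("no earlier version to roll back to")
        self.active -= 1
        return self.versions[self.active - 1]


registry = ModelRegistry()
registry.register({"max_depth": 3}, [[1, 0], [0, 1]], metric=0.91)
registry.register({"max_depth": 8}, [[1, 0], [0, 1]], metric=0.84)

# The newer model scores worse, so serve the earlier one again.
if registry.versions[-1]["metric"] < registry.versions[-2]["metric"]:
    registry.rollback()
```

Because every entry stores a hash of the parameters and data that produced it, the worse-performing version stays in the history for auditing even after the rollback.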

2. Automation

Why it’s important:

Automation helps ensure repeatability, consistency, and scalability across the ML lifecycle. It reduces manual work and speeds up the ML development process.

What can be automated?

- Data ingestion and preprocessing

- Model training and validation

- Model deployment

- Infrastructure management (using Infrastructure as Code – IaC)

Triggers for automation:

- Data changes (e.g., new data is available)

- Code updates (e.g., a new ML algorithm is introduced)

- Monitoring alerts (e.g., model performance drops)

- Scheduled retraining (e.g., weekly or monthly updates)

Tools Used: GitHub Actions, Airflow, MLflow, DVC, Terraform (for IaC).
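The four triggers listed above can be expressed as one small decision function. The sketch below is an assumption-laden illustration: `PipelineState`, `retraining_reasons`, the 0.90 accuracy floor, and the seven-day schedule are all made up for the example, and a real setup would usually delegate this logic to an orchestrator or CI system.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class PipelineState:
    """What the pipeline remembers about its last successful run."""
    last_data_hash: str
    last_code_hash: str
    last_trained_at: datetime
    current_accuracy: float


def retraining_reasons(state, data_hash, code_hash, now,
                       min_accuracy=0.90, max_age=timedelta(days=7)):
    """Return the triggers that currently call for a pipeline run."""
    reasons = []
    if data_hash != state.last_data_hash:
        reasons.append("data_changed")
    if code_hash != state.last_code_hash:
        reasons.append("code_updated")
    if state.current_accuracy < min_accuracy:
        reasons.append("monitoring_alert")
    if now - state.last_trained_at >= max_age:
        reasons.append("scheduled_retraining")
    return reasons


state = PipelineState("d1", "c1", datetime(2025, 1, 1), 0.93)

# New data arrived two days after the last training run.
reasons = retraining_reasons(state, "d2", "c1", now=datetime(2025, 1, 3))
print(reasons)  # ['data_changed']
```

Returning the list of reasons, rather than a single yes or no, keeps a record of why each run was started.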

3. Continuous X (CI/CD/CT/CM)

Machine Learning Operations extends the principles of Continuous Integration and Continuous Delivery (CI/CD) from software engineering to machine learning.

Four Continuous Processes in MLOps:

- Continuous Integration (CI): Automatically tests code, data, and models to ensure quality.

- Continuous Delivery (CD): Deploys new models or services automatically to production.

- Continuous Training (CT): Triggers model retraining whenever data changes.

- Continuous Monitoring (CM): Keeps track of model performance, detects drift, and ensures accuracy over time.

Example: If a model’s accuracy drops below a certain threshold, MLOps can trigger automatic retraining and redeployment.

Tools Used: GitHub Actions, CML (Continuous Machine Learning), Evidently AI, Deepchecks.
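To make the link between continuous monitoring and continuous training concrete, here is a minimal sketch of a drift check that feeds a retraining decision. It uses the Population Stability Index (PSI) as one possible drift score. That choice, the 0.2 cut-off (a common rule of thumb), and the function names `psi` and `next_action` are assumptions made for illustration, not the method used by Evidently AI or Deepchecks.

```python
import math


def psi(reference, current, bins=10, eps=1e-6):
    """Population Stability Index between two samples of one numeric feature."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 for constant data

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        # eps keeps empty bins from causing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    ref_p, cur_p = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


def next_action(accuracy, drift_score, min_accuracy=0.90, max_drift=0.2):
    """Decide whether the monitored model should be retrained."""
    if accuracy < min_accuracy or drift_score > max_drift:
        return "retrain"
    return "keep_serving"


reference = [i / 100 for i in range(100)]      # feature values seen in training
shifted = [0.5 + i / 200 for i in range(100)]  # production values, shifted upward

drift = psi(reference, shifted)
action = next_action(accuracy=0.95, drift_score=drift)
```

Here accuracy still looks healthy, but the input distribution has moved, so the drift score alone is enough to request retraining.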

4. Model Governance & Compliance

Why it’s important:

ML models must be secure, fair, and aligned with business goals. Governance ensures compliance with regulations and ethical AI practices.

Key aspects of model governance:

- Collaboration: Ensuring data scientists, engineers, and business stakeholders are aligned.

- Documentation: Keeping clear records of model decisions, datasets, and experiments.

- Security & Access Control: Protecting sensitive data and managing who can deploy models.

- Bias & Fairness Checks: Ensuring models do not discriminate against certain groups.

- Model Approval Processes: Reviewing models before they go live, checking for risks and ethical concerns.

Example: Before deploying a credit-scoring model, a bank must check for bias and fairness to avoid discrimination against certain customers.

Tools Used: SHAP (Explainability), Fairlearn (Bias Detection), MLflow (Model Governance).
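A bias check like the one in the credit-scoring example can start with something as simple as comparing approval rates across groups. The sketch below computes a demographic parity gap in plain Python. It does not call Fairlearn, and the sample decisions, group labels, and 0.10 tolerance are hypothetical values chosen only to show the mechanics.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive (1) predictions within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Largest gap in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical approval decisions (1 = approved) for two applicant groups.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

gap = demographic_parity_difference(preds, groups)
approved_for_release = gap <= 0.10  # tolerance is an assumed policy value
print(gap, approved_for_release)  # 0.5 False
```

Demographic parity is only one of several fairness definitions, so a real approval process would typically look at more than this single number.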

What are the benefits of MLOps?

Machine learning enables firms to evaluate data and gain insights for decision-making. However, it is a creative and experimental field, which has its own set of obstacles. Sensitive data protection, limited funds, expertise shortages, and ever-changing technology all hinder project success. Without management and supervision, costs can skyrocket and data science teams may fail to reach their goals.

Here are the key benefits of MLOps, simplified and made easy to understand:

1. Faster Time to Market

MLOps accelerates the process of creating and deploying machine learning models. By automating processes like model deployment and infrastructure setup, businesses can launch more quickly and stay ahead of the competition.

2. Increased Productivity

Teams can operate more effectively with MLOps. By standardizing environments and reusing models, engineers can save time, experiment more quickly, and concentrate on high-value tasks. The result is better teamwork and faster project completion.

3. Efficient Model Management

MLOps enables teams to efficiently manage models in production. They can monitor performance, track versions, and troubleshoot issues faster. This results in consistent models that perform well over time.

4. Reduced Costs

MLOps contributes to lower operational expenses by automating repetitive processes and increasing productivity. It streamlines operations, so maintaining the ML lifecycle takes less manual work and fewer resources.

5. Better Collaboration

MLOps improves collaboration among data scientists, engineers, and business teams. Everyone works under a unified framework, which improves communication and helps ensure that the machine learning models align with business needs.

6. Scalability

MLOps makes it easy to scale machine learning models across diverse settings. As the company grows, MLOps ensures that new models and changes can be implemented smoothly without disturbing existing systems.

In short, MLOps enables faster, more efficient, and cost-effective machine learning operations, leading to better results for your business.

What are the components of MLOps?

The scope of MLOps in machine learning initiatives can be as narrow or broad as needed. In some circumstances, MLOps can cover everything from data pipeline to model production, whilst in others, MLOps may only cover the model deployment process. The majority of organizations apply MLOps principles to the following:

- Exploratory Data Analysis (EDA): Exploring and analyzing data to understand patterns and relationships.

- Data Preparation & Feature Engineering: Cleaning and transforming data into features suitable for model training.

- Model Training & Tuning: Training the model and optimizing its performance through parameter adjustments.

- Model Review & Governance: Ensuring models meet quality, compliance, and performance standards before deployment.

- Model Inference & Serving: Deploying models to make predictions on new data in real-time or batch.

- Model Monitoring: Continuously tracking model performance to detect issues or degradation.

- Automated Model Retraining: Automatically retraining models when performance drops or when new data becomes available.
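The stages above can be chained into a very small end-to-end pipeline. The following is a toy sketch, not a production design: the function names `prepare`, `train`, `review`, and `serve`, the one-feature threshold "model", and the 0.8 approval bar are all assumptions for illustration, and accuracy is measured on the training rows only to keep the example short.

```python
def prepare(raw):
    """Data preparation: drop incomplete rows and exact duplicates."""
    clean = [row for row in raw if None not in row]
    return sorted(set(clean))


def train(rows):
    """Training and tuning: pick the threshold on x that best fits the labels."""
    def accuracy(threshold):
        hits = sum((x >= threshold) == bool(y) for x, y in rows)
        return hits / len(rows)

    best = max((x for x, _ in rows), key=accuracy)
    return {"threshold": best, "accuracy": accuracy(best)}


def review(model, min_accuracy=0.8):
    """Review and governance: a simple quality gate before deployment."""
    return model["accuracy"] >= min_accuracy


def serve(model, x):
    """Inference: return 1 if x falls on the positive side of the threshold."""
    return int(x >= model["threshold"])


# Rows are (feature, label); one duplicate and one incomplete row included.
raw = [(1, 0), (2, 0), (3, 1), (4, 1), (2, 0), (None, 1)]

rows = prepare(raw)
model = train(rows)
if review(model):
    prediction = serve(model, 3.5)
```

Monitoring and automated retraining would wrap around this chain, calling `train` again when the served model's measured performance drops.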

Related Readings: Exploratory Data Analysis (EDA)

Key use cases for MLOps

1. Predictive Maintenance

MLOps is useful in developing and maintaining models that forecast when machinery or equipment may break. These models are constantly monitored, retrained, and updated as new data becomes available, ensuring that forecasts stay accurate and dependable.

2. Fraud Detection

Organizations can implement and update fraud detection models with MLOps. The model can adjust to new fraud trends and continue to work over time with ongoing monitoring and automated retraining.

3. Customer Personalization

E-commerce and streaming platforms use MLOps to deliver personalized experiences by continuously training models on customer behavior. MLOps ensures that the recommendation systems are always up-to-date and optimized for user preferences.

4. Demand Forecasting

MLOps is used in supply chain management to forecast demand for products. It helps to ensure that the forecasting models are regularly updated and perform well even as market conditions change.

5. Healthcare Diagnostics

In healthcare, MLOps allows for continuous model training with new patient data. This helps in developing diagnostic models that can detect diseases early and accurately, with performance monitored to ensure precision.

6. Sentiment Analysis

Businesses use MLOps for real-time sentiment analysis on social media or customer reviews. By automating the retraining of models with new data, the sentiment analysis models stay relevant and accurate over time.

7. Autonomous Vehicles

MLOps is essential for autonomous vehicle systems because it helps ensure that the machine learning models handling navigation, obstacle detection, and decision-making are updated and redeployed regularly to preserve performance and safety.

Related Readings: MLOps Hands-On Labs & Projects for High-Paying Careers in 2025

What are the best practices for MLOps?

Developing and maintaining machine learning models can be complex and time-consuming. Implementing MLOps best practices streamlines the process, improves collaboration, and enhances model performance. Here are the key practices:

1. Automated Dataset Validation

Ensuring the quality of data is crucial for effective ML models. Key practices include:

- Identifying and removing duplicate data

- Handling missing values

- Filtering out outliers

- Removing irrelevant data

Tools like TensorFlow Data Validation (TFDV) automate this process, simplifying data cleansing and anomaly detection, reducing manual work, and enhancing the model’s reliability.
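To show what such checks look like in their simplest form, here is a hand-rolled sketch covering duplicates, missing values, and outliers. It is not TFDV and does not use its API. The function name `validate_rows`, the sample records, and the 1.5 × IQR outlier rule are assumptions chosen for the example.

```python
import statistics


def validate_rows(rows, numeric_field, required_fields):
    """Report row indices that are duplicates, have missing values, or are outliers."""
    seen, duplicates, missing = set(), [], []
    for i, row in enumerate(rows):
        key = tuple(sorted(row.items()))  # order-independent row identity
        if key in seen:
            duplicates.append(i)
        seen.add(key)
        if any(row.get(field) is None for field in required_fields):
            missing.append(i)

    values = [r[numeric_field] for r in rows if r.get(numeric_field) is not None]
    q1, _, q3 = statistics.quantiles(values, n=4)
    low, high = q1 - 1.5 * (q3 - q1), q3 + 1.5 * (q3 - q1)
    outliers = [i for i, r in enumerate(rows)
                if r.get(numeric_field) is not None
                and not low <= r[numeric_field] <= high]
    return {"duplicates": duplicates, "missing": missing, "outliers": outliers}


rows = [
    {"id": 1, "amount": 10.0},
    {"id": 2, "amount": 12.0},
    {"id": 2, "amount": 12.0},   # exact duplicate of the previous row
    {"id": 3, "amount": None},   # missing value
    {"id": 4, "amount": 11.0},
    {"id": 5, "amount": 13.0},
    {"id": 6, "amount": 12.5},
    {"id": 7, "amount": 11.5},
    {"id": 8, "amount": 500.0},  # far outside the usual range
]

report = validate_rows(rows, "amount", ["id", "amount"])
print(report)  # {'duplicates': [2], 'missing': [3], 'outliers': [8]}
```

The function only reports problems and leaves the decision to drop, impute, or keep a row to the pipeline that calls it.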

2. Collaborative Work Environment

Fostering collaboration between teams speeds up innovation and reduces redundant work. Creating a centralized hub where all team members can share code, monitor progress, and discuss model deployment ensures smoother workflows.

For example, SCOR, a global reinsurer, built a ‘Data Science Center of Excellence’ that helped them meet customer needs 75% faster.

3. Application Monitoring

After deploying an ML model, continuous monitoring is essential to detect performance issues, like model drift or errors. Monitoring tools track:

- Data quality

- Latency

- Downtime

- Response time

For instance, DoorDash Engineering uses continuous monitoring tools to handle ML model drift, keeping models accurate despite data changes.
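Latency is one of the easier signals to monitor. The sketch below keeps a rolling window of request latencies and flags when the 95th percentile exceeds a budget. `LatencyMonitor`, the window of 100 requests, and the 200 ms budget are hypothetical, and this is not a description of any particular company's tooling.

```python
import math
from collections import deque


class LatencyMonitor:
    """Rolling-window check of p95 latency against a budget (hypothetical)."""

    def __init__(self, window=100, p95_budget_ms=200.0):
        self.samples = deque(maxlen=window)  # oldest samples fall out automatically
        self.p95_budget_ms = p95_budget_ms

    def record(self, latency_ms):
        self.samples.append(latency_ms)

    def p95(self):
        """Nearest-rank 95th percentile of the current window."""
        ordered = sorted(self.samples)
        rank = math.ceil(len(ordered) * 95 / 100)
        return ordered[rank - 1]

    def breached(self):
        return bool(self.samples) and self.p95() > self.p95_budget_ms


monitor = LatencyMonitor()
for ms in range(1, 101):   # 100 healthy requests taking 1..100 ms
    monitor.record(ms)
healthy_p95 = monitor.p95()

for _ in range(10):        # a burst of slow requests
    monitor.record(500)
alert = monitor.breached()
```

The same rolling-window pattern can be reused for error rates or data-quality scores by recording a different value per request.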

4. Reproducibility

Keeping a detailed record of the entire ML workflow allows for easy model replication and validation. Establishing a central repository for model artifacts ensures that results can be reproduced by others.

Airbnb’s Bighead platform is an example of maintaining reproducibility by centralizing model development artifacts, allowing for easy iteration and transparency.
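At the level of a single run, reproducibility mostly comes down to recording the configuration and fixing the sources of randomness. The following sketch illustrates that idea with the standard library only. `run_manifest` and the seeded random search standing in for training are assumptions for illustration and are unrelated to how Bighead works internally.

```python
import platform
import random


def run_manifest(config, seed):
    """Record what is needed to repeat a run: config, seed, and Python version."""
    return {
        "config": config,
        "seed": seed,
        "python": platform.python_version(),
    }


def train(config, seed):
    """Stand-in for training: a seeded random search over a learning rate."""
    rng = random.Random(seed)  # local generator, so no global state is touched
    low, high = config["lr_range"]
    candidates = [rng.uniform(low, high) for _ in range(config["trials"])]
    return min(candidates)


config = {"lr_range": [0.001, 0.1], "trials": 5}
manifest = run_manifest(config, seed=42)

# Replaying the run from its manifest gives the same result.
first = train(manifest["config"], manifest["seed"])
second = train(manifest["config"], manifest["seed"])
```

Storing the manifest next to the model artifact in a central repository is what lets someone else repeat the run later.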

5. Experiment Tracking

Data scientists often run multiple experiments to identify the best-performing model. Keep a systematic record of the following so that runs can be compared and repeated:

- Scripts

- Datasets

- Model architectures

- Experiment results

Domino’s Enterprise MLOps Platform is an excellent example of a centralized system that tracks all data science efforts, ensuring that the best model is selected for deployment.
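A lightweight version of experiment tracking can be built with nothing more than an append-only log file. The sketch below writes one JSON line per run and then picks the best one. The helper names `log_run` and `best_run`, the file name, and the sample runs are hypothetical, and this is not the interface of Domino or any other platform.

```python
import json
import tempfile
from pathlib import Path


def log_run(log_path, script, dataset, architecture, metrics):
    """Append one experiment record as a JSON line."""
    record = {"script": script, "dataset": dataset,
              "architecture": architecture, "metrics": metrics}
    with open(log_path, "a", encoding="utf-8") as fh:
        fh.write(json.dumps(record) + "\n")


def best_run(log_path, metric):
    """Return the logged run with the highest value of `metric`."""
    with open(log_path, encoding="utf-8") as fh:
        runs = [json.loads(line) for line in fh if line.strip()]
    return max(runs, key=lambda run: run["metrics"][metric])


# A temporary directory keeps the example self-contained.
log_path = Path(tempfile.mkdtemp()) / "experiments.jsonl"

log_run(log_path, "train.py", "sales_v1", "linear", {"accuracy": 0.81})
log_run(log_path, "train.py", "sales_v2", "gradient_boosting", {"accuracy": 0.88})

winner = best_run(log_path, "accuracy")
print(winner["architecture"])  # gradient_boosting
```

Each record covers the four items listed above (script, dataset, architecture, results), which is the minimum needed to compare runs later.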

What is the difference between MLOps and DevOps?

| Aspect | DevOps | MLOps |
|---|---|---|
| Purpose | Bridges gap between development and operations | Adapts DevOps principles for machine learning projects |
| Scope | Software applications | Machine learning models and related processes |
| Focus | Automates testing, integration, and deployment of code | Automates the entire ML lifecycle from data collection to deployment and retraining |
| Challenges | Scaling infrastructure, uptime, performance monitoring | Managing data quality, model drift, model retraining |
| Automation | Automates code deployment and integration | Automates model development, monitoring, and retraining |
| Collaboration | Collaboration between development and operations teams | Collaboration between data scientists, ML engineers, and software teams |
| Outcome | Faster release cycles, improved application quality, efficient resource use | Faster deployment of ML models, better model accuracy, stronger business value assurance |

Related Readings: What Is DevOps?

Conclusion

In a nutshell, MLOps is more than a collection of procedures. It is a methodology that speeds up the development, deployment, and management of ML models while keeping them aligned with business goals and producing reliable, high-quality outcomes.

FAQs

1. What is MLOps, and why is it important?

MLOps (Machine Learning Operations) is the practice of streamlining the development, deployment, and maintenance of machine learning models in production. It integrates DevOps principles with machine learning workflows to ensure scalability, reliability, and efficiency.

2. How is MLOps different from DevOps?

While DevOps focuses on software development and operations, MLOps extends these principles to machine learning, managing data pipelines, model training, monitoring, and retraining.

3. What are the key benefits of MLOps?

MLOps helps organizations by:

- Automating ML workflows

- Improving model reliability and scalability

- Enhancing collaboration between data scientists and engineers

- Reducing operational costs

- Ensuring compliance and governance

Next Task For You

Don’t miss our EXCLUSIVE Free Training on MLOps!  This session is ideal for aspiring Machine Learning Engineers, Data Scientists, and DevOps Professionals looking to master the art of operationalizing machine learning workflows. Dive into the world of MLOps with hands-on insights on CI/CD pipelines, ML model versioning, containerization, and monitoring.


The post What is MLOps? – Everything You Need to Know appeared first on Cloud Training Program.
