In this blog post, we walk through the steps involved in deploying an Azure Databricks model with Azure Machine Learning.

Azure Databricks

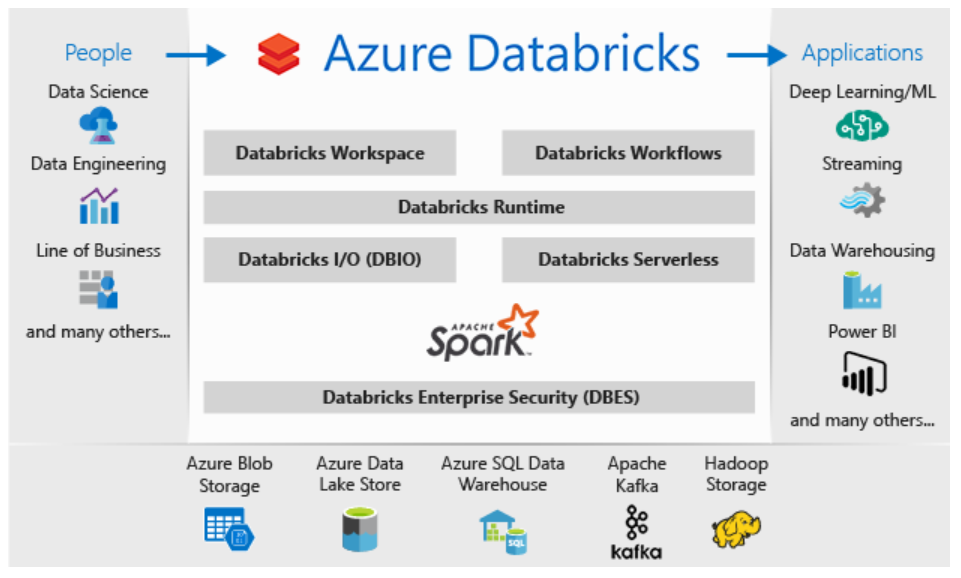

Azure Databricks is an analytics service on the Microsoft Azure cloud platform. It brings the Apache Spark-based Databricks platform to Azure and integrates natively with Azure security and data services.

Prepare Data for Machine Learning with Azure Databricks

Raw data is often noisy and unreliable and may contain missing values and outliers. Using such data for Machine Learning can produce misleading results. Thus, data cleaning of the raw data is one of the most important steps in preparing data for Machine Learning. As a Machine Learning algorithm learns the rules from data, having clean and consistent data is an important factor in influencing the predictive abilities of the underlying algorithms.
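
As a minimal illustration of the cleaning step, the sketch below fills missing values with the column median and clips outliers to the 1.5 × IQR fences. It uses only the Python standard library so that it runs anywhere; on Azure Databricks you would more likely express the same logic with Spark DataFrames. The function name `clean_column`, the sample fares, and the choice of median imputation with an IQR rule are illustrative assumptions, not part of the original walkthrough.

```python
import statistics


def clean_column(values, k=1.5):
    """Fill missing values (None) with the median, then clip outliers.

    Outliers are values outside [Q1 - k*IQR, Q3 + k*IQR]. Median
    imputation and the IQR rule are illustrative choices only.
    """
    present = [v for v in values if v is not None]
    median = statistics.median(present)
    q1, _, q3 = statistics.quantiles(present, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr

    cleaned = []
    for v in values:
        v = median if v is None else v   # impute the missing value
        v = min(max(v, low), high)       # clip to the IQR fences
        cleaned.append(v)
    return cleaned


# Hypothetical taxi fares with one missing value and one extreme outlier
fares = [8.5, 12.0, None, 9.5, 11.0, 250.0, 10.0, 9.0, 10.5, 11.5]
cleaned_fares = clean_column(fares)
print(cleaned_fares)
```

Whether to impute, clip, or drop such rows depends on the data and the model, so treat the thresholds here as a starting point rather than a rule.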

Train a Machine Learning Model

To train and evaluate a machine learning model with Azure Databricks, data scientists can use the Spark ML library as well as other machine learning frameworks.

Training a model relies on three key abstractions: a transformer, an estimator, and a pipeline.
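
To make the three abstractions concrete, here is a small plain-Python sketch of the pattern. It is not the Spark ML API: the class names (`Scaler`, `MeanRatioEstimator`, and so on) and the toy data are hypothetical, and only the roles mirror Spark ML. A transformer maps a dataset to a new dataset, an estimator learns from a dataset and returns a fitted model (which is itself a transformer), and a pipeline chains stages together.

```python
class Scaler:
    """Transformer: maps a dataset to a new dataset."""

    def __init__(self, factor):
        self.factor = factor

    def transform(self, rows):
        return [(x * self.factor, y) for x, y in rows]


class MeanRatioModel:
    """Fitted model: a transformer that appends a prediction to each row."""

    def __init__(self, ratio):
        self.ratio = ratio

    def transform(self, rows):
        return [(x, y, x * self.ratio) for x, y in rows]


class MeanRatioEstimator:
    """Estimator: learns a parameter from data and returns a fitted model."""

    def fit(self, rows):
        ratio = sum(y for _, y in rows) / sum(x for x, _ in rows)
        return MeanRatioModel(ratio)


class PipelineModel:
    """Fitted pipeline: applies every fitted stage in order."""

    def __init__(self, stages):
        self.stages = stages

    def transform(self, rows):
        for stage in self.stages:
            rows = stage.transform(rows)
        return rows


class Pipeline:
    """Chains stages; fitting replaces each estimator with its fitted model."""

    def __init__(self, stages):
        self.stages = stages

    def fit(self, rows):
        fitted = []
        for stage in self.stages:
            if hasattr(stage, "fit"):
                stage = stage.fit(rows)   # estimator -> fitted transformer
            fitted.append(stage)
            rows = stage.transform(rows)  # output feeds the next stage
        return PipelineModel(fitted)


# Toy (feature, label) rows
training_rows = [(1.0, 2.5), (2.0, 5.0), (4.0, 10.0)]
pipeline_model = Pipeline([Scaler(2.0), MeanRatioEstimator()]).fit(training_rows)
predictions = [row[2] for row in pipeline_model.transform(training_rows)]
print(predictions)
```

In Spark ML the same roles are played by classes operating on DataFrames, but the fit-then-transform flow is the part worth remembering.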

After training your model on Azure Databricks compute, you may want to deploy it so that business applications or end users can consume it. You can do this by using Azure Machine Learning. In this blog, you will learn how to deploy models using Azure Databricks and Azure Machine Learning.

In machine learning, model deployment is the process of integrating a trained model into a production environment so that business or end-user applications can use its predictions to make decisions or gain insights into data. The most common way to deploy a model from Azure Databricks using Azure Machine Learning is as a real-time inferencing service. Here, inferencing refers to using a trained model to make predictions on new input data that it was not trained on.

What is Real-Time Inferencing?

In real-time inferencing, the model is deployed as part of a service that lets applications request immediate (real-time) predictions for individual data observations or small numbers of them.

In Azure Machine Learning, you can create real-time inferencing solutions by deploying a model as a real-time service, hosted in a containerized platform such as Azure Kubernetes Service (AKS).

Plan for Azure Machine Learning deployment endpoints

After you have trained your machine learning model and evaluated it to the point where you are ready to use it outside your own development or test environment, you need to deploy it somewhere. Azure Machine Learning service simplifies this process. You can use the service components and tools to register your model and deploy it to one of the available compute targets so it can be made available as a web service in the Azure cloud, or on an IoT Edge device.

Available compute targets

You can use the following compute targets to host your web service deployment:

| Compute target | Usage | Description |
|---|---|---|
| Local web service | Testing/debug | Good for limited testing and troubleshooting. |
| Azure Kubernetes Service (AKS) | Real-time inference | Good for high-scale production deployments. Provides autoscaling, and fast response times. |
| Azure Container Instances (ACI) | Testing | Good for low scale, CPU-based workloads. |
| Azure Machine Learning Compute Clusters | Batch inference | Run batch scoring on serverless compute. Supports normal and low-priority VMs. |
| Azure IoT Edge | (Preview) IoT module | Deploy & serve ML models on IoT devices. |

Deploy a model to Azure Machine Learning

As discussed above, you can deploy a model to several kinds of compute target, including local compute, Azure Container Instances (ACI), an Azure Kubernetes Service (AKS) cluster, or an Internet of Things (IoT) module. Azure Machine Learning uses containers as a deployment mechanism, packaging the model and the code that uses it as an image that can be deployed to a container in your chosen compute target.

To deploy a model as an inferencing web service, you must perform the following tasks:

- Register a trained model.

- Define an Inference Configuration.

- Define a Deployment Configuration.

- Deploy the Model.

1. Register a trained model

After successfully training a model, you must register it in your Azure Machine Learning workspace. Your real-time service will then be able to load the model when required.

To register a model from a local file, you can use the register method of the Model class as shown here:

    from azureml.core import Model

    # ws is an existing azureml.core.Workspace object
    model = Model.register(workspace=ws,
                           model_name='nyc-taxi-fare',
                           model_path='model.pkl',  # local path
                           description='Model to predict taxi fares in NYC.')

2. Define an Inference Configuration

The model will be deployed as a service that consists of:

- A script to load the model and return predictions for submitted data.

- An environment in which the script will be run.

You must therefore define the script and environment for the service.

Creating an Entry Script

Create the entry script (sometimes referred to as the scoring script) for the service as a Python (.py) file. It must include two functions:

- init(): Called when the service is initialized.

- run(raw_data): Called when new data is submitted to the service.

Typically, you use the init function to load the model from the model registry and use the run function to generate predictions from the input data. The following example script shows this pattern:

    import json
    import joblib
    import numpy as np
    from azureml.core.model import Model

    # Called when the service is loaded
    def init():
        global model
        # Get the path to the registered model file and load it
        model_path = Model.get_model_path('nyc-taxi-fare')
        model = joblib.load(model_path)

    # Called when a request is received
    def run(raw_data):
        # Get the input data as a numpy array
        data = np.array(json.loads(raw_data)['data'])
        # Get a prediction from the model
        predictions = model.predict(data)
        # Return the predictions as any JSON serializable format
        return predictions.tolist()
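
Before packaging the script, it can help to check the init()/run() contract locally. The sketch below mirrors that contract with a stub model in place of the registered one, so it needs neither Azure nor the real model file. `StubModel` and the sample payload are hypothetical; the payload shape, a JSON object with a `data` key holding a list of rows, follows what run() in the script above expects.

```python
import json


class StubModel:
    """Stand-in for the real model: predicts the sum of each row."""

    def predict(self, rows):
        return [sum(row) for row in rows]


model = None


def init():
    """Load the model once, when the service starts."""
    global model
    model = StubModel()  # the real script loads the registered model here


def run(raw_data):
    """Parse the JSON request body and return JSON-serializable predictions."""
    data = json.loads(raw_data)["data"]
    return list(model.predict(data))


# Simulate one service start-up followed by one request
init()
request_body = json.dumps({"data": [[1.0, 2.0], [3.5, 0.5]]})
response = run(request_body)
print(response)
```

If run() raises here, for example because the payload lacks the `data` key, the same failure would surface as a runtime error in the deployed service, where it is harder to diagnose.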

Combining the Script and Environment in an InferenceConfig

After creating the entry script and environment, you can combine them in an InferenceConfig for the service like this:

    from azureml.core.model import InferenceConfig

    # myenv is the azureml.core.Environment in which the script will run
    inference_config = InferenceConfig(entry_script='score.py',
                                       source_directory='.',
                                       environment=myenv)
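
The `myenv` object passed to InferenceConfig is not defined anywhere in this post. As a hedged sketch, one way to produce it is to write a conda specification file and load it with the SDK's `Environment.from_conda_specification`. The file name, environment name, Python version, and package list below are assumptions; match them to the libraries your model was trained with. The SDK call is left as a comment so that the block runs without the Azure ML SDK installed.

```python
import os
import tempfile

# Assumed package list: azureml-defaults provides the web service runtime,
# the others match the imports used by the entry script in this post.
CONDA_SPEC = """\
name: inference-env
dependencies:
  - python=3.8
  - pip
  - pip:
      - azureml-defaults
      - scikit-learn
      - joblib
      - numpy
"""


def write_conda_spec(path):
    """Write the conda specification to path and return the path."""
    with open(path, "w", encoding="utf-8") as spec_file:
        spec_file.write(CONDA_SPEC)
    return path


spec_path = write_conda_spec(
    os.path.join(tempfile.gettempdir(), "inference-env.yml"))
print(spec_path)

# With the Azure ML SDK installed, the file could then be loaded like this:
#   from azureml.core import Environment
#   myenv = Environment.from_conda_specification(name="inference-env",
#                                                file_path=spec_path)
```

Pinning exact package versions in the file is usually worth the effort, since a version mismatch between training and inference is a common cause of model loading errors.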

3. Define a Deployment Configuration

Now that you have the entry script and environment, you need to configure the compute to which the service will be deployed. If you are deploying to an AKS cluster, you must create the cluster and a compute target for it before deploying:

    from azureml.core.compute import ComputeTarget, AksCompute

    cluster_name = 'aks-cluster'
    compute_config = AksCompute.provisioning_configuration(location='eastus')
    production_cluster = ComputeTarget.create(ws, cluster_name, compute_config)
    production_cluster.wait_for_completion(show_output=True)

With the compute target created, you can now define the deployment configuration, which sets the target-specific compute specification for the containerized deployment:

    from azureml.core.webservice import AksWebservice

    deploy_config = AksWebservice.deploy_configuration(cpu_cores=1,
                                                       memory_gb=1)

The code to configure an ACI deployment is similar, except that you do not need to explicitly create an ACI compute target, and you must use the deploy_configuration method of the azureml.core.webservice.AciWebservice class. Similarly, you can use the azureml.core.webservice.LocalWebservice class to configure a local Docker-based service.
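
The three variants can be gathered in one small helper, sketched below. The helper name `build_deploy_config`, its parameters, and the target labels are hypothetical; only the `deploy_configuration` calls come from the Azure ML SDK. The SDK import is deferred until the function is called with a valid target, so the helper can be defined without the SDK installed.

```python
def build_deploy_config(target, cpu_cores=1, memory_gb=1, port=8890):
    """Return a deployment configuration for 'aks', 'aci', or 'local'."""
    target = target.lower()
    if target not in ("aks", "aci", "local"):
        raise ValueError(f"Unsupported deployment target: {target!r}")

    # Imported lazily so that validation works without the SDK installed
    from azureml.core.webservice import (AciWebservice, AksWebservice,
                                         LocalWebservice)

    if target == "aks":
        return AksWebservice.deploy_configuration(cpu_cores=cpu_cores,
                                                  memory_gb=memory_gb)
    if target == "aci":
        return AciWebservice.deploy_configuration(cpu_cores=cpu_cores,
                                                  memory_gb=memory_gb)
    # Local Docker-based service: only the host port is configured here
    return LocalWebservice.deploy_configuration(port=port)


# Example (requires the Azure ML SDK):
#   deploy_config = build_deploy_config("aci")
```

Keeping the choice of target in one place makes it easier to test on ACI or locally first and switch to AKS for production.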

4. Deploy the Model

After all of the configuration is prepared, you can deploy the model. The easiest way to do this is to call the deploy method of the Model class, like this:

    from azureml.core.model import Model

    service = Model.deploy(workspace=ws,
                           name='nyc-taxi-service',
                           models=[model],
                           inference_config=inference_config,
                           deployment_config=deploy_config,
                           deployment_target=production_cluster)
    service.wait_for_deployment(show_output=True)

For ACI or local services, you can omit the deployment_target parameter (or set it to None).
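
Once the service is running, client applications call it over HTTP. The sketch below builds such a request with the standard library, assuming the JSON payload shape used by the entry script and, for AKS, key-based authentication. The helper name `build_scoring_request`, the placeholder URI, the placeholder key, and the feature values are all hypothetical. For a deployed service the URI is available as `service.scoring_uri`, and the keys can be obtained with `service.get_keys()` when key authentication is enabled.

```python
import json
import urllib.request


def build_scoring_request(scoring_uri, rows, key=None):
    """Build a POST request carrying the rows as {"data": rows} JSON."""
    body = json.dumps({"data": rows}).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    if key:
        # Key-based authentication passes the key as a bearer token
        headers["Authorization"] = f"Bearer {key}"
    return urllib.request.Request(scoring_uri, data=body,
                                  headers=headers, method="POST")


# Placeholder values; substitute the real scoring URI and key
request = build_scoring_request("http://localhost:8890/score",
                                [[1.0, 2.5, 3.0]],
                                key="not-a-real-key")
print(request.get_method(), request.full_url)

# Sending the request requires a running service:
#   with urllib.request.urlopen(request) as resp:
#       predictions = json.loads(resp.read())
```

Separating request construction from sending keeps the payload format easy to check without a live endpoint.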

Troubleshoot model deployment

Service deployment involves many elements, including the trained model, the runtime environment configuration, the scoring script, the container image, and the container host. Troubleshooting a failed deployment, or an error when consuming a deployed service, can be complex.

Check the service state

As an initial troubleshooting step, you can check the status of a service by examining its state:

    from azureml.core.webservice import AksWebservice

    # Get the deployed service
    service = AksWebservice(name='nyc-taxi-service', workspace=ws)

    # Check its state
    print(service.state)

To view the state of a service, you must use the compute-specific service type (for example, AksWebservice) and not a generic Webservice object.

For an operational service, the state should be Healthy.
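
Because a freshly deployed service can take a while to become Healthy, a small polling loop is sometimes useful. The sketch below is a generic helper, not part of the Azure ML SDK: `wait_until_healthy`, its parameters, and the simulated state sequence are hypothetical, and the state names other than Healthy are assumptions to adjust to what your service actually reports. With a real service, `get_state` could re-fetch the service object and return its `state`.

```python
import time


def wait_until_healthy(get_state, timeout_s=300, interval_s=10,
                       failed_states=("Failed", "Unhealthy"),
                       sleep=time.sleep):
    """Poll get_state() until it returns 'Healthy'.

    Raises RuntimeError if a state in failed_states is seen, and
    TimeoutError once timeout_s seconds of waiting have elapsed.
    """
    waited = 0
    while True:
        state = get_state()
        if state == "Healthy":
            return state
        if state in failed_states:
            raise RuntimeError(f"Service entered state {state!r}")
        if waited >= timeout_s:
            raise TimeoutError(f"Still {state!r} after {waited} seconds")
        sleep(interval_s)
        waited += interval_s


# Simulated sequence of states standing in for a real service
simulated = iter(["Transitioning", "Transitioning", "Healthy"])
final_state = wait_until_healthy(lambda: next(simulated),
                                 sleep=lambda seconds: None)
print(final_state)
```

The `sleep` parameter exists only so the loop can be exercised without real delays; in normal use the default `time.sleep` applies.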

Review service logs

If a service is not healthy, or you are experiencing errors when using it, you can review its logs:

    print(service.get_logs())

The logs include detailed information about the provisioning of the service, and the requests it has processed, and can often provide an insight into the cause of unexpected errors.

Deploy to a local container

Deployment and runtime errors can be easier to diagnose by deploying the service as a container in a local Docker instance, like this:

    from azureml.core.model import Model
    from azureml.core.webservice import LocalWebservice

    deployment_config = LocalWebservice.deploy_configuration(port=8890)
    service = Model.deploy(ws, 'test-svc', [model], inference_config, deployment_config)

You can then test the locally deployed service using the SDK:

    # json_data is a JSON string in the format that run() expects
    print(service.run(input_data = json_data))

You can then troubleshoot runtime issues by making changes to the scoring file that is referenced in the inference configuration, and reloading the service without redeploying it (something you can only do with a local service):

    service.reload()
    print(service.run(input_data = json_data))

The post Deploy Azure Databricks Model in Azure Machine Learning appeared first on Cloud Training Program.