In machine learning, models are trained to predict unknown labels for new data based on correlations between known labels and features found in the training data. Depending on the algorithm used, you may need to specify hyperparameters to configure how the model is trained.

In this blog, we are going to cover the basics of hyperparameters, hyperparameter tuning, search space, and how to tune hyperparameters in Azure.

What Are Hyperparameters?

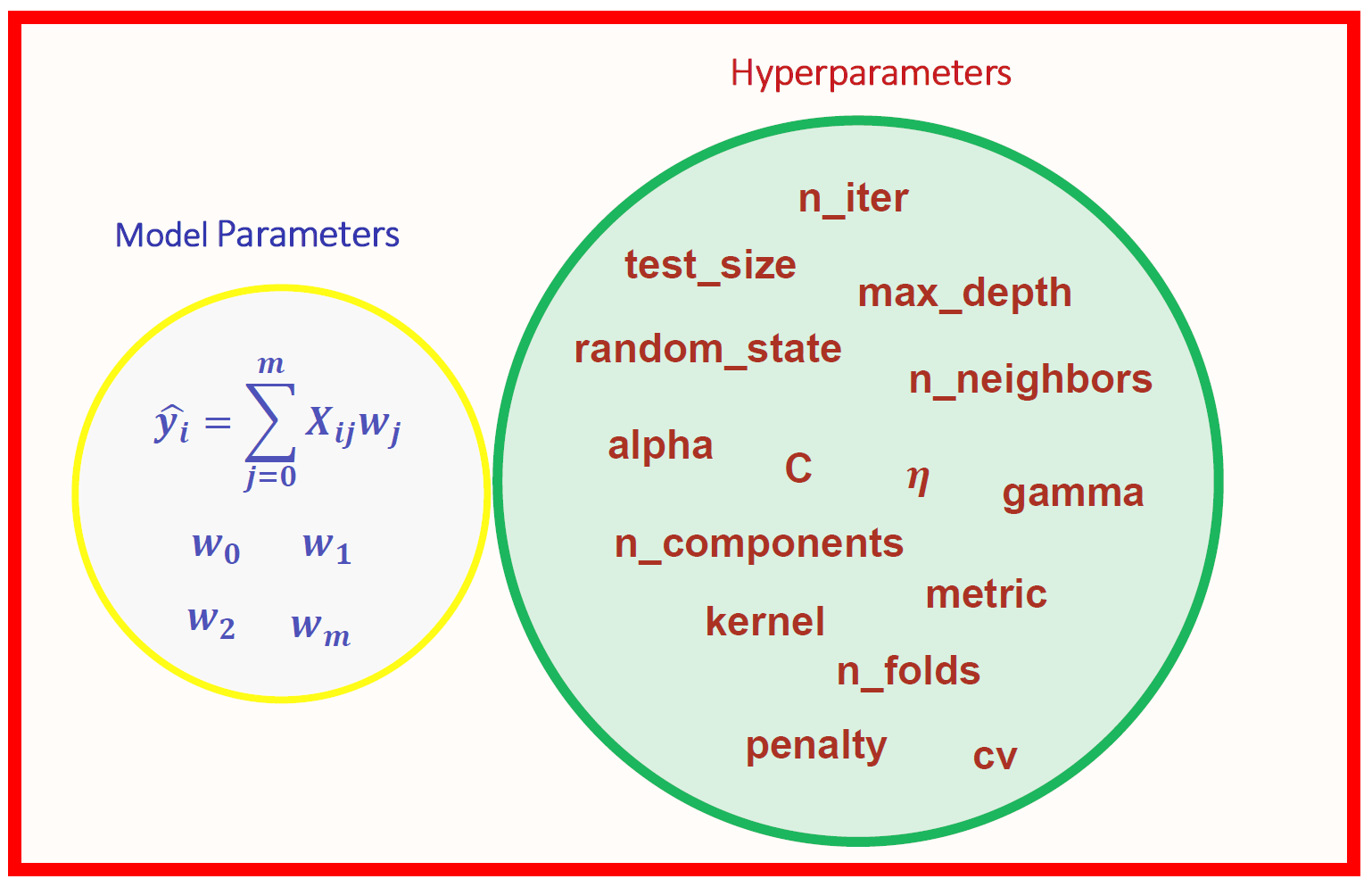

There are two types of parameters in machine learning:

Model parameters are parameters in the model that must be determined using the training data set. These are the fitted parameters, for example weights and biases, or split points in a decision tree.

Hyperparameters are adjustable parameters that control the model training process. Model performance depends heavily on hyperparameters.

Automating the selection of good hyperparameters has the following advantages:

- Efficient search across the space of possible hyperparameters.

- Easy management of a large set of experiments for hyperparameter tuning.

What Is Hyperparameter Tuning?

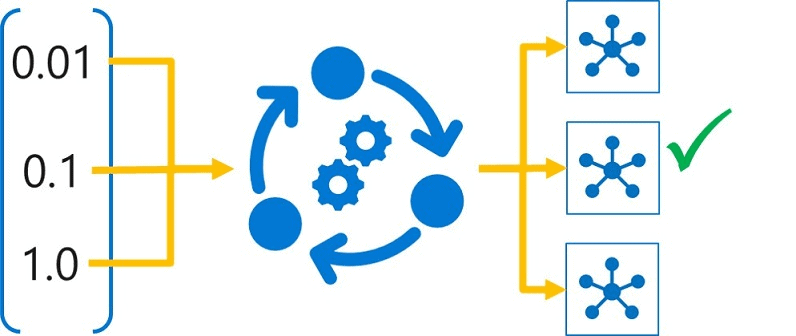

Hyperparameter tuning is the process of finding the configuration of hyperparameters that results in the best performance. The process is computationally expensive and, when done by hand, involves a lot of manual work. It is accomplished by training multiple models, using the same algorithm and training data but different hyperparameter values. The model from each training run is then evaluated on the performance metric you want to optimize (for example, accuracy), and the best-performing model is selected.
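The loop described above can be sketched in a few lines of plain Python. This is only an illustration and does not use Azure: `train_and_evaluate` is a hypothetical stand-in for a real training run, and its scoring formula is invented so the example is self-contained.

```python
def train_and_evaluate(reg_rate: float) -> float:
    """Toy stand-in for one training run; returns a validation score.

    The formula is invented for illustration and peaks at reg_rate = 0.1.
    """
    return 1.0 - (reg_rate - 0.1) ** 2


# Candidate hyperparameter values to try (same algorithm, same data each time).
candidates = [0.01, 0.1, 0.5, 1.0]

# One training run per candidate, recording the metric for each.
results = {reg_rate: train_and_evaluate(reg_rate) for reg_rate in candidates}

# Select the configuration with the best metric (here, the highest score).
best_reg_rate = max(results, key=results.get)
print(best_reg_rate, results[best_reg_rate])
```

A tuning service automates exactly this pattern: it chooses the candidates, runs the training jobs (often in parallel), and tracks which run scored best.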

Search Space

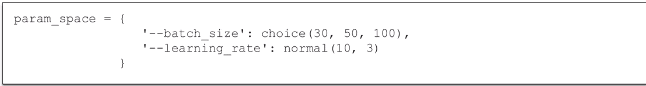

The set of hyperparameter values tried during hyperparameter tuning is known as the search space. The definition of the range of possible values that can be chosen depends on the type of hyperparameter.

Hyperparameters can be discrete or continuous and have a distribution of values described by a parameter expression.

Discrete hyperparameters: The search space for a discrete hyperparameter is a choice from a list of explicit values, which can be defined as:

- an arbitrary list object (choice([10, 20, 30]))

- a range object (choice(range(1, 10))), and

- one or more comma-separated values (choice(20, 60, 100))

Continuous hyperparameters: Continuous hyperparameters are specified as a distribution over a continuous range of values. To define a search space for these kinds of values, any of the following distribution types can be used:

- uniform(low, high)

- loguniform(low, high)

- normal(mu, sigma)

- lognormal(mu, sigma)
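To make the parameter expressions above more concrete, here is a minimal plain-Python sketch of what drawing a value from each one could look like. The `sample_*` helpers and the hyperparameter names are hypothetical and are not the Azure ML functions themselves. The sketch assumes, as the Azure ML documentation describes, that loguniform(low, high) returns exp(uniform(low, high)) and lognormal(mu, sigma) returns exp(normal(mu, sigma)), so the bounds of loguniform are given in log space.

```python
import math
import random

rng = random.Random(0)  # fixed seed so repeated runs draw the same values


def sample_choice(options):
    """Discrete: pick one value from an explicit list or range."""
    return rng.choice(list(options))


def sample_uniform(low, high):
    """Continuous: uniformly distributed between low and high."""
    return rng.uniform(low, high)


def sample_loguniform(low, high):
    """exp(uniform(low, high)): low and high bound the logarithm of the value."""
    return math.exp(rng.uniform(low, high))


def sample_normal(mu, sigma):
    """Continuous: normally distributed with mean mu and std. deviation sigma."""
    return rng.gauss(mu, sigma)


def sample_lognormal(mu, sigma):
    """exp(normal(mu, sigma)): always positive."""
    return math.exp(rng.gauss(mu, sigma))


# One sampled configuration; the hyperparameter names are illustrative only.
config = {
    "batch_size": sample_choice([16, 32, 64]),
    "num_layers": sample_choice(range(1, 10)),
    "dropout": sample_uniform(0.0, 0.5),
    "learning_rate": sample_loguniform(math.log(1e-4), math.log(1e-1)),
    "momentum": sample_normal(0.9, 0.05),
    "weight_decay": sample_lognormal(-7.0, 1.0),
}
print(config)
```

Log-scaled distributions are a common choice for values such as learning rates, where candidates that differ by orders of magnitude are all plausible.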

Hyperparameter Sampling

Hyperparameter sampling refers to specifying the parameter sampling method to use over the hyperparameter space. The following methods are supported by Azure Machine Learning:

- Random sampling

- Grid sampling

- Bayesian sampling

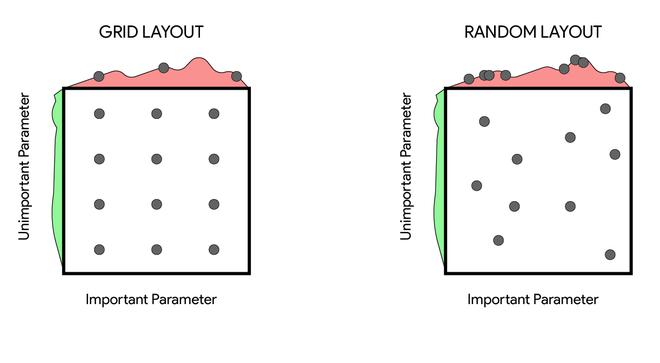

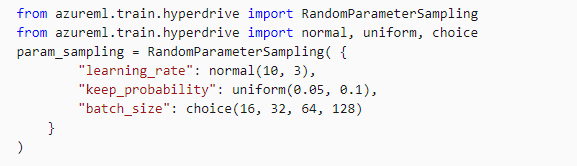

Random sampling: Random sampling supports both discrete and continuous hyperparameters. Values are selected at random from the defined search space. It supports early termination of low-performance runs.
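As a rough illustration of random sampling over a mixed search space, the sketch below draws a fixed number of configurations in plain Python. It is not the Azure ML implementation: `random_sampling`, the search-space layout, and the hyperparameter names are all assumptions made for the example.

```python
import random


def random_sampling(search_space, max_total_runs, seed=0):
    """Draw max_total_runs independent configurations from the search space."""
    rng = random.Random(seed)
    return [
        {name: draw(rng) for name, draw in search_space.items()}
        for _ in range(max_total_runs)
    ]


# Each entry maps a (hypothetical) hyperparameter name to a sampling function.
search_space = {
    "batch_size": lambda rng: rng.choice([16, 32, 64]),     # discrete
    "learning_rate": lambda rng: rng.uniform(0.001, 0.1),   # continuous
}

runs = random_sampling(search_space, max_total_runs=5)
for run_config in runs:
    print(run_config)
```

The number of runs is set by the budget rather than by the size of the search space, which is why random sampling also works when some hyperparameters are continuous.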

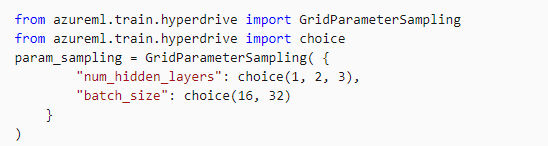

Grid sampling: Grid sampling supports only discrete hyperparameters and can be used only with choice hyperparameters. It supports early termination of low-performance runs and is generally used when you have the budget to search the entire search space exhaustively.
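The exhaustive nature of grid sampling can be sketched with `itertools.product`. This is a plain-Python illustration rather than the Azure ML implementation, and the hyperparameter names and values are made up for the example.

```python
from itertools import product

# Discrete search space: every hyperparameter is a list of explicit choices.
search_space = {
    "batch_size": [16, 32, 64],
    "num_layers": [1, 2],
}

# Grid sampling tries every combination of the listed values.
names = list(search_space)
grid = [dict(zip(names, values)) for values in product(*search_space.values())]

print(len(grid))
for run_config in grid:
    print(run_config)
```

With three batch sizes and two layer counts the grid has 3 × 2 = 6 combinations. The count multiplies with every hyperparameter added, which is why grid sampling only suits small discrete spaces.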

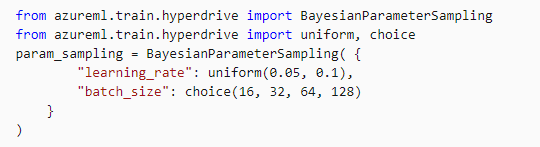

Bayesian sampling: Bayesian sampling supports choice, uniform, and quniform distributions over the search space and is based on the Bayesian optimization algorithm.

Early Termination Policy

With a sufficiently large hyperparameter search space, it could take many iterations (child runs) to try every possible combination. Typically, you set a maximum number of iterations, but this can still lead to a large number of runs that do not produce a better model than a combination that has already been tried.

To avoid wasting time, you can set an early termination policy that abandons runs that are unlikely to produce a better result than previously completed runs.

Azure Machine Learning supports the following four early termination policies:

- Bandit Policy

- Median Stopping Policy

- Truncation Selection Policy

- No Termination Policy
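As one example of how such a policy decides, the sketch below mimics the slack-factor rule of a bandit policy in plain Python. It reflects the behavior as described in the Azure ML documentation for a metric that is being maximized: a run is stopped when its metric falls below best / (1 + slack_factor). The function name and the run data are hypothetical, and the real policy also has an evaluation interval that this sketch leaves out.

```python
def bandit_should_stop(run_metric, best_metric, slack_factor):
    """Return True if a run falls outside the allowed slack (maximize goal)."""
    threshold = best_metric / (1 + slack_factor)
    return run_metric < threshold


# Metrics reported by three hypothetical child runs at the same interval.
metrics = {"run_a": 0.80, "run_b": 0.70, "run_c": 0.60}
best = max(metrics.values())

# With slack_factor = 0.2 the threshold is 0.8 / 1.2, roughly 0.667.
stopped = [
    name for name, value in metrics.items()
    if bandit_should_stop(value, best, slack_factor=0.2)
]
print(stopped)
```

Here only `run_c` is below the threshold, so it would be abandoned while the other two runs continue.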

How To Tune Hyperparameters In Azure

Now that we understand what hyperparameters are and the terms related to them, let's look at how to tune the hyperparameters of a machine learning model in Azure.

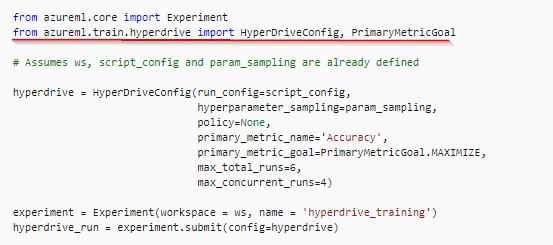

In Azure Machine Learning, you can tune hyperparameters by running a hyperdrive experiment.

Follow these steps once the Azure environment is set up, that is, the compute targets are created, the dataset is imported, and the DP-100 user (notebook) folder is cloned in Jupyter.

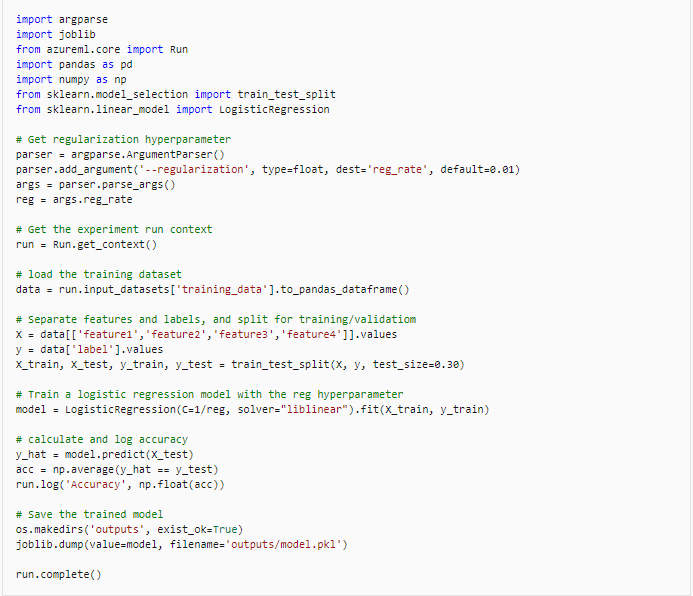

1.) The first step is to create a training script for the experiment.
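A training script for tuning generally needs two things: it accepts each hyperparameter as a command-line argument, and it reports the metric to optimize. The sketch below shows that shape in plain Python. The argument names, default values, and the `train` function are hypothetical placeholders, and the metric is only printed; in an Azure ML script it would typically be logged to the run instead (for example with `Run.get_context().log(...)` in SDK v1).

```python
import argparse


def parse_hyperparameters(argv=None):
    """Read the hyperparameters that the tuning service passes to the script."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--learning_rate", type=float, default=0.01)
    parser.add_argument("--batch_size", type=int, default=32)
    return parser.parse_args(argv)


def train(learning_rate, batch_size):
    """Placeholder for real training; returns an invented accuracy value."""
    return 1.0 / (1.0 + abs(learning_rate - 0.05) + batch_size / 1000)


def main(argv=None):
    args = parse_hyperparameters(argv)
    accuracy = train(args.learning_rate, args.batch_size)
    # The metric name must match the primary metric named in the tuning config.
    print(f"Accuracy: {accuracy}")
    return accuracy


if __name__ == "__main__":
    main()
```

Each child run then calls the same script with a different combination of argument values.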

2.) After creating the script you can configure and run the experiment, but you must use a HyperDriveConfig object to configure the experiment run.
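A configuration along these lines might look like the following sketch, which assumes the Azure ML Python SDK v1 (`azureml-sdk`) and an already-created `ScriptRunConfig` passed in as `script_config`. The argument names, value ranges, metric name, and run limits are illustrative assumptions and must match your own training script.

```python
def build_hyperdrive_config(script_config):
    """Build a HyperDriveConfig for an existing ScriptRunConfig (SDK v1)."""
    # Imported inside the function so this module still loads on machines
    # where the azureml-sdk package is not installed.
    from azureml.train.hyperdrive import (
        BanditPolicy,
        HyperDriveConfig,
        PrimaryMetricGoal,
        RandomParameterSampling,
        choice,
        uniform,
    )

    # Search space: keys are the script arguments (assumed names).
    sampling = RandomParameterSampling({
        "--learning_rate": uniform(0.01, 0.1),
        "--batch_size": choice(16, 32, 64),
    })

    # Early termination: stop runs that fall outside the slack factor.
    policy = BanditPolicy(evaluation_interval=2, slack_factor=0.1)

    return HyperDriveConfig(
        run_config=script_config,
        hyperparameter_sampling=sampling,
        policy=policy,
        primary_metric_name="Accuracy",  # must match the metric the script logs
        primary_metric_goal=PrimaryMetricGoal.MAXIMIZE,
        max_total_runs=20,
        max_concurrent_runs=4,
    )
```

The returned object is then submitted like any other run configuration, for example with `experiment.submit(config=...)` on an `Experiment` in your workspace.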

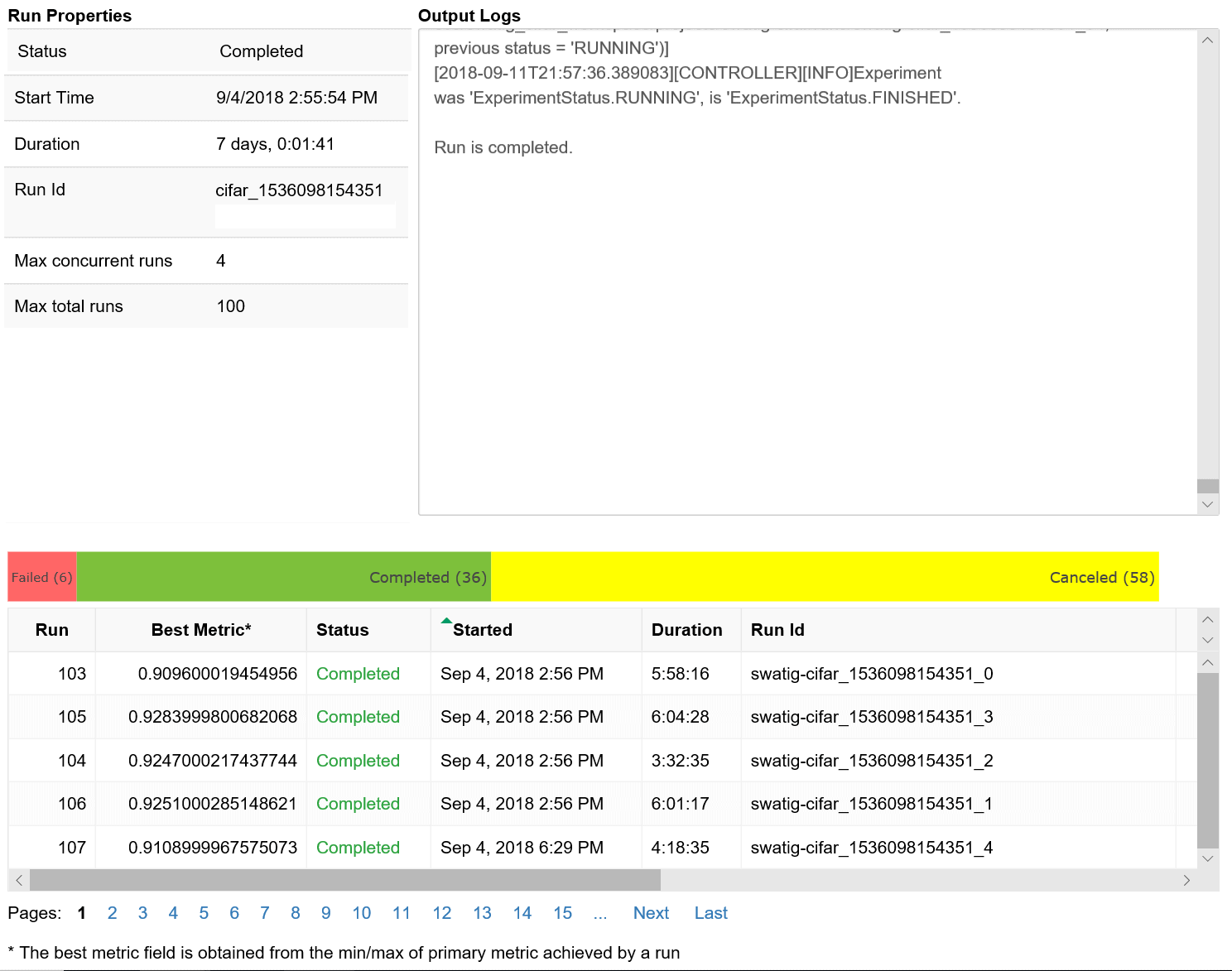

3.) After running the experiment, you can monitor the Hyperdrive experiment in Azure Machine Learning studio, or by using the RunDetails widget in a Jupyter notebook.

4.) You can also visualize the training run in the Jupyter notebook.

So this is how you can tune hyperparameters in Azure from the Azure portal or a Jupyter notebook.

The post Overview of Hyperparameter Tuning In Azure appeared first on Cloud Training Program.