MLOps (Machine Learning Operations) is based on DevOps principles and practices that increase workflow efficiency and improve the quality and consistency of machine learning solutions.

In this blog, we will learn more about MLOps, the MLOps pipelines, and an architecture that shows how to implement continuous integration (CI), continuous delivery (CD), and a retraining pipeline for an AI application using Azure Machine Learning and Azure DevOps.

MLOps is covered in our DP-100 Design & Implement a Data Science solution on Azure training.

Overview of MLOps

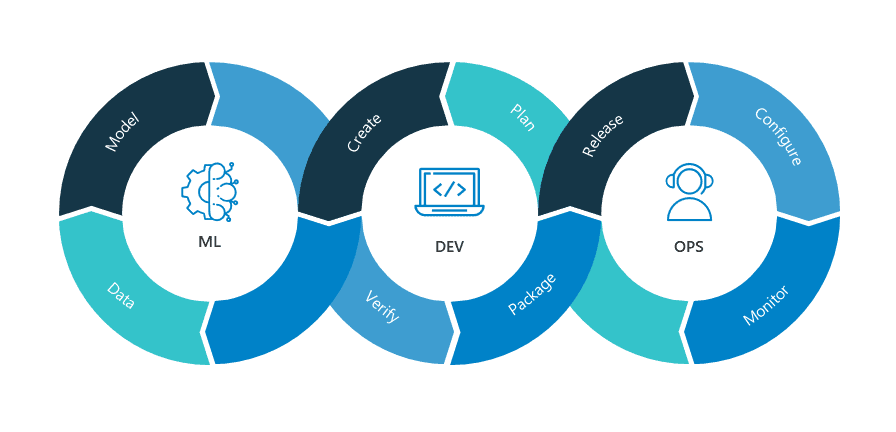

MLOps = ML + DEV + OPS

MLOps is a machine learning engineering culture and practice that aims to unify ML system development (Dev) and ML system operation (Ops). It applies DevOps principles and practices such as continuous integration, delivery, and deployment to the machine learning process, with the aim of faster experimentation, development, and deployment of machine learning models into production, backed by quality assurance.

Azure Machine Learning provides the following MLOps capabilities:

- Create reproducible ML pipelines

- Create reusable software environments

- Register, package, and deploy models from anywhere

- Capture the governance data for the end-to-end ML lifecycle

- Notify and alert on events in the ML lifecycle

- Monitor ML applications for operational and ML-related issues

- Automate the end-to-end ML lifecycle with Azure Machine Learning and Azure Pipelines

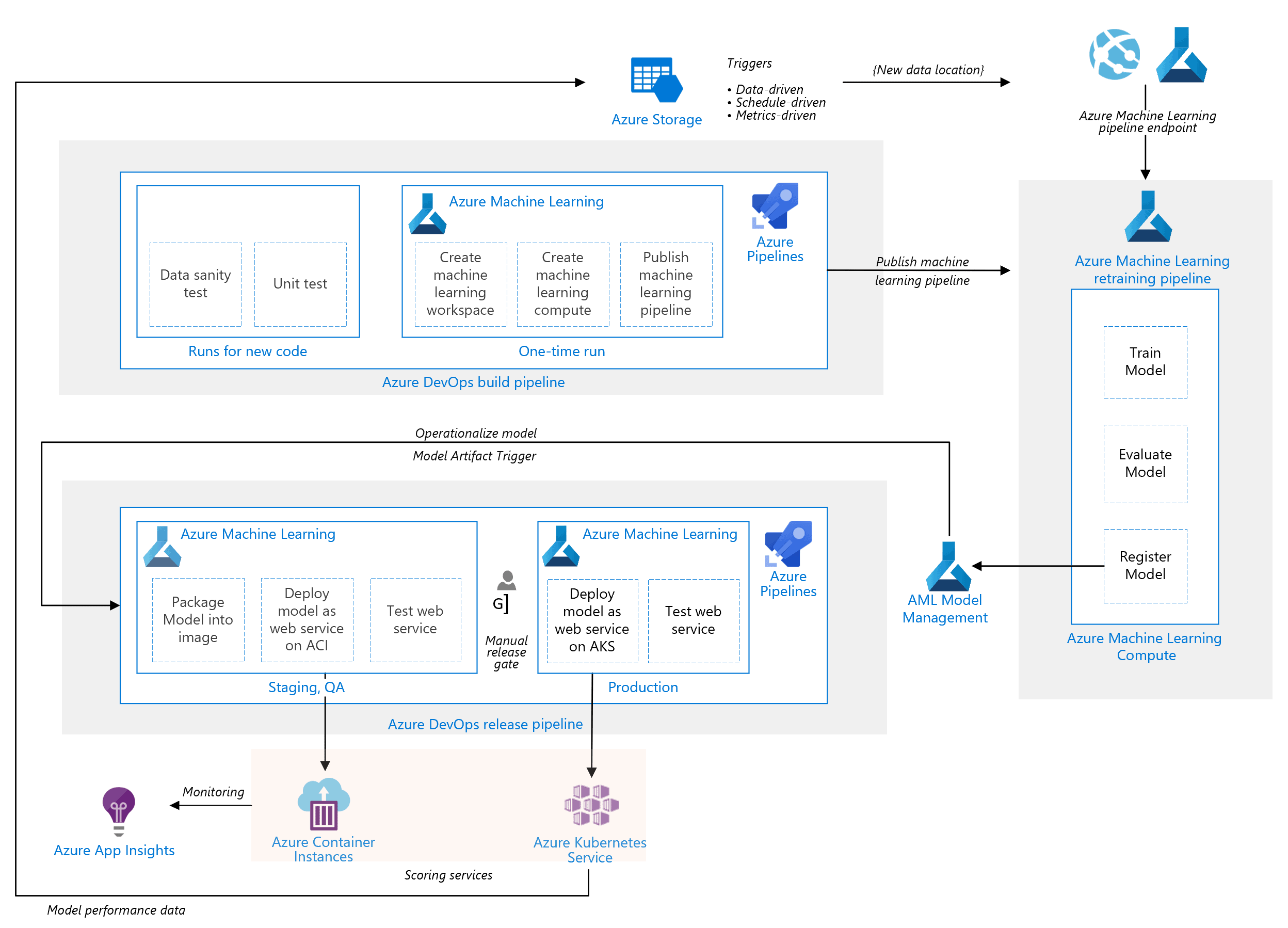

The Architecture Of MLOps For Python Models Using Azure ML Service

This architecture describes how we can implement continuous integration (CI), continuous delivery (CD), and a retraining pipeline for an AI application using Azure DevOps and Azure Machine Learning.

It has the following components:

1.) Azure Pipelines- This build and test system is based on Azure DevOps and is used for the build and release pipelines. Azure Pipelines breaks these pipelines into logical steps called tasks.

2.) Azure Machine Learning- This architecture uses the Azure Machine Learning SDK for Python to create a workspace (a space for experiments), compute resources, and more.

3.) Azure Machine Learning Compute- A cluster of virtual machines on which training jobs run.

4.) Azure Machine Learning Pipelines- Provides reusable machine learning workflows. The pipeline is published or updated at the end of the build phase and is triggered when new data arrives.

5.) Azure Blob Storage- Blob containers store the logs from the scoring service. In this architecture, both the input data and the model predictions are collected.

6.) Azure Container Registry- The scoring Python script is packaged as a Docker image and versioned in this registry.

7.) Azure Container Instances- As part of the release pipeline, the QA and staging environments are mimicked by deploying the scoring web service image to Container Instances, which provides an easy, serverless way to run a container.

8.) Azure Kubernetes Service- It eases the process of deploying a managed Kubernetes cluster in Azure. Once the scoring image has been tested in QA, it is deployed to production on this cluster.

9.) Azure Application Insights- It is a monitoring service used for detecting performance anomalies.
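Application Insights provides this monitoring as a managed service, so you do not write the detector yourself. Purely to illustrate what "detecting performance anomalies" means, here is a conceptual plain-Python sketch that flags request latencies far above the recent norm. The rule (trailing-window mean plus k standard deviations) and the function name `find_latency_anomalies` are assumptions for illustration; this is not how Application Insights works internally.

```python
from statistics import mean, stdev


def find_latency_anomalies(latencies_ms, window=20, k=3.0, min_sigma_ms=1.0):
    """Return the indices of requests whose latency exceeds
    mean + k * stdev of the previous `window` requests.

    `min_sigma_ms` puts a floor under the standard deviation so that a
    perfectly steady history does not turn every 1 ms wobble into an alert.
    """
    if window < 2:
        raise ValueError("window must be at least 2 to compute a stdev")
    anomalies = []
    for i in range(window, len(latencies_ms)):
        history = latencies_ms[i - window:i]  # trailing window, excludes request i
        threshold = mean(history) + k * max(stdev(history), min_sigma_ms)
        if latencies_ms[i] > threshold:
            anomalies.append(i)
    return anomalies
```

For example, `find_latency_anomalies([100, 102, 98, 101, 99] * 4 + [100, 400, 101])` flags only index 21, the 400 ms request.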

MLOps Pipelines

The architecture shown in the above image is based on 3 pipelines:

1.) Build Pipeline- This CI pipeline is triggered every time code is checked in. After building the code and running tests, it publishes an updated Azure Machine Learning pipeline. It performs the following tasks:

- Code Quality

- Unit Test

- Data Test

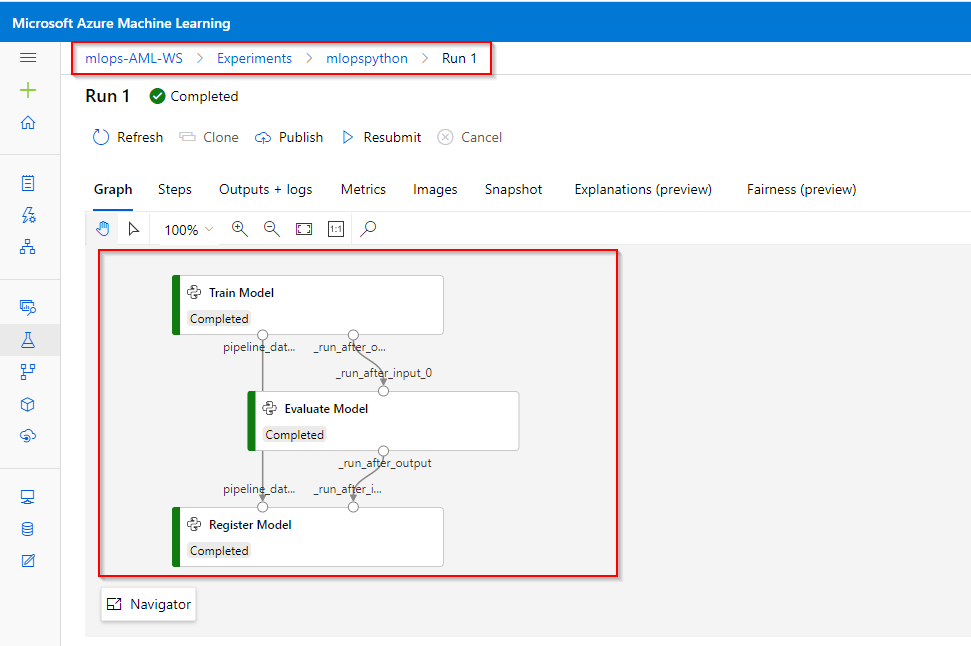
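The exact checks MLOpsPython runs are not covered in this post. As a hedged illustration of what a Data Test step can check, the function below verifies that CSV training data has the expected columns and that every value is present and numeric. The column names are hypothetical, and a test runner such as pytest could wrap the function in `assert validate_dataset(...) == []`.

```python
import csv
import io

# Hypothetical schema for illustration; use your dataset's real column names.
EXPECTED_COLUMNS = ["age", "sex", "bmi", "bp", "target"]


def validate_dataset(csv_text, expected_columns=EXPECTED_COLUMNS):
    """Return a list of problems found in the CSV text; empty means it passed."""
    problems = []
    reader = csv.DictReader(io.StringIO(csv_text))
    header = reader.fieldnames or []
    missing = [c for c in expected_columns if c not in header]
    if missing:
        problems.append(f"missing columns: {missing}")
    present = [c for c in expected_columns if c in header]

    row_count = 0
    for row_no, row in enumerate(reader, start=1):  # row 1 = first data row
        row_count += 1
        for col in present:
            value = row.get(col)
            if value is None or value.strip() == "":
                problems.append(f"row {row_no}: empty value in '{col}'")
                continue
            try:
                float(value)
            except ValueError:
                problems.append(f"row {row_no}: non-numeric '{col}'={value!r}")
    if row_count == 0:
        problems.append("dataset has no rows")
    return problems
```

Returning a list of messages rather than raising on the first error lets the build log show every data problem in one run.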

2.) Retraining Pipeline- It retrains the model on a schedule or when new data becomes available. It covers the following steps:

- Train Model

- Evaluate Model

- Register Model
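The retraining pipeline's two decisions, when to retrain and whether to register the result, can be sketched in plain Python. This is a minimal illustration, not MLOpsPython's code: the 7-day schedule, the use of mean squared error (lower is better) as the comparison metric, and `InMemoryRegistry` (a hypothetical stand-in for the Azure Machine Learning model registry, with no Azure SDK calls) are all assumptions.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


def should_retrain(last_trained, new_rows, now=None,
                   interval=timedelta(days=7), min_new_rows=1):
    """Retrain on a schedule (every `interval`) or when enough new data arrived.
    The 7-day interval is a hypothetical default."""
    now = datetime.now() if now is None else now
    return (now - last_trained) >= interval or new_rows >= min_new_rows


@dataclass
class InMemoryRegistry:
    """Hypothetical stand-in for a model registry: a list of versions per name."""
    models: dict = field(default_factory=dict)

    def latest(self, name):
        versions = self.models.get(name, [])
        return versions[-1] if versions else None

    def register(self, name, metrics):
        versions = self.models.setdefault(name, [])
        entry = {"version": len(versions) + 1, "metrics": metrics}
        versions.append(entry)
        return entry


def evaluate_and_register(registry, name, new_mse):
    """Register the new model only if its MSE beats the latest registered one.
    Returns the new registry entry, or None if the candidate was rejected."""
    current = registry.latest(name)
    if current is not None and new_mse >= current["metrics"]["mse"]:
        return None  # Not an improvement: keep serving the registered version.
    return registry.register(name, {"mse": new_mse})
```

Rejecting a candidate that is no better than the registered model keeps a noisy retraining run from silently replacing a good model.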

3.) Release Pipeline- It operationalizes the scoring image and promotes it safely across environments, from QA to production.
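A release pipeline typically gates production behind a successful QA stage. The sketch below models only that control flow; `deploy` and `smoke_test` are hypothetical callables standing in for the real deployment and verification tasks (for example, an ACI deployment for QA and an AKS deployment for production), and the stage names are assumptions.

```python
def run_release(image_tag, deploy, smoke_test, stages=("qa", "prod")):
    """Promote `image_tag` through `stages` in order.

    `deploy(stage, image_tag)` returns an endpoint for the deployed service;
    `smoke_test(endpoint)` returns True if the service responds correctly.
    Promotion stops at the first failing stage, so later stages keep their
    previous image. Returns the list of stages that passed.
    """
    passed = []
    for stage in stages:
        endpoint = deploy(stage, image_tag)
        if not smoke_test(endpoint):
            break  # Do not promote a failing image any further.
        passed.append(stage)
    return passed
```

Modelling the stages as an ordered tuple means adding a separate staging environment is just one more entry in `stages`.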

Getting Started With MLOpsPython

Let’s walk through the steps to get MLOpsPython working with the sample ML project diabetes_regression. In this project, we create a linear regression model for diabetes prediction, and once these steps are complete, the project has CI/CD DevOps practices enabled for model training and serving.
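The sample's actual training code lives in the MLOpsPython repository and is not reproduced here. To make "a linear regression model" concrete, here is a dependency-free sketch of ordinary least squares for a single feature, plus the mean squared error an evaluation step could report. The function names are illustrative, and the toy numbers in the usage are made up, not taken from the diabetes dataset.

```python
def fit_simple_linear_regression(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares (one feature)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired observations")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def mean_squared_error(ys, preds):
    """Average squared difference between targets and predictions."""
    if not ys or len(ys) != len(preds):
        raise ValueError("need equal-length, non-empty sequences")
    return sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)
```

For example, fitting `xs = [1, 2, 3, 4]` and `ys = [3, 5, 7, 9]` gives a slope of 2.0 and an intercept of 1.0, and the training MSE is 0.0 because the points lie exactly on that line.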

Step 1: Sign in to the Microsoft Azure portal and the Azure DevOps portal. (You can use the same ID to log in to both portals.)

Note: If you do not have an Azure account, create one before moving forward with the steps. Check out our blog to learn how to create an Azure free trial account.

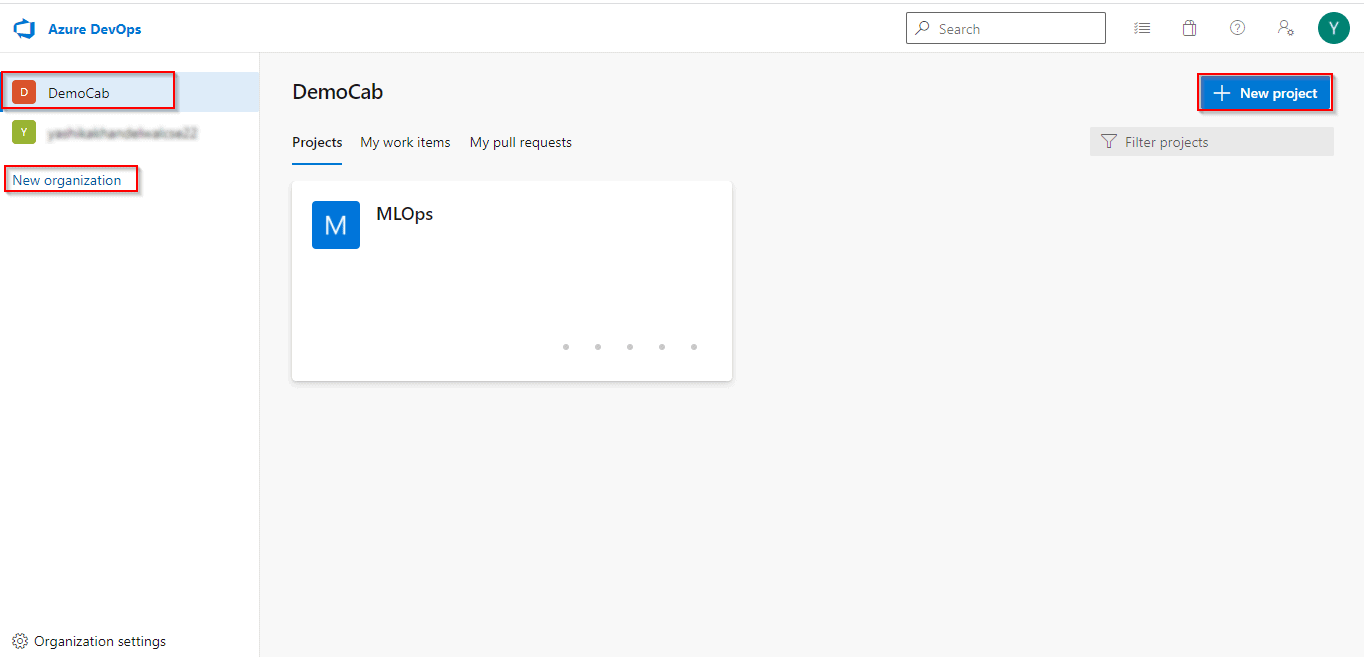

Step 2: In the Azure DevOps portal, if you are a first-time user, create a new organization and then click New Project. Enter a project name and keep Visibility set to Private.

Step 3: A new project is created. Next, we need the project code, which we will get from GitHub. You can get the code from the MLOpsPython documentation.

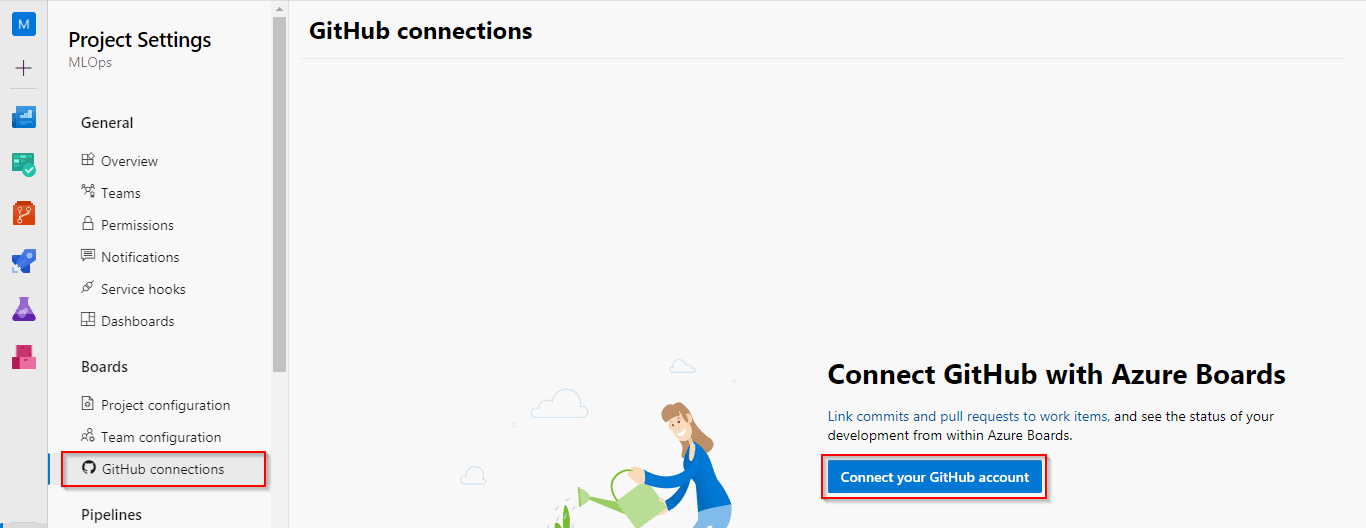

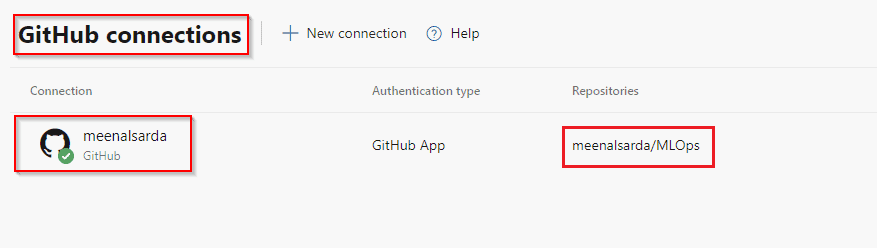

To add the code to our project, click Project Settings, select GitHub Connections, and then connect to your GitHub account (you may be asked to sign in to your GitHub account first).

Step 4: After connecting to the GitHub account, select the repository where you have the code.

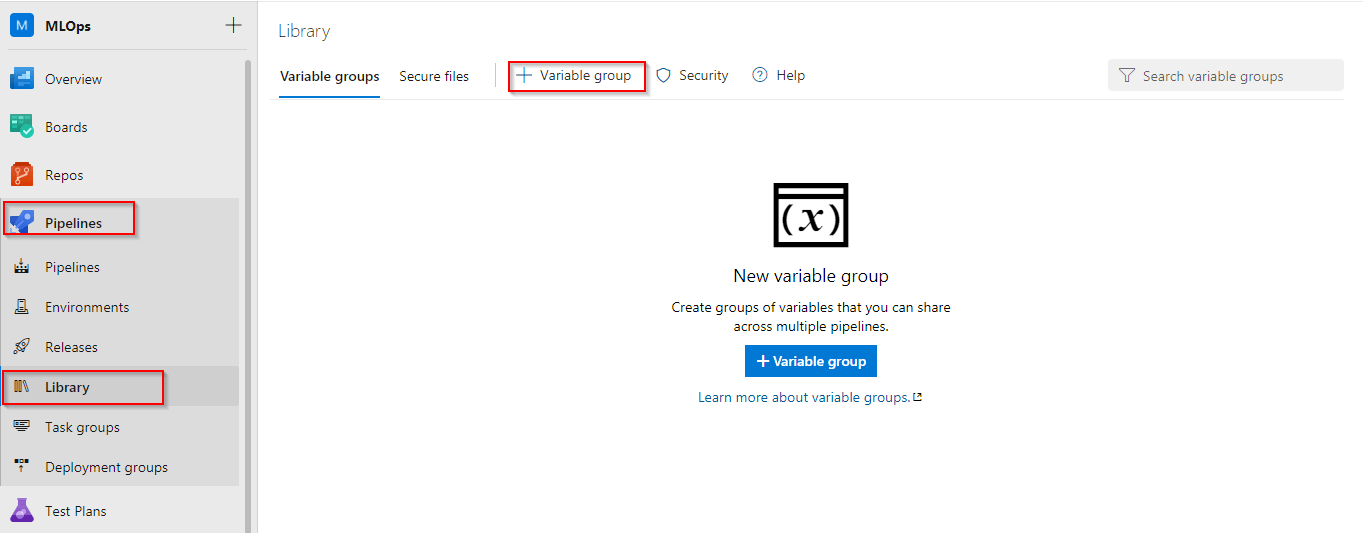

Step 5: Create a variable group for the pipeline. Select the Pipelines option and click Library to create a variable group.

Variable groups store values that you want to control and make available across multiple pipelines.

Note: You can also give the variable group the same name used in the MLOpsPython documentation.

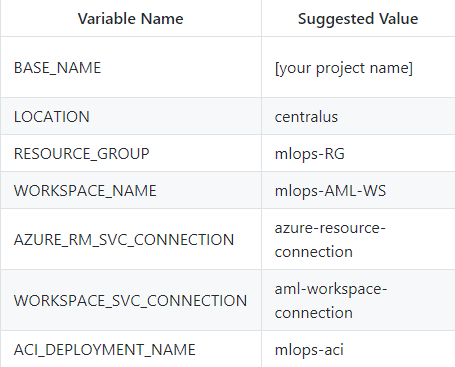

Step 6: Next, add variables to the variable group created in the previous step. Click the Add button and add the variables shown below.

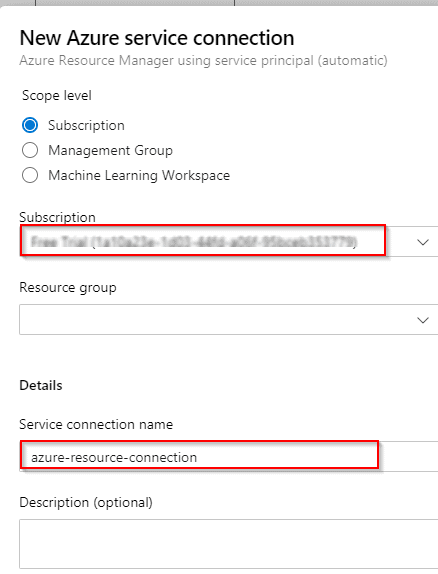
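Azure Pipelines exposes non-secret variables from a linked variable group to pipeline steps as environment variables (secret variables must be mapped explicitly). A pre-flight check such as the following, a hypothetical helper that is not part of MLOpsPython, can fail a run early when a variable is missing. `AZURE_RM_SVC_CONNECTION` is the variable used in this walkthrough; the other names are assumptions based on the MLOpsPython documentation, so match the list to the variables you actually added.

```python
import os

# AZURE_RM_SVC_CONNECTION is used in this walkthrough; the other names are
# assumed, so adjust this list to match your variable group.
REQUIRED_VARIABLES = [
    "BASE_NAME",
    "LOCATION",
    "RESOURCE_GROUP",
    "WORKSPACE_NAME",
    "AZURE_RM_SVC_CONNECTION",
    "WORKSPACE_SVC_CONNECTION",
]


def missing_variables(env=None, required=REQUIRED_VARIABLES):
    """Return the required variable names that are unset or blank in `env`
    (defaults to the process environment)."""
    env = os.environ if env is None else env
    return [name for name in required if not env.get(name, "").strip()]
```

A pipeline step could call `missing_variables()` and stop with a clear message listing the missing names when the result is non-empty.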

Step 7: Next, create a service connection for Azure Resource Manager. Click Project Settings and select Service Connections.

- Choose a Service Connection: Azure Resource Manager

- Authentication Method: Service Principal (automatic)

- Scope Level: Subscription (Your subscription will be populated in the box)

- Service connection name: Enter the name you specified in the AZURE_RM_SVC_CONNECTION variable, then click Save.

The connection is created, and the corresponding service principal can be viewed in the Azure portal under Azure Active Directory.

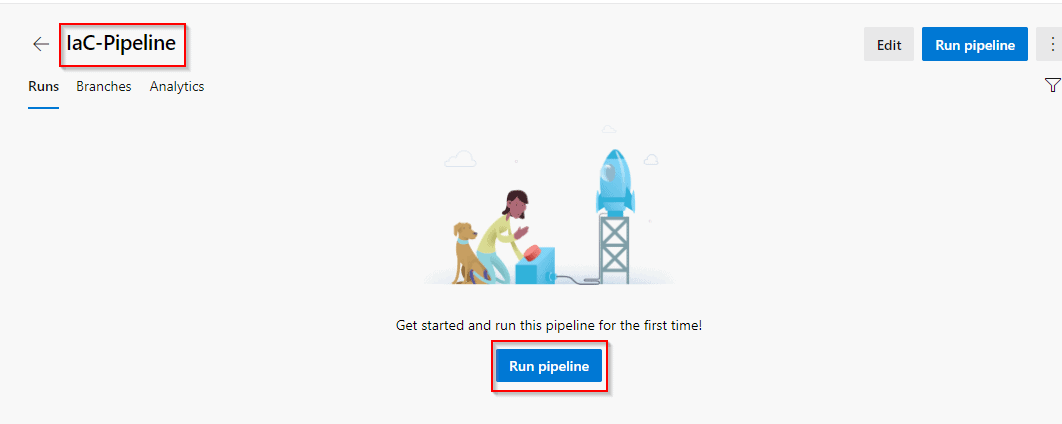

Step 8: Create an Infrastructure as Code (IaC) pipeline: click Pipelines and select New Pipeline.

- When asked for the code location, select GitHub.

- Where to configure from: Existing Azure Pipelines YAML file

- Specify the path to the pipeline YAML file and click Save.

You can review the created pipeline and rename it to IaC Pipeline so it is easy to identify later. Once the pipeline is created, click Run.

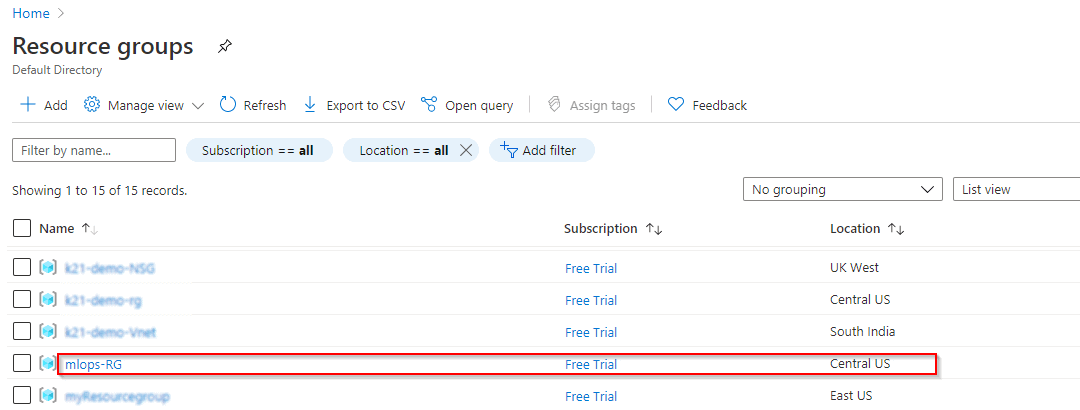

Step 9: After the pipeline starts running, you can see in the Azure portal that a new resource group is created, containing a Machine Learning service workspace.

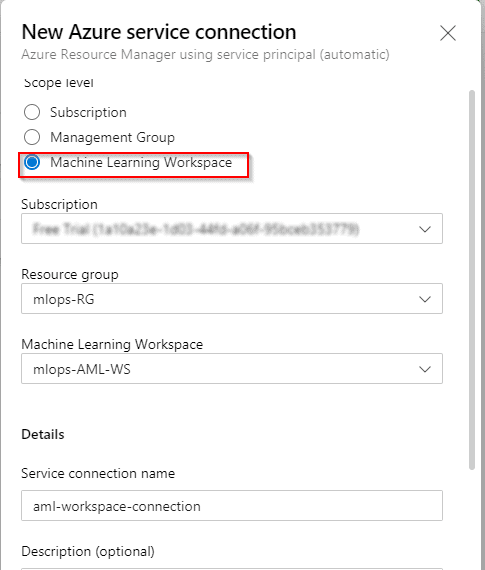

Step 10: Next, connect Azure DevOps to the Azure ML workspace.

For this, create a new service connection scoped to the Machine Learning workspace.

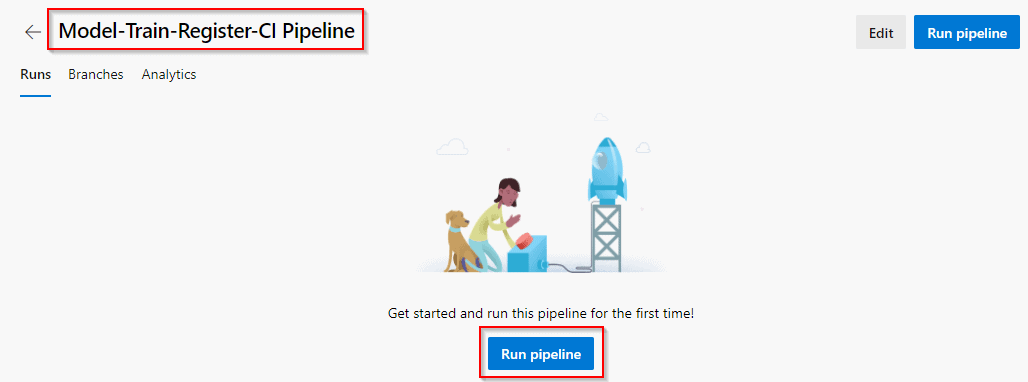

Step 11: After the connection is established, create the Model Train and Register CI pipeline and run it (this pipeline takes approximately 20 minutes to run).

Step 12: After the pipeline run completes, you can view the results in the Models and Pipelines sections of your workspace in the Azure portal.

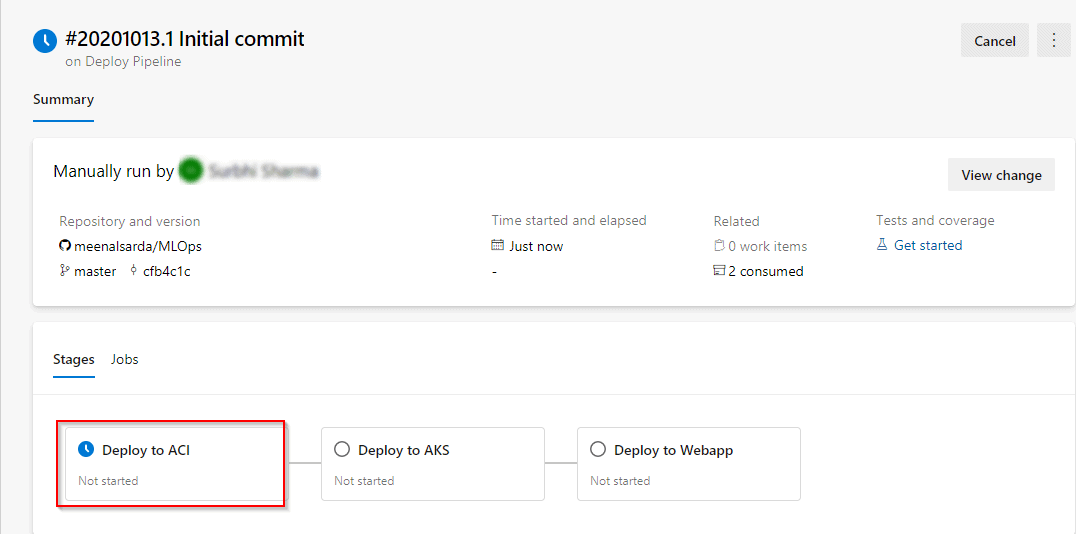

Step 13: To deploy the model, create another pipeline and run it. It offers three deployment options: Deploy to ACI, Deploy to AKS, or Deploy to Webapp.
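Whichever target you choose, the service runs a scoring (entry) script. Azure Machine Learning entry scripts define an `init()` function, which runs once when the service container starts, and a `run()` function, which handles each scoring request. The sketch below follows that shape using only the standard library; the hard-coded coefficients are a hypothetical stand-in for loading the registered model, and the `{"data": [...]}` request format is an assumption rather than the MLOpsPython sample's exact contract.

```python
import json

model = None  # Set by init(); module-level so run() can reach it.


def init():
    """Called once at startup. A real script would load the registered model
    file here; these coefficients are hypothetical placeholders."""
    global model
    model = {"coefficients": [2.0, -1.0], "intercept": 0.5}


def run(raw_data):
    """Score a JSON request of the assumed form {"data": [[x1, x2], ...]}."""
    try:
        rows = json.loads(raw_data)["data"]
        coefs, intercept = model["coefficients"], model["intercept"]
        preds = [sum(c * x for c, x in zip(coefs, row)) + intercept for row in rows]
        return {"result": preds}
    except (KeyError, TypeError, ValueError) as exc:
        # Bad JSON, a missing "data" key, or an uninitialized model.
        return {"error": str(exc)}
```

After `init()`, calling `run('{"data": [[1.0, 2.0], [3.0, 0.0]]}')` returns `{"result": [0.5, 6.5]}`.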

This is how we can get MLOpsPython working with a sample project.

The post Machine Learning Operations (MLOps) On Azure appeared first on Cloud Training Program.